Difference between revisions of "Archived-DMM"

Revision as of 10:12, 17 April 2018

DMM Facts

Project Lead: George Zhao

Repository: git clone https://gerrit.fd.io/r/dmm

What is DMM

DMM (Dual Mode, Multi-protocol, Multi-instance) aims to implement a transport-agnostic framework for network applications that can:

- Work with both user space and kernel space network stacks

- Use different network protocol stacks based on their functional and performance requirements (QoS)

- Work with multiple instances of a transport protocol stack

- Engage or adopt a new protocol stack dynamically as applicable

History

Emerging applications such as AR/VR and IoT are bringing extremely high performance requirements to their network systems. Many of these applications also have unique QoS/SLA demands: some need a low-latency network, some need high reliability, and so on. Although such performance targets apply to the complete communication system, the transport layer protocols play a key role and face a relatively greater challenge, because the TCP-based transport layer traditionally follows the “best-effort” principle and inherently provides no performance guarantees. Moreover, as internet applications rapidly grow and diversify, an all-powerful, one-size-fits-all protocol or algorithm becomes less feasible. The traditional single-instance TCP-based network stack therefore faces many challenges when serving applications with different QoS/SLA requirements simultaneously on the same platform.

Moving the networking stack out of the kernel is a growing trend in both industry implementations and academic literature. Technologies such as DPDK improve network stack performance by bypassing the kernel, avoiding context switches and data copies, and providing a complete set of packet-processing acceleration libraries.

With these trends in mind, DMM/nStack provides a framework in which system operators can plug in dedicated types of networking stack instances according to the performance and/or functional requirements of user space applications. The application need not consider changes to the transport layer API. A lightweight nStack management daemon is responsible for maintaining the stack instances and the app/socket-to-stack mappings, which are provided via the orchestration/management interface.

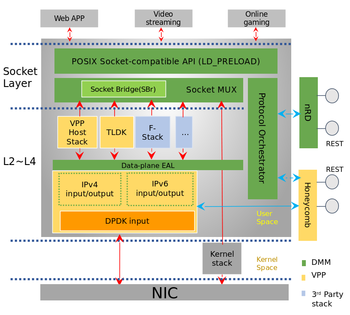

DMM Architecture

The DMM framework provides POSIX socket APIs to the application. A protocol stack can be plugged into DMM, and DMM chooses the most suitable stack according to the RD policy.
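To make the idea of policy-driven stack selection concrete, here is a minimal sketch in Python. It is not DMM's implementation: the `Rule` type, the choice of matching on destination port, and the stack name `userspace_tcp` are all hypothetical and exist only for illustration. The only behavior taken from the text is that a stack is chosen according to a policy; the fallback to a default stack is an additional assumption.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Rule:
    """Hypothetical policy rule: map a destination port range to a stack."""
    port_min: int
    port_max: int
    stack_name: str


def choose_stack(dest_port: int, rules: List[Rule],
                 registered: Set[str], default: str) -> str:
    """Return the first matching, registered stack, else the default."""
    for rule in rules:
        in_range = rule.port_min <= dest_port <= rule.port_max
        if in_range and rule.stack_name in registered:
            return rule.stack_name
    return default


# Assumed setup: "kernel" plus one made-up user space stack are plugged in.
registered = {"kernel", "userspace_tcp"}
rules = [
    Rule(5000, 5999, "userspace_tcp"),
    Rule(7000, 7999, "not_loaded"),  # refers to a stack that is not registered
]

print(choose_stack(5201, rules, registered, "kernel"))  # userspace_tcp
print(choose_stack(80, rules, registered, "kernel"))    # kernel
```

The point of the sketch is that the application only calls the socket API; which stack serves a given socket is decided by a lookup outside the application, so rules can change without touching application code.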

Quick Start

Environment Setup and testing app.

1. Download the DMM code

# git clone https://gerrit.fd.io/r/dmm

2. Set up the Vagrant VMs

# cd dmm/resources/extras

# vagrant up

This step takes a while, since it downloads, compiles, and installs DPDK, glog, and DMM.

3. Log in to server/client

# vagrant ssh dmm-server

or

# vagrant ssh dmm-client

4. The setup creates the directory /dmm/app_example/app-test, where you can test your client/server app. This directory contains two config files. The module_config.json file tells DMM to use the kernel TCP/IP protocol stack (currently the only supported stack; support for other stacks is planned):

{
    "default_stack_name": "kernel",
    "module_list": [
        {
            "stack_name": "kernel",
            "function_name": "kernel_stack_register",
            "libname": "./",
            "loadtype": "static",
            "deploytype": "1",
            "maxfd": "1024",
            "minfd": "0",
            "priorty": "1",
            "stackid": "0"
        }
    ]
}
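As a quick sanity check before editing module_config.json, the fragment above can be parsed with a few lines of Python. This sketch assumes the fragment is wrapped into a complete, strictly valid JSON document (enclosing braces, closed array, no trailing comma). The two consistency checks are assumptions about what a sensible config looks like, not rules taken from DMM; field names, including `priorty`, are copied as they appear in the config.

```python
import json

# Strict-JSON rendering of the module_config.json fragment shown above.
CONFIG_TEXT = """
{
  "default_stack_name": "kernel",
  "module_list": [
    {
      "stack_name": "kernel",
      "function_name": "kernel_stack_register",
      "libname": "./",
      "loadtype": "static",
      "deploytype": "1",
      "maxfd": "1024",
      "minfd": "0",
      "priorty": "1",
      "stackid": "0"
    }
  ]
}
"""


def check_module_config(text):
    """Parse the config and return a list of problems (empty if none)."""
    config = json.loads(text)  # raises json.JSONDecodeError on bad syntax
    modules = config["module_list"]
    names = [module["stack_name"] for module in modules]
    problems = []
    # Assumed check: the default stack should be one of the listed modules.
    if config["default_stack_name"] not in names:
        problems.append("default_stack_name is not in module_list")
    # Assumed check: numeric fields are stored as strings; compare as ints.
    for module in modules:
        if int(module["minfd"]) > int(module["maxfd"]):
            problems.append(f"{module['stack_name']}: minfd exceeds maxfd")
    return problems


problems = check_module_config(CONFIG_TEXT)
print(problems or "config looks consistent")  # config looks consistent
```

To check a file on disk instead, read it with `open(path).read()` and pass the text to `check_module_config`; a syntax error such as a stray trailing comma surfaces as a `json.JSONDecodeError`.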

The rd_config.json file describes the RD policies. DMM provides dynamic mapping between apps (connections/sockets) and candidate networking stack instances, and this flexibility is achieved by the RD. You can modify these files if required.

To learn more about RD, see DMM_DeveloperManual.md in the DMM repository (https://gerrit.fd.io/r/dmm).

5. Copy your program executable into the app-test directory, then run it with LD_PRELOAD from that directory:

# sudo LD_PRELOAD=../../release/lib64/libnStackAPI.so ./your_app

6. Post-testing cleanup. After testing is complete, run the following command to destroy the Vagrant VMs:

# vagrant destroy -f

Get Involved

- Bi-Weekly DMM Meeting.

- Join the DMM Mailing List.

- Join fdio-dmm IRC channel.

- Browse the code.

- 18.04 Release Plan

Reference

Enabling “Protocol Routing”: Revisiting Transport Layer Protocol Design in Internet Communications - [1]

Empower Diverse Open Transport Layer Protocol in the Cloud Networking, by Qing Chang - [At FD.io mini summit https://wiki.fd.io/view/File:DMM_-_2018_FDIO_Mini_Summit_@LA_v2.pdf]