Difference between revisions of "CSIT/VPP-16.06 Test Report Draft"

Mackonstan (Talk | contribs) |

|||

| (15 intermediate revisions by 2 users not shown) | |||

| Line 2: | Line 2: | ||

==Introduction== | ==Introduction== | ||

| − | This report aims to provide a comprehensive and self-explanatory summary of all CSIT test cases that have been executed against FD.io VPP-16.06 code release, driven by the automated test infrastructure developed within the FD.io CSIT | + | This report aims to provide a comprehensive and self-explanatory summary of all CSIT test cases that have been executed against FD.io VPP-16.06 code release, driven by the automated test infrastructure developed within the FD.io CSIT project (FD.io Continuous System and Integration Testing). |

| − | CSIT source code for test suites is available in [https://git.fd.io/cgit/csit/log/?h=stable/1606 CSIT branch stable/1606] in the directory [https://git.fd.io/cgit/csit/tree/tests/suites?h=stable/1606 ./tests/suites/<name_of_the_test_suite>]. A local copy of CSIT source code can be obtained by cloning CSIT git repository (<nowiki>"git clone https://gerrit.fd.io/r/csit"</nowiki>). The CSIT testing virtual environment can be run on a local workstation/laptop/server using Vagrant by following the instructions in [https://wiki.fd.io/view/CSIT#CSIT_Tutorials CSIT tutorials]. | + | CSIT source code for the executed test suites is available in [https://git.fd.io/cgit/csit/log/?h=stable/1606 CSIT branch stable/1606] in the directory [https://git.fd.io/cgit/csit/tree/tests/suites?h=stable/1606 ./tests/suites/<name_of_the_test_suite>]. A local copy of CSIT source code can be obtained by cloning CSIT git repository (<nowiki>"git clone https://gerrit.fd.io/r/csit"</nowiki>). The CSIT testing virtual environment can be run on a local workstation/laptop/server using Vagrant by following the instructions in [https://wiki.fd.io/view/CSIT#CSIT_Tutorials CSIT tutorials]. |

| − | + | The following sections provide a brief description of the CSIT performance and functional test suites executed against the VPP-16.06 release (vpp branch stable/1606). A description of the LF FD.io virtual and physical test environments is provided to aid anyone interested in reproducing the complete LF FD.io CSIT testing environment, in either virtual or physical test beds. The last two sections cover the complete list of CSIT test suites and test cases executed against the VPP-16.06 release (vpp branch stable/1606), with a description and results per test case. | |

| − | + | ||

==Functional tests description== | ==Functional tests description== | ||

| − | Functional tests run on virtual testbeds which are created in VIRL running on a Cisco UCS C240 | + | Functional tests run on virtual testbeds which are created in VIRL running on Cisco UCS C240 servers hosted in Linux Foundation labs. There is currently only one testbed topology used for functional testing: a three-node topology with two links between each pair of nodes, as shown in this diagram: |

<pre> | <pre> | ||

+--------+ +--------+ | +--------+ +--------+ | ||

| Line 31: | Line 30: | ||

The following functional test suites are included in the CSIT-16.06 Release: | The following functional test suites are included in the CSIT-16.06 Release: | ||

| − | * Bridge Domain: Verification of untagged L2 Bridge Domain features | + | * '''L2 Cross-Connect switching:''' Verification of L2 cross-connect for untagged and QinQ double-stacked 802.1Q VLANs. |

| − | * | + | * '''L2 Bridge-Domain switching:''' Verification of untagged L2 Bridge-Domain features. |

| − | + | * '''VXLAN:''' Verification of VXLAN tunnelling with L2 Bridge-Domain, L2 Cross-Connect and Ethernet untagged and 802.1Q VLAN tagged. | |

| − | + | * '''IPv4:''' Verification of IPv4 untagged features including ARP, ACL, ICMP, forwarding, etc. | |

| − | + | * '''DHCP:''' Verification of DHCP client. | |

| − | * IPv4: Verification of IPV4 untagged features including arp, acl, icmp, forwarding, etc. | + | * '''IPv6:''' Verification of IPv6 untagged features including ACL, ICMPv6, forwarding, neighbor solicitation, etc. |

| − | * IPv6: Verification of IPV6 untagged features including acl, icmpv6, forwarding, neighbor solicitation, etc. | + | * '''COP:''' Verification of COP address security whitelisting and blacklisting features. |

| − | * | + | * '''GRE:''' Verification of GRE Tunnel Encapsulation. |

| − | * LISP: Verification of untagged LISP dataplane and API functionality | + | * '''LISP:''' Verification of untagged LISP dataplane and API functionality. |

| − | * | + | * '''Honeycomb:''' Verification of the Honeycomb control plane interface. |

==Performance tests description== | ==Performance tests description== | ||

| − | Performance tests run on physical testbeds | + | Performance tests run on physical testbeds hosted in Linux Foundation labs and consist of three Cisco UCS C240 servers each with 2x XEON CPUs (E5-2699v3 2.3GHz 18c). The logical testbed topology is fundamentally the same structure as the functional testbeds, but for any given tests, there is only a single link between each pair of nodes as shown in this diagram: |

<pre> | <pre> | ||

+--------+ +--------+ | +--------+ +--------+ | ||

| Line 58: | Line 57: | ||

+-------+ | +-------+ | ||

</pre> | </pre> | ||

| − | + | At a physical level there are actually five units of 10GE and 40GE NICs per DUT made by different vendors: Intel 2p10GE NICs (x520, x710), Intel 40GE NICs (xl710), Cisco 2p10GE VICs, Cisco 2p40GE VICs. During test execution, all nodes are reachable through the MGMT network connected to every node via dedicated NICs and links (not shown above for clarity). Currently the performance tests only utilize one model of Intel NICs. | |

| + | Because performance testing is run on physical test beds and some tests require a long time to complete, the performance test jobs have been split into short duration and long duration variants. The long jobs run all of the long performance test suites discovering the throughput rates, and are run on a periodic basis. The short jobs run the short performance test suites verifying throughput against the reference rates, and are intended to be run against all VPP patches (although this is not currently enabled). There are also separate test suites for each NIC type. | ||

| − | + | The following performance test suites are included in the CSIT-16.06 Release: | |

| − | Intel X520-DA2 (10 GbE) | + | * Long performance test suites with Intel X520-DA2 (10 GbE): |

| − | + | *# '''L2 Cross-Connect:''' NDR & PDR for forwarding of untagged and QinQ 802.1Q VLAN tagged packets. | |

| − | * Bridge Domain: L2 Bridge Domain forwarding | + | *# '''L2 Bridge-Domain:''' NDR & PDR for L2 Bridge Domain forwarding. |

| − | * | + | *# '''IPv4:''' NDR & PDR for IPv4 routing. |

| − | + | *# '''IPv6:''' NDR & PDR for IPv6 routing. | |

| − | * IPv6: IPv6 | + | *# '''COP Address Security: '''NDR & PDR for IPv4 and IPv6 with COP security. |

| − | * | + | * Short performance test suites with Intel X520-DA2 (10 GbE): |

| − | + | *# '''L2 Cross-Connect:''' untagged and QinQ 802.1Q VLANs. | |

| − | Intel X520-DA2 (10 GbE) | + | *# '''L2 Bridge-Domain:''' L2 Bridge Domain forwarding. |

| − | + | *# '''IPv4: '''IPv4 routing. | |

| − | * Bridge Domain: | + | *# '''IPv6:''' IPv6 routing. |

| − | * | + | *# '''COP Address Security: '''IPv4 and IPv6 with COP security. |
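The long suites discover throughput rates (NDR, the non-drop rate, and PDR, the partial drop rate), while the short suites only verify throughput against reference rates. This report does not describe the search procedure itself, so the following is only a rough sketch of how an NDR search and a reference-rate check could be structured, assuming a binary search. The names <code>find_ndr</code>, <code>verify_reference</code>, <code>measure_loss</code> and the simulated DUT capacity are hypothetical and are not taken from the CSIT code.

```python
from typing import Callable, Optional

# Hypothetical callback type: offer traffic at `rate` (packets per second)
# for one trial and return the number of packets lost.
LossFn = Callable[[float], int]


def find_ndr(measure_loss: LossFn, min_rate: float, max_rate: float,
             precision: float) -> Optional[float]:
    """Binary-search the highest rate in [min_rate, max_rate] with zero loss.

    Returns None when even min_rate shows loss.
    """
    if measure_loss(max_rate) == 0:
        return max_rate
    if measure_loss(min_rate) > 0:
        return None
    lo, hi = min_rate, max_rate  # invariant: lo is loss-free, hi drops packets
    while hi - lo > precision:
        mid = (lo + hi) / 2.0
        if measure_loss(mid) == 0:
            lo = mid
        else:
            hi = mid
    return lo


def verify_reference(measure_loss: LossFn, reference_rate: float) -> bool:
    """Short-suite style check: a single trial at the reference rate."""
    return measure_loss(reference_rate) == 0


# Simulated DUT used only for illustration: drops above an assumed capacity.
SIMULATED_CAPACITY_PPS = 7.3e6


def simulated_loss(rate: float) -> int:
    return 0 if rate <= SIMULATED_CAPACITY_PPS else 1


if __name__ == "__main__":
    print(find_ndr(simulated_loss, 1e5, 14.88e6, 1e4))
    print(verify_reference(simulated_loss, 7.0e6))
```

A PDR search would follow the same shape, with the zero-loss condition replaced by a loss tolerance. The single-trial check shows why the short suites are cheap enough to run per patch, whereas a full search needs many trials.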

| − | + | ||

| − | * IPv6: | + | |

| − | * | + | |

==Functional tests environment== | ==Functional tests environment== | ||

| − | + | CSIT functional tests are currently executed in VIRL, as mentioned above. The physical VIRL testbed infrastructure consists of three identical VIRL hosts, each host being a Cisco UCS C240-M4 (2x Intel(R) Xeon(R) CPU E5-2699 v3 @ 2.30GHz, 18c, 512GB RAM) running Ubuntu 14.04.3 and the following VIRL software versions: | |

STD server version 0.10.24.7 | STD server version 0.10.24.7 | ||

| Line 90: | Line 87: | ||

Current VPP 16.06 tests have been executed on a single VM operating system and version only, as described in the following paragraphs. | Current VPP 16.06 tests have been executed on a single VM operating system and version only, as described in the following paragraphs. | ||

| − | In order to | + | In order to enable future testing with different Operating Systems, or with different versions of the same Operating System, and simultaneously allowing others to reproduce tests in the exact same environment, CSIT has established a process where a candidate Operating System (currently only Ubuntu 14.04.4 LTS) plus all required packages are installed, and the versions of all installed packages are recorded. A separate tool then creates, and will continue to create at any point in the future, a disk image with these packages and their exact versions. Identical sets of disk images are created in QEMU/QCOW2 format for use within VIRL, and in VirtualBox format for use in the CSIT Vagrant environment. |

In CSIT terminology, the VM operating system for both SUTs and TG that VPP 16.06 has been tested with, is the following: | In CSIT terminology, the VM operating system for both SUTs and TG that VPP 16.06 has been tested with, is the following: | ||

| Line 105: | Line 102: | ||

https://atlas.hashicorp.com/fdio-csit/boxes/ubuntu-14.04.4_2016-05-25_1.0 | https://atlas.hashicorp.com/fdio-csit/boxes/ubuntu-14.04.4_2016-05-25_1.0 | ||

| − | |||

In addition to this "main" VM image, tests which require VPP to communicate to a VM over a vhost-user interface, utilize a "nested" VM image. | In addition to this "main" VM image, tests which require VPP to communicate to a VM over a vhost-user interface, utilize a "nested" VM image. | ||

| Line 114: | Line 110: | ||

Functional tests utilize Scapy version 2.3.1 as a traffic generator. | Functional tests utilize Scapy version 2.3.1 as a traffic generator. | ||

| − | |||

==Performance tests environment== | ==Performance tests environment== | ||

| − | To execute performance tests, there are three identical testbeds | + | To execute performance tests, there are three identical testbeds; each testbed consists of two SUTs and one TG. |

| − | Hardware details (CPU, memory, NIC layout) are described | + | Hardware details (CPU, memory, NIC layout) are described in [[CSIT/CSIT_LF_testbed]]; in summary: |

| − | * All hosts are Cisco UCS C240-M4 ( | + | * All hosts are Cisco UCS C240-M4 (2x Intel(R) Xeon(R) CPU E5-2699 v3 @ 2.30GHz, 18c, 512GB RAM), |

* BIOS settings are default except for the following: | * BIOS settings are default except for the following: | ||

** Hyperthreading disabled, | ** Hyperthreading disabled, | ||

| Line 142: | Line 137: | ||

* SUT2 X520, PCI address 0000:0a:00.0 <-> TG X710, PCI address 0000:05:00.1 | * SUT2 X520, PCI address 0000:0a:00.0 <-> TG X710, PCI address 0000:05:00.1 | ||

| − | ===Config | + | ===Config: VPP (DUT)=== |

| − | + | '''NUMA Node Location of VPP Interfaces''' | |

pci_bus 0000:00: on NUMA node 0 | pci_bus 0000:00: on NUMA node 0 | ||

| − | + | '''NIC types''' | |

0a:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01) | 0a:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01) | ||

| Line 155: | Line 150: | ||

Subsystem: Intel Corporation Ethernet Server Adapter X520-2 | Subsystem: Intel Corporation Ethernet Server Adapter X520-2 | ||

| − | + | '''VPP Version''' | |

vpp-16.06_amd64 | vpp-16.06_amd64 | ||

| − | + | '''VPP Compile Parameters''' | |

VPP Compile Job: https://jenkins.fd.io/view/vpp/job/vpp-merge-master-ubuntu1404/ | VPP Compile Job: https://jenkins.fd.io/view/vpp/job/vpp-merge-master-ubuntu1404/ | ||

| − | + | '''VPP Build Script''' | |

VPP Compile Job: https://jenkins.fd.io/view/vpp/job/vpp-merge-master-ubuntu1404/ | VPP Compile Job: https://jenkins.fd.io/view/vpp/job/vpp-merge-master-ubuntu1404/ | ||

| − | + | '''VPP Install Parameters''' | |

$ dpkg -i --force-all | $ dpkg -i --force-all | ||

| − | + | '''VPP Startup Configuration''' | |

| − | VPP startup configuration | + | The VPP startup configuration varies per test case, with different settings for CPU cores, rx-queues and the no-multi-seg parameter. The startup config matches the tag applied to the test case: |

| − | Tagged by: '' | + | Tagged by: ''1_THREAD_NOHTT_RSS_1'' |

$ cat /etc/vpp/startup.conf | $ cat /etc/vpp/startup.conf | ||

| Line 195: | Line 190: | ||

dpdk { | dpdk { | ||

socket-mem 1024,1024 | socket-mem 1024,1024 | ||

| − | + | rss 1 | |

| − | + | ||

| − | + | ||

dev 0000:0a:00.1 | dev 0000:0a:00.1 | ||

dev 0000:0a:00.0 | dev 0000:0a:00.0 | ||

} | } | ||

| − | Tagged by: '' | + | Tagged by: ''2_THREAD_NOHTT_RSS_1'' |

$ cat /etc/vpp/startup.conf | $ cat /etc/vpp/startup.conf | ||

| Line 222: | Line 215: | ||

dpdk { | dpdk { | ||

socket-mem 1024,1024 | socket-mem 1024,1024 | ||

| − | + | rss 1 | |

| − | + | ||

| − | + | ||

dev 0000:0a:00.1 | dev 0000:0a:00.1 | ||

dev 0000:0a:00.0 | dev 0000:0a:00.0 | ||

} | } | ||

| − | Tagged by: '' | + | Tagged by: ''4_THREAD_NOHTT_RSS_2'' |

$ cat /etc/vpp/startup.conf | $ cat /etc/vpp/startup.conf | ||

| Line 249: | Line 240: | ||

dpdk { | dpdk { | ||

socket-mem 1024,1024 | socket-mem 1024,1024 | ||

| − | + | rss 2 | |

| − | + | ||

| − | + | ||

dev 0000:0a:00.1 | dev 0000:0a:00.1 | ||

dev 0000:0a:00.0 | dev 0000:0a:00.0 | ||

| Line 257: | Line 246: | ||
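The test case tags shown above (''1_THREAD_NOHTT_RSS_1'', ''2_THREAD_NOHTT_RSS_1'', ''4_THREAD_NOHTT_RSS_2'') encode the thread count, the hyperthreading setting and the RSS value that appears in the <code>dpdk</code> section. As an illustration only, a tag could be decoded as in the sketch below. The helper names are hypothetical, the <code>HTT</code> variant is an assumption (only <code>NOHTT</code> tags appear in this report), and only the <code>rss</code> line is rendered because the rest of the startup configuration is not shown here.

```python
import re
from typing import NamedTuple


class StartupProfile(NamedTuple):
    threads: int          # number of VPP worker threads named in the tag
    hyperthreading: bool  # False for NOHTT tags
    rss_queues: int       # value used on the "rss" line of the dpdk section


# Assumed tag grammar: <threads>_THREAD_<NOHTT|HTT>_RSS_<queues>
_TAG_RE = re.compile(r"^(\d+)_THREAD_(NOHTT|HTT)_RSS_(\d+)$")


def parse_tag(tag: str) -> StartupProfile:
    """Decode a test case tag into its startup-relevant settings."""
    match = _TAG_RE.match(tag)
    if match is None:
        raise ValueError("unrecognised tag: %r" % tag)
    threads, htt, rss = match.groups()
    return StartupProfile(int(threads), htt == "HTT", int(rss))


def dpdk_rss_line(profile: StartupProfile) -> str:
    """Render the rss line as it appears in the dpdk section above."""
    return "rss %d" % profile.rss_queues


if __name__ == "__main__":
    for tag in ("1_THREAD_NOHTT_RSS_1", "2_THREAD_NOHTT_RSS_1",
                "4_THREAD_NOHTT_RSS_2"):
        profile = parse_tag(tag)
        print(tag, profile, dpdk_rss_line(profile))
```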

| − | ===Config | + | ===Config: Traffic Generator - T-Rex=== |

| − | + | '''TG Version''' | |

T-rex v2.03 | T-rex v2.03 | ||

| − | + | '''DPDK version''' | |

DPDK v2.2.0 (a38e5ec15e3fe615b94f3cc5edca5974dab325ab - DPDK repository) | DPDK v2.2.0 (a38e5ec15e3fe615b94f3cc5edca5974dab325ab - DPDK repository) | ||

| − | + | '''TG Build Script used''' | |

https://gerrit.fd.io/r/gitweb?p=csit.git;a=blob_plain;f=resources/tools/t-rex/t-rex-installer.sh;hb=HEAD | https://gerrit.fd.io/r/gitweb?p=csit.git;a=blob_plain;f=resources/tools/t-rex/t-rex-installer.sh;hb=HEAD | ||

| − | + | '''TG Startup Configuration''' | |

$ cat /etc/trex_cfg.yaml | $ cat /etc/trex_cfg.yaml | ||

| Line 284: | Line 273: | ||

src_mac : [0x3c,0xfd,0xfe,0x9c,0xee,0xf5] | src_mac : [0x3c,0xfd,0xfe,0x9c,0xee,0xf5] | ||

| − | + | '''TG common API - pointer to driver''' | |

https://gerrit.fd.io/r/gitweb?p=csit.git;a=blob_plain;f=resources/tools/t-rex/t-rex-stateless.py;hb=HEAD | https://gerrit.fd.io/r/gitweb?p=csit.git;a=blob_plain;f=resources/tools/t-rex/t-rex-stateless.py;hb=HEAD | ||

| − | |||

| − | |||

==Functional tests results== | ==Functional tests results== | ||

| − | + | Functional test results in this report have been generated from the Robot Framework output of the [https://jenkins.fd.io/view/csit/job/csit-vpp-functional-1606-virl/ csit-vpp-functional-1606-virl] job ([https://jenkins.fd.io/view/csit/job/csit-vpp-functional-1606-virl/lastSuccessfulBuild/artifact/log.html log.html], [https://jenkins.fd.io/view/csit/job/csit-vpp-functional-1606-virl/lastSuccessfulBuild/artifact/output.xml output.xml], [https://jenkins.fd.io/view/csit/job/csit-vpp-functional-1606-virl/lastSuccessfulBuild/artifact/report.html report.html]) and post-processed into LF MediaWiki format. | |

| − | ===Bridge Domain=== | + | ===L2 Bridge-Domain=== |

| − | ====Bridge Domain Untagged==== | + | ====L2 Bridge-Domain Untagged==== |

| − | '''L2 bridge-domain test cases''' - verify VPP DUT L2 MAC-based switching: | + | '''L2 bridge-domain test cases''' - verify VPP DUT L2 MAC-based bridge-domain based switching: |

| − | * '''[Top] | + | * '''[Top]''' Network Topologies: TG=DUT1 2-node topology with two links between nodes; TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes; TG=DUT1=DUT2=TG 3-node circular topology with double parallel links and TG=DUT=VM 3-node topology with VM and double parallel links. |

| − | * '''[Enc] | + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-ICMPv4 for L2 switching of IPv4; Eth-IPv6-ICMPv6 for L2 switching of IPv6. Both apply to all links. |

| − | * '''[Cfg] | + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with L2 bridge-domain (L2BD) switching combined with static MACs; MAC learning enabled and Split Horizon Groups (SHG) depending on test case. |

| − | * '''[Ver] | + | * '''[Ver]''' TG verification: Test ICMPv4 (or ICMPv6) Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for correctness and their IPv4 (IPv6) src-addr, dst-addr and MAC addresses. |

| − | * '''[Ref] Applicable standard specifications: | + | * '''[Ref]''' Applicable standard specifications: |

{| class="wikitable" | {| class="wikitable" | ||

| Line 350: | Line 337: | ||

|PASS | |PASS | ||

|} | |} | ||

| − | === | + | |

| − | ==== | + | ===L2 Cross-Connect=== |

| + | ====L2 Cross-Connect Untagged==== | ||

| + | '''L2 cross-connect test cases''' - verify VPP DUT point-to-point L2 cross-connect switching: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes; TG=DUT1=DUT2=TG 3-node circular topology with double parallel links and TG=DUT=VM 3-node topology with VM and double parallel links. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-ICMPv4 for L2 switching of IPv4; Eth-IPv6-ICMPv6 for L2 switching of IPv6. Both apply to all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with L2 cross-connect (L2XC) switching. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 (or ICMPv6) Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for correctness and their IPv4 (IPv6) src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT1 and DUT2 with L2XC switch ICMPv4 between two TG links | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 and DUT2 configure L2 cross-connect (L2XC), each with one interface to TG and one Ethernet interface towards the other DUT. [Ver] Make TG send ICMPv4 Echo Req in both directions between two of its interfaces to be switched by DUT1 and DUT2; verify all packets are received. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT1 and DUT2 with L2XC switch ICMPv6 between two TG links | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 and DUT2 configure L2 cross-connect (L2XC), each with one interface to TG and one Ethernet interface towards the other DUT. [Ver] Make TG send ICMPv6 Echo Req in both directions between two of its interfaces to be switched by DUT1 and DUT2; verify all packets are received. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT with two L2XCs switches ICMPv4 between TG and local VM links | ||

| + | |[Top] TG=DUT=VM. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT configure two L2 cross-connects (L2XC), each with one untagged interface to TG and untagged i/f to local VM over vhost-user. [Ver] Make TG send ICMPv4 Echo Reqs in both directions between two of its i/fs to be switched by DUT to and from VM; verify all packets are received. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT with two L2XCs switches ICMPv6 between TG and local VM links | ||

| + | |[Top] TG=DUT=VM. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT configure two L2 cross-connects (L2XC), each with one untagged i/f to TG and untagged i/f to local VM over vhost-user. [Ver] Make TG send ICMPv6 Echo Reqs in both directions between two of its i/fs to be switched by DUT to and from VM; verify all packets are received. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | ===Tagging=== | ||

| + | ====L2 Cross-Connect with QinQ==== | ||

| + | '''L2 cross-connect with QinQ test cases''' - verify VPP DUT point-to-point L2 cross-connect switching with QinQ 802.1ad tagged interfaces: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet encapsulations: Eth-dot1ad-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn for L2 switching of IPv4. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with L2 cross-connect (L2XC) switching with 802.1ad QinQ VLAN tag push and pop. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for correctness and their IPv4 src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: 802dot1ad. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT1 and DUT2 with L2XC and two VLAN push-pop switch ICMPv4 between two TG links | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-dot1ad-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure L2 cross-connect (L2XC), each with one interface to TG and one Ethernet interface towards the other DUT; each DUT pushes two VLAN tags on packets received from local TG, and pops two VLAN tags on packets transmitted to local TG. [Ver] Make TG send ICMPv4 Echo Req in both directions between two of its interfaces to be switched by DUT1 and DUT2; verify all packets are received. [Ref] 802dot1ad. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||
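The QinQ test case above relies on each DUT pushing two VLAN tags on ingress from the TG and popping them on egress towards the TG. The sketch below illustrates that push-pop operation on a raw Ethernet frame. It is not taken from VPP or CSIT: the function names and the VLAN IDs are hypothetical, and the tag layout assumes the usual 802.1ad arrangement of an outer S-tag (TPID 0x88A8) over an inner C-tag (TPID 0x8100), with priority bits left at zero.

```python
import struct
from typing import Tuple

TPID_8021AD = 0x88A8  # outer service tag (S-tag)
TPID_8021Q = 0x8100   # inner customer tag (C-tag)


def push_vlan(frame: bytes, tpid: int, vid: int) -> bytes:
    """Insert one 4-byte VLAN tag after the destination and source MACs."""
    if not 0 <= vid <= 0x0FFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    tag = struct.pack("!HH", tpid, vid)  # PCP and DEI bits are left at zero
    return frame[:12] + tag + frame[12:]


def pop_vlan(frame: bytes) -> Tuple[int, bytes]:
    """Remove the outermost VLAN tag; return (VLAN ID, untagged frame)."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid not in (TPID_8021AD, TPID_8021Q):
        raise ValueError("frame carries no VLAN tag")
    return tci & 0x0FFF, frame[:12] + frame[16:]


def push_qinq(frame: bytes, outer_vid: int, inner_vid: int) -> bytes:
    """Push the inner tag first, then the outer tag in front of it."""
    return push_vlan(push_vlan(frame, TPID_8021Q, inner_vid),
                     TPID_8021AD, outer_vid)


def pop_qinq(frame: bytes) -> Tuple[int, int, bytes]:
    """Pop both tags; return (outer VLAN ID, inner VLAN ID, frame)."""
    outer_vid, rest = pop_vlan(frame)
    inner_vid, rest = pop_vlan(rest)
    return outer_vid, inner_vid, rest


# Illustrative frame: zeroed MAC addresses, IPv4 EtherType, dummy payload.
EXAMPLE_FRAME = bytes(12) + b"\x08\x00" + b"payload"

if __name__ == "__main__":
    tagged = push_qinq(EXAMPLE_FRAME, outer_vid=100, inner_vid=200)
    print(tagged.hex())
    print(pop_qinq(tagged))
```

Because push and pop are exact inverses, the TG sees untagged Eth-IPv4-ICMPv4 on both of its links while the DUT1-DUT2 link carries the double-tagged frame.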

| + | |||

| + | ===VXLAN=== | ||

| + | ====VXLAN Bridge-Domain Untagged==== | ||

| + | '''RFC7348 VXLAN: Bridge-domain with VXLAN test cases''' - verify VPP DUT L2 MAC-based bridge-domain based switching integration with VXLAN tunnels: | ||

| + | * '''[Top]''' Network topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes; TG=DUT1=DUT2=TG 3-node circular topology with double parallel links. | ||

| + | * '''[Enc]''' Packet encapsulations: Eth-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn for L2 switching of IPv4; Eth-IPv6-VXLAN-Eth-IPv6-ICMPv6 on DUT1-DUT2, Eth-IPv6-ICMPv6 on TG-DUTn for L2 switching of IPv6. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with L2 bridge-domain (L2BD) switching combined with static MACs, MAC learning enabled and Split Horizon Groups (SHG) depending on test case; VXLAN tunnels are configured between L2BDs on DUT1 and DUT2. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 (or ICMPv6) Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for correctness and their IPv4 (IPv6) src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC7348. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT1 and DUT2 with L2BD and VXLANoIPv4 tunnels switch ICMPv4 between TG links | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2; Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure two i/fs into L2BD with MAC learning. [Ver] Make TG verify ICMPv4 Echo Req pkts are switched thru DUT1 and DUT2 in both directions and are correct on receive. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT1 and DUT2 with L2BD and VXLANoIPv4 tunnels in SHG switch ICMPv4 between TG links | ||

| + | |[Top] TG=DUT1=DUT2=TG. [Enc] Eth-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2; Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 configure L2 bridge-domain (MAC learning enabled) with two untagged interfaces to TG and two VXLAN interfaces towards DUT2, and put both VXLAN interfaces into the same Split-Horizon-Group (SHG). On DUT2 configure two L2 bridge-domains (MAC learning enabled), each with one untagged interface to TG and one VXLAN interface towards DUT1. [Ver] Make TG send ICMPv4 Echo Reqs between all four of its interfaces to be switched by DUT1 and DUT2; verify packets are not switched between TG interfaces connected to DUT2 that are isolated by SHG on DUT1. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT1 and DUT2 with L2BD and VXLANoIPv4 tunnels in different SHGs switch ICMPv4 between TG links | ||

| + | |[Top] TG=DUT1=DUT2=TG. [Enc] Eth-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2; Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 configure L2 bridge-domain (MAC learning enabled) with two untagged interfaces to TG and two VXLAN interfaces towards DUT2, and put the two VXLAN interfaces into different Split-Horizon-Groups (SHGs). On DUT2 configure two L2 bridge-domains (MAC learning enabled), each with one untagged interface to TG and one VXLAN interface towards DUT1. [Ver] Make TG send ICMPv4 Echo Req between all four of its interfaces to be switched by DUT1 and DUT2; verify packets are switched between all TG interfaces. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT1 and DUT2 with L2BD and VXLANoIPv6 tunnels switch ICMPv6 between TG links | ||

| + | |[Top] TG=DUT1=DUT2=TG. [Enc] Eth-IPv6-VXLAN-Eth-IPv6-ICMPv6 on DUT1-DUT2, Eth-IPv6-ICMPv6 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure L2 bridge-domain (MAC learning enabled), each with one interface to TG and one VXLAN tunnel interface towards the other DUT. [Ver] Make TG send ICMPv6 Echo Req between two of its interfaces to be switched by DUT1 and DUT2; verify all packets are received. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC05: DUT1 and DUT2 with L2BD and VXLANoIPv6 tunnels in SHG switch ICMPv6 between TG links | ||

| + | |[Top] TG=DUT1=DUT2=TG. [Enc] Eth-IPv6-VXLAN-Eth-IPv6-ICMPv6 on DUT1-DUT2; Eth-IPv6-ICMPv6 on TG-DUTn. [Cfg] On DUT1 configure L2 bridge-domain (MAC learning enabled) with two untagged interfaces to TG and two VXLAN interfaces towards DUT2, and put both VXLAN interfaces into the same Split-Horizon-Group (SHG). On DUT2 configure two L2 bridge-domains (MAC learning enabled), each with one untagged interface to TG and one VXLAN interface towards DUT1. [Ver] Make TG send ICMPv6 Echo Reqs between all four of its interfaces to be switched by DUT1 and DUT2; verify packets are not switched between TG interfaces connected to DUT2 that are isolated by SHG on DUT1. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC06: DUT1 and DUT2 with L2BD and VXLANoIPv6 tunnels in different SHGs switch ICMPv6 between TG links | ||

| + | |[Top] TG=DUT1=DUT2=TG. [Enc] Eth-IPv6-VXLAN-Eth-IPv6-ICMPv6 on DUT1-DUT2; Eth-IPv6-ICMPv6 on TG-DUTn. [Cfg] On DUT1 configure L2 bridge-domain (MAC learning enabled) with two untagged interfaces to TG and two VXLAN interfaces towards DUT2, and put the two VXLAN interfaces into different Split-Horizon-Groups (SHGs). On DUT2 configure two L2 bridge-domains (MAC learning enabled), each with one untagged interface to TG and one VXLAN interface towards DUT1. [Ver] Make TG send ICMPv6 Echo Req between all four of its interfaces to be switched by DUT1 and DUT2; verify packets are switched between all TG interfaces. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||
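The encapsulations listed for these test cases (for example Eth-IPv4-VXLAN-Eth-IPv4-ICMPv4) place an 8-byte VXLAN header between the outer UDP header and the inner Ethernet frame. The sketch below shows only that header as laid out in RFC7348. The function names and the VNI value are hypothetical, and the outer Ethernet, IP and UDP headers are deliberately left out.

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header (UDP payload only)."""
    return vxlan_header(vni) + inner_frame


def vxlan_decap(udp_payload: bytes) -> Tuple[int, bytes]:
    """Return (VNI, inner frame) from a VXLAN UDP payload."""
    if len(udp_payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8, udp_payload[8:]


if __name__ == "__main__":
    payload = vxlan_encap(b"inner-ethernet-frame", vni=23)
    print(payload[:8].hex())
    print(vxlan_decap(payload))
```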

| + | |||

| + | ====VXLAN L2 Bridge-Domain with dot1q==== | ||

| + | '''RFC7348 VXLAN: Bridge-domain with VXLAN over VLAN test cases''' - verify VPP DUT L2 MAC-based bridge-domain based switching integration with VXLAN tunnels over 802dot1q VLANs: | ||

| + | * '''[Top]''' Network topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet encapsulations: Eth-dot1q-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2, Eth-dot1q-IPv4-ICMPv4 on TG-DUTn for L2 switching of IPv4. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with L2 bridge-domain (L2BD) switching combined with static MACs, MAC learning enabled and Split Horizon Groups (SHG) depending on test case; VXLAN tunnels are configured between L2BDs on DUT1 and DUT2. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for correctness and their IPv4 src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC7348. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT1 and DUT2 with L2BD and VXLANoIPv4oVLAN tunnels switch ICMPv4 between TG links | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-dot1q-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2; Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure L2 bridge-domain (MAC learning enabled), each with one interface to TG and one VXLAN tunnel interface towards the other DUT over VLAN sub-interface. [Ver] Make TG send ICMPv4 Echo Req between two of its interfaces, verify all packets are received. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | ====VXLAN L2 Cross-Connect Untagged==== | ||

| + | '''RFC7348 VXLAN: L2 cross-connect with VXLAN test cases''' - verify VPP DUT point-to-point L2 cross-connect switching integration with VXLAN tunnels: | ||

| + | * '''[Top]''' Network topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet encapsulations: Eth-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn for L2 switching of IPv4. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with L2 cross-connect (L2XC) switching; VXLAN tunnels are configured between L2XCs on DUT1 and DUT2. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for correctness and their IPv4 src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC7348. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT1 and DUT2 with L2XC and VXLANoIPv4 tunnels switch ICMPv4 between TG links | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-VXLAN-Eth-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure L2 cross-connect (L2XC), each with one interface to TG and one VXLAN tunnel interface towards the other DUT. [Ver] Make TG send ICMPv4 Echo Req between two of its interfaces; verify all packets are received. [Ref] RFC7348. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | ===IPv4 Routing=== | ||

| + | ====IPv4 ARP Untagged==== | ||

| + | '''IPv4 ARP test cases''' - verify VPP DUT ARP functionality: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4 and Eth-ARP on all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv4 routing and static routes. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies ARP packets for correctness. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC826 ARP. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT sends ARP Request for unresolved locally connected IPv4 address | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4 and Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and routes in the main routing domain. [Ver] Make TG send test packet destined to IPv4 address of its other interface connected to DUT2; verify DUT2 sends ARP Request for locally connected TG IPv4 address. [Ref] RFC826. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | ====IPv4 Routing Untagged==== | ||

| + | '''IPv4 routing test cases''' - verify baseline IPv4 routing: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-ICMPv4 and Eth-ARP on all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv4 routing and static routes. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for their IPv4 src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC791 IPv4, RFC826 ARP, RFC792 ICMPv4. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT replies to ICMPv4 Echo Req to its ingress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4 and Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and static routes. [Ver] Make TG send ICMPv4 Echo Req to DUT1 ingress interface; verify ICMPv4 Echo Reply is correct. [Ref] RFC791, RFC826, RFC792. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT routes IPv4 to its egress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4 and Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and static routes. [Ver] Make TG send ICMPv4 Echo Req towards DUT1 egress interface connected to DUT2; verify ICMPv4 Echo Reply is correct. [Ref] RFC791, RFC826, RFC792. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT1 routes IPv4 to DUT2 ingress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4 and Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and static routes. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify ICMPv4 Echo Reply is correct. [Ref] RFC791, RFC826, RFC792. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT1 routes IPv4 to DUT2 egress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4 and Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and static routes. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 egress interface connected to TG; verify ICMPv4 Echo Reply is correct. [Ref] RFC791, RFC826, RFC792. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC05: DUT1 and DUT2 route IPv4 between TG interfaces | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4 and Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and static routes. [Ver] Make TG send ICMPv4 Echo Req between its interfaces across DUT1 and DUT2; verify ICMPv4 Echo Reply is correct. [Ref] RFC791, RFC826, RFC792. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC06: DUT replies to ICMPv4 Echo Reqs with size 64B-to-1500B-incr-1B | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4 and Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and static routes. [Ver] Make TG send ICMPv4 Echo Reqs to DUT1 ingress interface, incrementing frame size from 64B to 1500B in steps of 1 byte; verify ICMPv4 Echo Replies are correct. [Ref] RFC791, RFC826, RFC792. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC07: DUT replies to ARP request | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-ARP. [Cfg] On DUT1 configure interface IPv4 addresses and static routes. [Ver] Make TG send ARP Request to DUT; verify ARP Reply is correct. [Ref] RFC826. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||
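The Echo Request and Echo Reply checks in the table above depend on the ICMPv4 message layout from RFC792 and on the 16-bit ones' complement Internet checksum. The following is a minimal Python sketch of how a reply could be checked against its request. The helper names are hypothetical, and the 42-byte overhead in the size sweep assumes frame sizes are counted without the Ethernet FCS; this is not the CSIT traffic script.

```python
import struct

ICMP_ECHO_REQUEST = 8
ICMP_ECHO_REPLY = 0


def internet_checksum(data: bytes) -> int:
    """16-bit ones' complement checksum over data (odd length is zero padded)."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo(msg_type: int, ident: int, seq: int, payload: bytes) -> bytes:
    """Build an ICMPv4 echo message with a valid checksum."""
    header = struct.pack("!BBHHH", msg_type, 0, 0, ident, seq)
    csum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", msg_type, 0, csum, ident, seq) + payload


def reply_matches(request: bytes, reply: bytes) -> bool:
    """Check type, code, checksum, identifier, sequence number and payload."""
    _, _, _, q_id, q_seq = struct.unpack("!BBHHH", request[:8])
    r_type, r_code, _, r_id, r_seq = struct.unpack("!BBHHH", reply[:8])
    return (
        r_type == ICMP_ECHO_REPLY
        and r_code == 0
        and internet_checksum(reply) == 0  # a valid message sums to zero
        and (r_id, r_seq) == (q_id, q_seq)
        and reply[8:] == request[8:]
    )


def sweep_payload_lengths(first: int = 64, last: int = 1500, overhead: int = 42):
    """ICMP payload length per frame size; 42 = Ethernet 14 + IPv4 20 + ICMP 8.

    Assumption: frame size excludes the 4-byte Ethernet FCS.
    """
    return [size - overhead for size in range(first, last + 1)]
```

With these assumptions the 64B-to-1500B sweep in TC06 covers 1437 frame sizes, one echo exchange per size.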

| + | |||

| + | ====IPv4 Routing ingress ACL Untagged==== | ||

| + | '''IPv4 routing with ingress ACL test cases''' - verify VPP DUT ingress Access Control Lists filtering with IPv4 routing: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-ICMPv4 and Eth-ARP on all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv4 routing and static routes. DUT1 is configured with iACL on link to TG, iACL classification and permit/deny action are configured on a per test case basis. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 Echo Request packets are sent in one direction by TG on link to DUT1 and received on TG link to DUT2. On receive TG verifies if packets are dropped, or if received verifies packet IPv4 src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT with iACL IPv4 src-addr drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add source IPv4 address to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT with iACL IPv4 dst-addr drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add destination IPv4 address to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT with iACL IPv4 src-addr and dst-addr drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add source and destination IPv4 addresses to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT with iACL IPv4 protocol set to TCP drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add protocol mask and TCP protocol (0x06) to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC05: DUT with iACL IPv4 protocol set to UDP drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add protocol mask and UDP protocol (0x11) to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC06: DUT with iACL IPv4 TCP src-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add TCP source ports to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC07: DUT with iACL IPv4 TCP dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add TCP destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC08: DUT with iACL IPv4 TCP src-ports and dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add TCP source and destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC09: DUT with iACL IPv4 UDP src-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add UDP source ports to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC10: DUT with iACL IPv4 UDP dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add UDP destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC11: DUT with iACL IPv4 UDP src-ports and dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add UDP source and destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC12: DUT with iACL MAC src-addr drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-ICMPv4. [Cfg] On DUT1 configure interface IPv4 addresses and static routes; on DUT1 add source MAC address to classify table with 'deny'. [Ver] Make TG send ICMPv4 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||
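The iACL test cases above all follow one pattern: a classify table selects header fields with a mask, and a session with action 'deny' drops packets whose masked fields equal the session's match value. The following is a minimal Python sketch of that mask-and-match idea for the IPv4 protocol field. It is an illustration only: the helper names are hypothetical, the permit-on-miss default is an assumption, and none of this is the VPP classifier API.

```python
import ipaddress
import struct

IPV4_HEADER_LEN = 20
IPV4_PROTO_OFFSET = 9  # byte offset of the Protocol field in the IPv4 header
PROTO_TCP = 0x06
PROTO_UDP = 0x11


def ipv4_header(src: str, dst: str, proto: int) -> bytes:
    """Build a bare 20-byte IPv4 header (checksum left at zero for brevity)."""
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, IPV4_HEADER_LEN, 0, 0, 64, proto, 0,
        ipaddress.IPv4Address(src).packed,
        ipaddress.IPv4Address(dst).packed,
    )


def field_mask(offset: int, width: int) -> bytes:
    """Mask that selects `width` bytes at `offset` and ignores everything else."""
    mask = bytearray(IPV4_HEADER_LEN)
    mask[offset:offset + width] = b"\xff" * width
    return bytes(mask)


def session_hits(header: bytes, mask: bytes, match: bytes) -> bool:
    """True when the header equals the match value on every masked bit."""
    return all((h & m) == (v & m) for h, m, v in zip(header, mask, match))


def acl_action(header: bytes, mask: bytes, deny_sessions) -> str:
    """Return 'deny' on a session hit; assume 'permit' on a table miss."""
    if any(session_hits(header, mask, match) for match in deny_sessions):
        return "deny"
    return "permit"
```

For example, a table masking only the protocol byte with a single session matching `PROTO_TCP` would deny TCP packets and let UDP packets through.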

| + | |||

| + | ===DHCPv4=== | ||

| + | ====DHCPv4 Client==== | ||

| + | '''DHCPv4 client test cases''' - verify VPP DUT DHCPv4 client functionality: | ||

| + | * '''[Top]''' Network Topologies: TG=DUT1 2-node topology with two links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-DHCPv4 on all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 is configured with DHCPv4 client. | ||

| + | * '''[Ver]''' TG verification: Test DHCPv4 packets are sent by TG on link to DUT1. TG verifies any received DHCPv4 packets for their correctness. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC2132. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT sends a DHCPv4 DISCOVER | ||

| + | |[Top] TG=DUT1 [Enc] Eth-IPv4-DHCPv4 [Cfg] Configure DHCP client on DUT1 interface to TG without hostname. [Ver] Make TG check if DHCP DISCOVER message contains all required fields with expected values. [Ref] RFC2132. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT sends a DHCP DISCOVER with hostname | ||

| + | |[Top] TG=DUT1 [Enc] Eth-IPv4-DHCPv4 [Cfg] Configure DHCP client on DUT1 interface to TG with hostname. [Ver] Make TG check if DHCP DISCOVER message contains all required fields with expected values. [Ref] RFC2132. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT sends DHCP REQUEST after OFFER | ||

| + | |[Top] TG=DUT1 [Enc] Eth-IPv4-DHCPv4 [Cfg] Configure DHCP client on DUT1 interface to TG with hostname. [Ver] Make TG check if DHCP REQUEST message contains all required fields with expected values. [Ref] RFC2132. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT honors DHCPv4 lease time | ||

| + | |[Top] TG=DUT1 [Enc] Eth-IPv4-DHCPv4 [Cfg] TG sends IP configuration over DHCPv4 to DHCP client on DUT1 interface. [Ver] Make TG check if ICMPv4 Echo Request is replied to, and that DUT stops replying after lease has expired. [Ref] RFC2132. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||
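The DISCOVER and REQUEST checks above amount to walking the DHCP options field defined in RFC2132 and comparing selected options with expected values. The following is a minimal Python sketch of such an option walk. The function name is hypothetical, and it parses only the options area (magic cookie onwards), not a full BOOTP message; it is not the CSIT traffic script.

```python
DHCP_MAGIC_COOKIE = bytes([99, 130, 83, 99])
OPT_PAD = 0
OPT_HOSTNAME = 12
OPT_MESSAGE_TYPE = 53
OPT_END = 255
DHCPDISCOVER = 1
DHCPREQUEST = 3


def parse_dhcp_options(data: bytes) -> dict:
    """Return {option code: value bytes} from a DHCP options field."""
    if data[:4] != DHCP_MAGIC_COOKIE:
        raise ValueError("missing DHCP magic cookie")
    options = {}
    i = 4
    while i < len(data):
        code = data[i]
        if code == OPT_END:
            break
        if code == OPT_PAD:  # pad is a single byte with no length field
            i += 1
            continue
        length = data[i + 1]
        options[code] = data[i + 2:i + 2 + length]
        i += 2 + length
    return options
```

A check in the spirit of TC02 would then assert that option 53 carries the DISCOVER message type and that option 12 carries the configured hostname.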

| + | |||

| + | ===IPv6 Routing=== | ||

| + | ====IPv6 Router Advertisement==== | ||

| + | '''IPv6 Router Advertisement test cases''' - verify VPP DUT IPv6 Router Advertisement functionality: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv6-RA on TG-DUT1 link. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv6 routing and static routes. | ||

| + | * '''[Ver]''' TG verification: TG verifies periodically received RA packets. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC4861 Neighbor Discovery. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT transmits RA on IPv6 enabled interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-RA. [Cfg] On DUT1 configure IPv6 interface on the link to TG. [Ver] Make TG wait for IPv6 Router Advertisement packet to be sent out by DUT1; verify the received RA packet is correct. [Ref] RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | ====IPv6 Routing Untagged==== | ||

| + | '''IPv6 routing test cases''' - verify baseline IPv6 routing: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv6-ICMPv6 and Eth-IPv6-NS/NA on all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv6 routing and static routes. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv6 Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for their IPv6 src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC2460 IPv6, RFC4443 ICMPv6, RFC4861 Neighbor Discovery. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT replies to ICMPv6 Echo Req to its ingress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6 and Eth-IPv6-NS/NA. [Cfg] On DUT1 configure interface IPv6 addresses and static routes. [Ver] Make TG send ICMPv6 Echo Req to DUT1 ingress interface; verify ICMPv6 Echo Reply is correct. [Ref] RFC2460, RFC4443, RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT routes IPv6 to its egress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6 and Eth-IPv6-NS/NA. [Cfg] On DUT1 configure interface IPv6 addresses and static routes. [Ver] Make TG send ICMPv6 Echo Req towards DUT1 egress interface connected to DUT2; verify ICMPv6 Echo Reply is correct. [Ref] RFC2460, RFC4443, RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT1 routes IPv6 to DUT2 ingress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6 and Eth-IPv6-NS/NA. [Cfg] On DUT1 configure interface IPv6 addresses and static routes. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify ICMPv6 Echo Reply is correct. [Ref] RFC2460, RFC4443, RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT1 routes IPv6 to DUT2 egress interface | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6 and Eth-IPv6-NS/NA. [Cfg] On DUT1 configure interface IPv6 addresses and static routes. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 egress interface connected to TG; verify ICMPv6 Echo Reply is correct. [Ref] RFC2460, RFC4443, RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC05: DUT1 and DUT2 route IPv6 between TG interfaces | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6 and Eth-IPv6-NS/NA. [Cfg] On DUT1 configure interface IPv6 addresses and static routes. [Ver] Make TG send ICMPv6 Echo Req between its interfaces across DUT1 and DUT2; verify ICMPv6 Echo Reply is correct. [Ref] RFC2460, RFC4443, RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC06: DUT replies to ICMPv6 Echo Reqs with size 64B-to-1500B-incr-1B | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6 and Eth-IPv6-NS/NA. [Cfg] On DUT1 configure interface IPv6 addresses and static routes. [Ver] Make TG send ICMPv6 Echo Reqs to DUT1 ingress interface, incrementing frame size from 64B to 1500B in steps of 1 byte; verify ICMPv6 Echo Replies are correct. [Ref] RFC2460, RFC4443, RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC07: DUT replies to IPv6 Neighbor Solicitation | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-NS/NA. [Cfg] On DUT1 configure interface IPv6 addresses and static routes. [Ver] Make TG send Neighbor Solicitation message on the link to DUT1; verify DUT1 Neighbor Advertisement reply is correct. [Ref] RFC4861. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | ====IPv6 Routing with ingress ACL Untagged==== | ||

| + | '''IPv6 routing with ingress ACL test cases''' - verify VPP DUT ingress Access Control Lists filtering with IPv6 routing: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv6-ICMPv6 and Eth-IPv6-NS/NA on all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv6 routing and static routes. DUT1 is configured with iACL on link to TG, iACL classification and permit/deny action are configured on a per test case basis. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv6 Echo Request packets are sent in one direction by TG on link to DUT1 and received on TG link to DUT2. On receive TG verifies if packets are dropped, or if received verifies packet IPv6 src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT with iACL IPv6 src-addr drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add source IPv6 address to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT with iACL IPv6 dst-addr drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add destination IPv6 address to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT with iACL IPv6 src-addr and dst-addr drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add source and destination IPv6 addresses to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT with iACL IPv6 protocol set to TCP drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add protocol mask and TCP protocol (0x06) to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC05: DUT with iACL IPv6 protocol set to UDP drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add protocol mask and UDP protocol (0x11) to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC06: DUT with iACL IPv6 TCP src-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add TCP source ports to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC07: DUT with iACL IPv6 TCP dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add TCP destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC08: DUT with iACL IPv6 TCP src-ports and dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add TCP source and destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC09: DUT with iACL IPv6 UDP src-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add UDP source ports to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC10: DUT with iACL IPv6 UDP dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add UDP destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC11: DUT with iACL IPv6 UDP src-ports and dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add UDP source and destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC12: DUT with iACL MAC src-addr and iACL IPv6 UDP src-ports and dst-ports drops matching pkts | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and static routes; on DUT1 add source MAC address to classify (L2) table and add UDP source and destination ports to classify table with 'deny'. [Ver] Make TG send ICMPv6 Echo Req towards DUT2 ingress interface connected to DUT1; verify matching packets are dropped. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | ===COP Address Security=== | ||

| + | ====COP Whitelist Blacklist==== | ||

'''COP Security IPv4 Blacklist and Whitelist Tests''' - verify VPP DUT security IPv4 Blacklist and Whitelist filtering based on IPv4 source addresses: | '''COP Security IPv4 Blacklist and Whitelist Tests''' - verify VPP DUT security IPv4 Blacklist and Whitelist filtering based on IPv4 source addresses: | ||

| − | * '''[Top] | + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. |

| − | * '''[Enc] | + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-ICMPv4 on all links. |

| − | * '''[Cfg] | + | * '''[Cfg]''' DUT configuration: DUT1 is configured with IPv4 routing and static routes. COP security white-lists are applied on DUT1 ingress interface from TG. DUT2 is configured with L2XC. |

| − | * '''[Ver] | + | * '''[Ver]''' TG verification: Test ICMPv4 Echo Request packets are sent in one direction by TG on link to DUT1; on receive TG verifies packets for correctness and drops as applicable. |

| − | * '''[Ref] Applicable standard specifications: | + | * '''[Ref]''' Applicable standard specifications: |

| + | |||

{| class="wikitable" | {| class="wikitable" | ||

!Name!!Documentation!!Message!!Status | !Name!!Documentation!!Message!!Status | ||

| Line 371: | Line 795: | ||

|PASS | |PASS | ||

|} | |} | ||

| + | |||

| + | ====COP Whitelist Blacklist IPv6==== | ||

| + | '''COP Security IPv6 Blacklist and Whitelist Tests''' - verify VPP DUT security IPv6 Blacklist and Whitelist filtering based on IPv6 source addresses: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv6-ICMPv6 on all links. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 is configured with IPv6 routing and static routes. COP security white-lists are applied on DUT1 ingress interface from TG. DUT2 is configured with L2XC. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv6 Echo Request packets are sent in one direction by TG on link to DUT1; on receive TG verifies packets for correctness and drops as applicable. | ||

| + | * '''[Ref]''' Applicable standard specifications: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT permits IPv6 pkts with COP whitelist set with IPv6 src-addr | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and routes in the main routing domain, add COP whitelist on interface to TG with IPv6 src-addr matching packets generated by TG; on DUT2 configure L2 xconnect. [Ver] Make TG send ICMPv6 Echo Req on its interface to DUT1; verify received ICMPv6 Echo Req pkts are correct. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT drops IPv6 pkts with COP blacklist set with IPv6 src-addr | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-ICMPv6. [Cfg] On DUT1 configure interface IPv6 addresses and routes in the main routing domain, add COP blacklist on interface to TG with IPv6 src-addr matching packets generated by TG; on DUT2 configure L2 xconnect. [Ver] Make TG send ICMPv6 Echo Req on its interface to DUT1; verify no ICMPv6 Echo Req pkts are received. [Ref] | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||
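The COP whitelist and blacklist test cases above reduce to a permit-or-drop decision based on the packet source address. The following is a minimal Python sketch of that decision for both IPv4 and IPv6. The function name is hypothetical, and the precedence used here (blacklist checked first, a non-empty whitelist dropping everything it does not list) is an assumption for illustration, not a description of how VPP implements COP.

```python
import ipaddress


def cop_permits(src_addr: str, whitelist=(), blacklist=()) -> bool:
    """Decide whether a packet with this source address is forwarded.

    Assumptions: a blacklist hit always drops; when a whitelist is
    configured, only listed sources pass; with no lists, traffic passes.
    """
    addr = ipaddress.ip_address(src_addr)
    if any(addr in ipaddress.ip_network(net) for net in blacklist):
        return False
    if whitelist:
        return any(addr in ipaddress.ip_network(net) for net in whitelist)
    return True
```

In terms of the tables above, the whitelist cases expect the TG to receive its Echo Requests back when the source matches, and the blacklist cases expect no packets to arrive.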

| + | |||

| + | ===GRE Tunnel=== | ||

| + | ====GREoIPv4 Encapsulation==== | ||

| + | '''GREoIPv4 test cases''' - verify VPP DUT GREoIPv4 tunnel encapsulation and routing through the tunnel: | ||

| + | * '''[Top]''' Network Topologies: TG=DUT1 2-node topology with two links between nodes; TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-GRE-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn for routing over GRE tunnel; Eth-IPv4-ICMPv4 on TG_if1-DUT, Eth-IPv4-GRE-IPv4-ICMPv4 on TG_if2_DUT for GREoIPv4 encapsulation and decapsulation verification. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv4 routing and static routes. GREoIPv4 tunnel is configured between DUT1 and DUT2. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 (or ICMPv6) Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; GREoIPv4 encapsulation and decapsulation are verified separately by TG; on receive TG verifies packets for correctness and their IPv4 (IPv6) src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC2784. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT1 and DUT2 route over GREoIPv4 tunnel between two TG links | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-GRE-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure GREoIPv4 tunnel with IPv4 routes towards each other. [Ver] Make TG send ICMPv4 Echo Req between its interfaces across both DUTs and GRE tunnel between them; verify IPv4 headers on received packets are correct. [Ref] RFC2784. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT encapsulates IPv4 into GREoIPv4 tunnel - GRE header verification | ||

| + | |[Top] TG=DUT1. [Enc] Eth-IPv4-ICMPv4 on TG_if1-DUT, Eth-IPv4-GRE-IPv4-ICMPv4 on TG_if2_DUT. [Cfg] On DUT1 configure GREoIPv4 tunnel with IPv4 route towards TG. [Ver] Make TG send non-encapsulated ICMPv4 Echo Req to DUT; verify TG received GREoIPv4 encapsulated packet is correct. [Ref] RFC2784. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT decapsulates IPv4 from GREoIPv4 tunnel - IPv4 header verification | ||

| + | |[Top] TG=DUT1. [Enc] Eth-IPv4-ICMPv4 on TG_if1-DUT, Eth-IPv4-GRE-IPv4-ICMPv4 on TG_if2_DUT. [Cfg] On DUT1 configure GREoIPv4 tunnel towards TG. [Ver] Make TG send ICMPv4 Echo Req encapsulated into GREoIPv4 towards VPP; verify TG received IPv4 de-encapsulated packet is correct. [Ref] RFC2784. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||
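TC02 and TC03 above verify the GRE header that the DUT adds and removes. In the base form described by RFC2784, with no checksum present, that header is four bytes: a 16-bit flags and version word followed by a 16-bit protocol type. The following is a minimal Python sketch of encapsulation and decapsulation under that assumption. The function names are hypothetical, and the outer Ethernet and IPv4 headers are left out.

```python
import struct

ETHERTYPE_IPV4 = 0x0800
GRE_CHECKSUM_PRESENT = 0x8000  # C bit
GRE_VERSION_MASK = 0x0007


def gre_encap(inner_packet: bytes, proto: int = ETHERTYPE_IPV4) -> bytes:
    """Prepend a base GRE header (no checksum, version 0) to the payload."""
    return struct.pack("!HH", 0, proto) + inner_packet


def gre_decap(gre_packet: bytes):
    """Return (protocol type, inner packet) from a base GRE packet."""
    if len(gre_packet) < 4:
        raise ValueError("truncated GRE header")
    flags_ver, proto = struct.unpack("!HH", gre_packet[:4])
    if flags_ver & GRE_CHECKSUM_PRESENT:
        raise ValueError("checksum field present; not handled in this sketch")
    if flags_ver & GRE_VERSION_MASK:
        raise ValueError("unexpected GRE version")
    return proto, gre_packet[4:]
```

A check in the spirit of TC02 would compare the decapsulated inner packet with the Echo Request originally sent by the TG.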

| + | |||

| + | ===LISP=== | ||

| + | ====LISP API==== | ||

| + | '''LISP API test cases''' - verify VPP DUT LISP API functionality: | ||

| + | * '''[Top]''' Network Topologies: DUT1 1-node topology. | ||

| + | * '''[Enc]''' Packet Encapsulations: None. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 gets configured with all LISP parameters. | ||

| + | * '''[Ver]''' Verification: DUT1 operational data gets verified following configuration. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC6830. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT can enable and disable LISP | ||

| + | |[Top] DUT1. [Enc] None. [Cfg1] Test LISP enable/disable API; On DUT1 enable LISP. [Ver1] Check DUT1 if LISP is enabled. [Cfg2] Then disable LISP. [Ver2] Check DUT1 if LISP is disabled. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT can add and delete locator_set | ||

| + | |[Top] DUT1. [Enc] None. [Cfg1] Test LISP locator_set API; on DUT1 configure locator_set and locator. [Ver1] Check DUT1 configured locator_set and locator are correct. [Cfg2] Then remove locator_set and locator. [Ver2] Check DUT1 locator_set and locator are removed. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT can add, reset and delete locator_set | ||

| + | |[Top] DUT1. [Enc] None. [Cfg1] Test LISP locator_set API; on DUT1 configure locator_set and locator. [Ver1] Check DUT1 locator_set and locator are correct. [Cfg2] Then reset locator_set and set it again. [Ver2] Check DUT1 locator_set and locator are correct. [Cfg3] Then remove locator_set and locator. [Ver3] Check DUT1 all locator_set and locators are removed. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT can add and delete eid address | ||

| + | |[Top] DUT1. [Enc] None. [Cfg1] Test LISP eid API; on DUT1 configure LISP eid IP address. [Ver1] Check DUT1 configured data is correct. [Cfg2] Remove configured data. [Ver2] Check DUT1 all eid IP addresses are removed. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC05: DUT can add and delete LISP map resolver address | ||

| + | |[Top] DUT1. [Enc] None. [Cfg1] Test LISP map resolver address API; on DUT1 configure LISP map resolver address. [Ver1] Check DUT1 configured data is correct. [Cfg2] Remove configured data. [Ver2] Check DUT1 all map resolver addresses are removed. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | ====LISP Dataplane Untagged==== | ||

| + | '''LISP static remote mapping test cases''' - verify VPP DUT LISP data plane with static mapping functionality: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4-LISP-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn for IPv4 routing over LISPoIPv4 tunnel; Eth-IPv6-LISP-IPv6-ICMPv6 on DUT1-DUT2, Eth-IPv6-ICMPv6 on TG-DUTn for IPv6 routing over LISPoIPv6 tunnel; Eth-IPv6-LISP-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn for IPv4 routing over LISPoIPv6 tunnel; Eth-IPv4-LISP-IPv6-ICMPv6 on DUT1-DUT2, Eth-IPv6-ICMPv6 on TG-DUTn for IPv6 routing over LISPoIPv4 tunnel. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with IPv4 (IPv6) routing and static routes. LISPoIPv4 (oIPv6) tunnel is configured between DUT1 and DUT2. | ||

| + | * '''[Ver]''' TG verification: Test ICMPv4 (or ICMPv6) Echo Request packets are sent in both directions by TG on links to DUT1 and DUT2; on receive TG verifies packets for correctness and their IPv4 (IPv6) src-addr, dst-addr and MAC addresses. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC6830. | ||
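The four encapsulation combinations listed under [Enc] follow one pattern: the outer IP version is that of the LISP tunnel, and the inner IP and ICMP versions are those of the routed traffic. A minimal sketch of that pattern is given here; the function name `lisp_encapsulations` and the dictionary keys are illustrative assumptions and are not taken from the CSIT sources.

```python
def lisp_encapsulations(inner: int, outer: int) -> dict:
    """Return the expected header stacks for IPv<inner> traffic routed
    over a LISPoIPv<outer> tunnel (hypothetical helper, not CSIT code)."""
    if inner not in (4, 6) or outer not in (4, 6):
        raise ValueError("IP version must be 4 or 6")
    return {
        # Tunnel link: outer IP header, LISP header, then the inner packet.
        "DUT1-DUT2": f"Eth-IPv{outer}-LISP-IPv{inner}-ICMPv{inner}",
        # TG-facing links carry the plain, unencapsulated packet.
        "TG-DUTn": f"Eth-IPv{inner}-ICMPv{inner}",
    }


if __name__ == "__main__":
    for inner, outer in [(4, 4), (6, 6), (4, 6), (6, 4)]:
        print(f"IPv{inner} over LISPoIPv{outer}:", lisp_encapsulations(inner, outer))
```

Because the TG only sees the inner packet, header verification on the TG side concerns the inner IP version in every combination.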

| + | |||

| + | {| class="wikitable" | ||

| + | !Name!!Documentation!!Message!!Status | ||

| + | |- | ||

| + | |TC01: DUT1 and DUT2 route IPv4 bidirectionally over LISPoIPv4 tunnel | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-LISP-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure IPv4 LISP remote static mappings. [Ver] Make TG send ICMPv4 Echo Req between its interfaces across both DUTs and LISP tunnel between them; verify IPv4 headers on received packets are correct. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC02: DUT1 and DUT2 route IPv6 bidirectionally over LISPoIPv6 tunnel | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-LISP-IPv6-ICMPv6 on DUT1-DUT2, Eth-IPv6-ICMPv6 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure IPv6 LISP remote static mappings. [Ver] Make TG send ICMPv6 Echo Req between its interfaces across both DUTs and LISP tunnel between them; verify IPv6 headers on received packets are correct. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC03: DUT1 and DUT2 route IPv4 bidirectionally over LISPoIPv6 tunnel | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv6-LISP-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure IPv6 LISP remote static mappings. [Ver] Make TG send ICMPv4 Echo Req between its interfaces across both DUTs and LISP tunnel between them; verify IPv4 headers on received packets are correct. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC04: DUT1 and DUT2 route IPv6 bidirectionally over LISPoIPv4 tunnel | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-LISP-IPv6-ICMPv6 on DUT1-DUT2, Eth-IPv6-ICMPv6 on TG-DUTn. [Cfg] On DUT1 and DUT2 configure IPv4 LISP remote static mappings. [Ver] Make TG send ICMPv6 Echo Req between its interfaces across both DUTs and LISP tunnel between them; verify IPv6 headers on received packets are correct. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |- | ||

| + | |TC05: DUT1 and DUT2 route IPv4 over LISPoIPv4 tunnel after disable-enable | ||

| + | |[Top] TG-DUT1-DUT2-TG. [Enc] Eth-IPv4-LISP-IPv4-ICMPv4 on DUT1-DUT2, Eth-IPv4-ICMPv4 on TG-DUTn. [Cfg1] On DUT1 and DUT2 configure IPv4 LISP remote static mappings. [Ver1] Make TG send ICMPv4 Echo Req between its interfaces across both DUTs and LISP tunnel between them; verify IPv4 headers on received packets are correct. [Cfg2] Disable LISP. [Ver2] Verify packets are not received via LISP tunnel. [Cfg3] Re-enable LISP. [Ver3] Verify packets are received again via LISP tunnel. [Ref] RFC6830. | ||

| + | | | ||

| + | |PASS | ||

| + | |} | ||

| + | |||

| + | |||

==Performance tests results== | ==Performance tests results== | ||

| − | |||

| − | |||

| − | === | + | CSIT VPP DUT performance test results in this report have been generated from the Robot Framework output log of LF Jenkins Job vpp-csit-verify-hw-perf-1606-long and post-processed into wiki format. Original RF report source files are available at Jenkins Job [https://jenkins.fd.io/view/csit/job/csit-vpp-perf-1606-all/ csit-vpp-perf-1606-all]: [https://jenkins.fd.io/view/csit/job/csit-vpp-perf-1606-all/5/artifact/log.html log.html], [https://jenkins.fd.io/view/csit/job/csit-vpp-perf-1606-all/5/artifact/output.xml output.xml], [https://jenkins.fd.io/view/csit/job/csit-vpp-perf-1606-all/5/artifact/report.html report.html]. |

| − | ==== | + | |

| − | ===== | + | In addition, VPP DUT performance trending graphs have been posted from LF Jenkins Job csit-vpp-verify-master-semiweekly. |

| − | ======Long | + | |

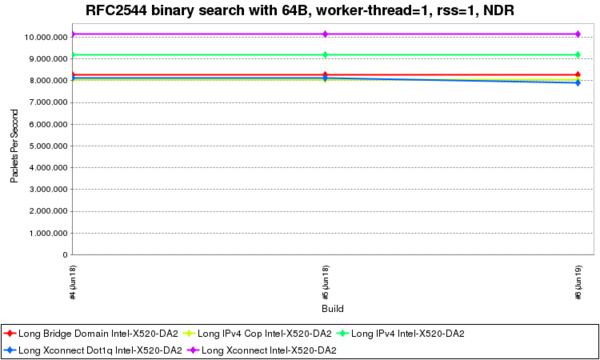

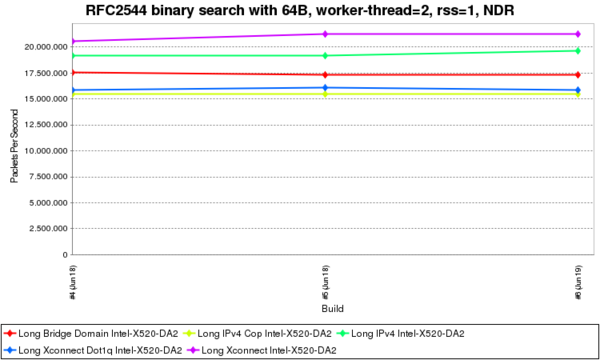

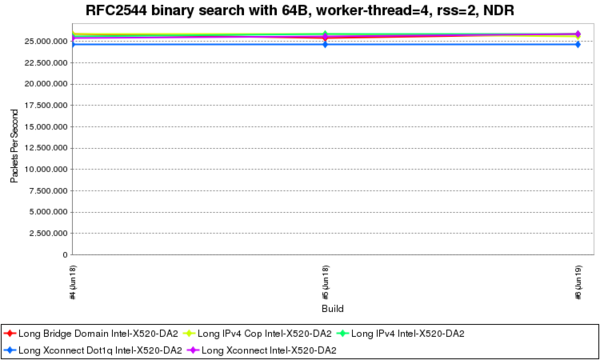

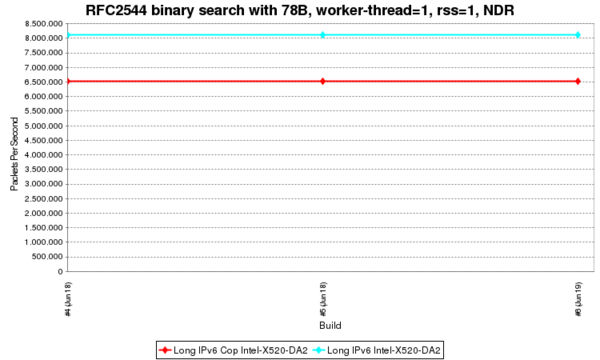

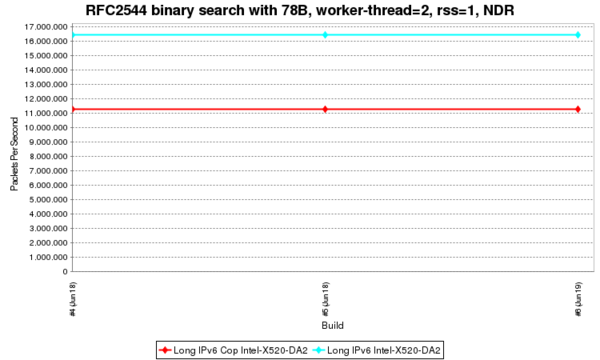

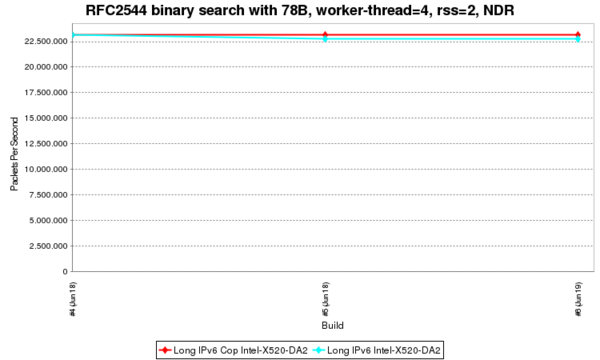

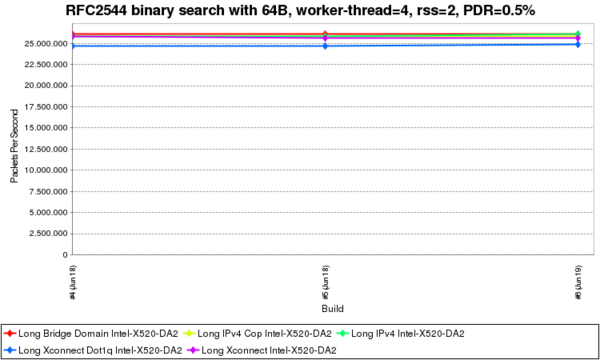

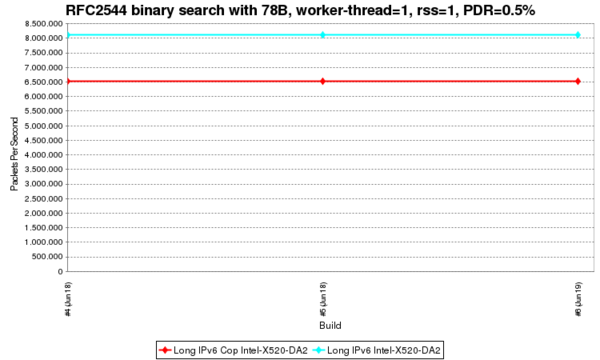

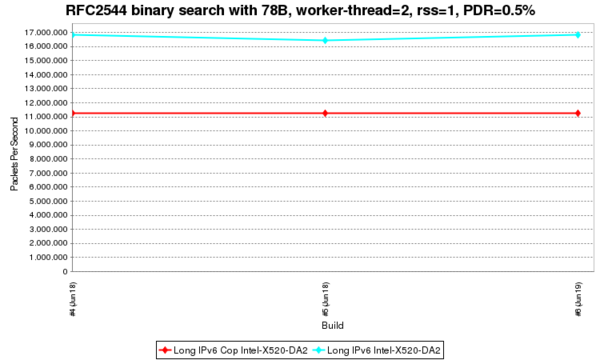

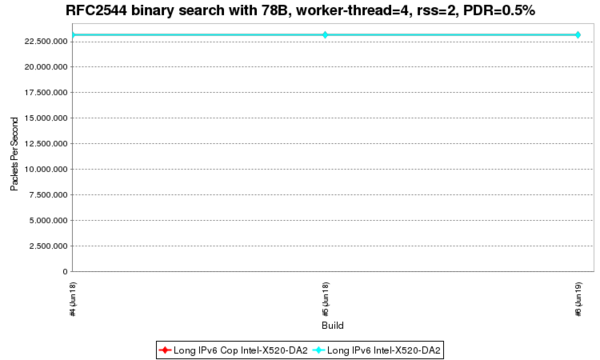

| + | ===VPP Trend Graphs RFC2544:NDR=== | ||

| + | VPP performance trend graphs from LF Jenkins Job csit-vpp-verify-master-semiweekly. | ||

| + | |||

| + | [[Image:Ndr plot1.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 64B, worker-thread=2, rss=1, NDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 64B, worker-thread=4, rss=2, NDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 78B, worker-thread=1, rss=1, NDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 78B, worker-thread=2, rss=1, NDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 78B, worker-thread=4, rss=2, NDR.png|border|600px]] | ||

| + | |||

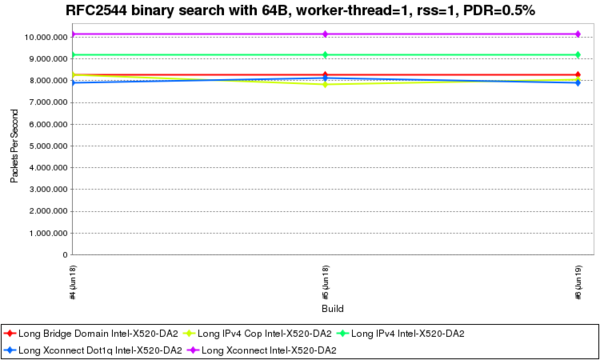

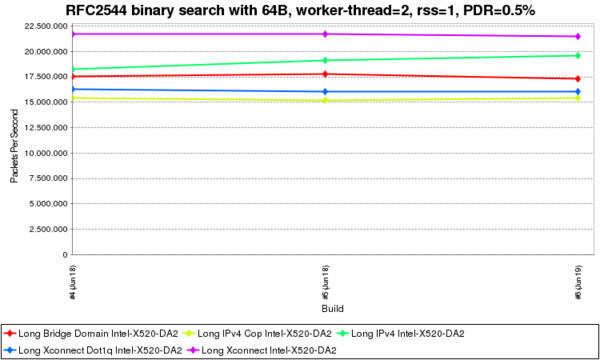

| + | ===VPP Trend Graphs RFC2544:PDR=== | ||

| + | VPP performance trend graphs from LF Jenkins Job csit-vpp-verify-master-semiweekly. | ||

| + | |||

| + | [[Image:RFC2544 binary search with 64B, worker-thread=1, rss=1, PDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 64B, worker-thread=2, rss=1, PDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 64B, worker-thread=4, rss=2, PDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 78B, worker-thread=1, rss=1, PDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 78B, worker-thread=2, rss=1, PDR.png|border|600px]] | ||

| + | [[Image:RFC2544 binary search with 78B, worker-thread=4, rss=2, PDR.png|border|600px]] | ||

| + | ===Long Performance Tests - NDR and PDR Search=== | ||

| + | ====Long L2 Cross-Connect Intel-X520-DA2==== | ||

| + | '''RFC2544: Pkt throughput L2XC test cases''' - verify VPP DUT maximum packet throughput performance: | ||

| + | * '''[Top]''' Network Topologies: TG-DUT1-DUT2-TG 3-node circular topology with single links between nodes. | ||

| + | * '''[Enc]''' Packet Encapsulations: Eth-IPv4 for L2 switching of IPv4. | ||

| + | * '''[Cfg]''' DUT configuration: DUT1 and DUT2 are configured with L2 cross-connect. DUT1 and DUT2 tested with 2p10GE NIC X520 Niantic by Intel. | ||

| + | * '''[Ver]''' TG verification: TG finds and reports throughput NDR (Non Drop Rate) with zero packet loss tolerance or throughput PDR (Partial Drop Rate) with non-zero packet loss tolerance (LT) expressed in percentage of packets transmitted. NDR and PDR are discovered for different Ethernet L2 frame sizes using either binary search or linear search algorithms with configured starting rate and final step that determines throughput measurement resolution. Test packets are generated by TG on links to DUTs. TG traffic profile contains two L3 flow-groups (flow-group per direction, 253 flows per flow-group) with all packets containing Ethernet header, IPv4 header with IP protocol=61 and static payload. MAC addresses are matching MAC addresses of the TG node interfaces. | ||

| + | * '''[Ref]''' Applicable standard specifications: RFC2544. | ||
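The [Ver] description of NDR and PDR discovery can be illustrated with a generic binary search over the offered rate. The sketch below is an assumption-laden illustration, not the CSIT implementation: `search_throughput` and `simulated_loss` are hypothetical names, the measurement callback stands in for a real TG trial, and loss tolerance is expressed as a fraction (LT=0.5% becomes 0.005).

```python
from typing import Callable


def search_throughput(measure_loss: Callable[[float], float],
                      start_rate: float,
                      final_step: float,
                      loss_tolerance: float = 0.0) -> float:
    """Binary search for the highest rate whose loss ratio stays within
    loss_tolerance (0.0 gives NDR, a non-zero value gives PDR).

    measure_loss(rate) is a hypothetical callback returning the fraction
    of transmitted packets lost at the given offered rate in pps.
    """
    # If the starting rate (e.g. linerate) already passes, report it.
    if measure_loss(start_rate) <= loss_tolerance:
        return start_rate
    passing, failing = 0.0, start_rate
    # Narrow the interval until it is no wider than the final step,
    # which sets the resolution of the throughput measurement.
    while failing - passing > final_step:
        trial = (passing + failing) / 2.0
        if measure_loss(trial) <= loss_tolerance:
            passing = trial
        else:
            failing = trial
    return passing


def simulated_loss(rate: float, capacity: float = 10_000_000.0) -> float:
    """Toy DUT model (assumption): drops everything above a fixed capacity."""
    return max(0.0, (rate - capacity) / rate)


if __name__ == "__main__":
    linerate = 14_880_952.0  # approx. 10GE linerate for 64B frames, in pps
    ndr = search_throughput(simulated_loss, linerate, 100_000.0)
    pdr = search_throughput(simulated_loss, linerate, 100_000.0, 0.005)
    print(f"NDR: {ndr:.1f} pps, PDR: {pdr:.1f} pps")
```

With this toy model the PDR result is never lower than the NDR result, since any rate that passes with zero loss tolerance also passes with a non-zero one.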

| + | |||
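The FINAL_RATE and FINAL_BANDWIDTH values reported in the results table are related by simple arithmetic. The reported figures are consistent with counting 20 bytes of per-frame Ethernet L1 overhead (preamble, start-of-frame delimiter and inter-frame gap) on top of the L2 frame size; this is inferred from the numbers and is not stated in the report. A minimal sketch, with hypothetical helper names:

```python
# Assumed per-frame L1 overhead in bytes: 7B preamble + 1B SFD + 12B inter-frame gap.
L1_OVERHEAD_BYTES = 20


def l1_bits_per_frame(frame_size: int) -> int:
    """Bits occupied on the wire by one L2 frame of frame_size bytes."""
    return (frame_size + L1_OVERHEAD_BYTES) * 8


def bandwidth_gbps(rate_pps: float, frame_size: int) -> float:
    """Convert an aggregate packet rate into L1 bandwidth in Gbps."""
    return rate_pps * l1_bits_per_frame(frame_size) / 1e9


def linerate_pps(link_bps: float, frame_size: int) -> float:
    """Maximum packet rate of a single link direction for a frame size."""
    return link_bps / l1_bits_per_frame(frame_size)


if __name__ == "__main__":
    # 64B result: aggregate rate over both directions.
    print(bandwidth_gbps(10130952.125, 64))
    # 1518B result, and the 10GE per-direction linerate for comparison.
    print(bandwidth_gbps(1625486.0, 1518), linerate_pps(10e9, 1518))
```

Under this assumption the 1518B and 9000B per-direction rates in the table (812743.0 pps and 138580.0 pps) equal the 10GE linerate rounded down to whole packets, while the 64B rate is below the 64B linerate of roughly 14.88 Mpps per direction.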

{| class="wikitable" | {| class="wikitable" | ||

!Name!!Documentation!!Message!!Status | !Name!!Documentation!!Message!!Status | ||

|- | |- | ||

| − | |TC01: 64B NDR binary search - DUT | + | |TC01: 64B NDR binary search - DUT L2XC - 1thread 1core 1rxq |

| − | |[Cfg] DUT runs | + | |[Cfg] DUT runs L2XC switching config with 1 thread, 1 phy core, 1 receive queue per NIC port. [Ver] Find NDR for 64 Byte frames using binary search start at 10GE linerate, step 100kpps. |

| − | using binary search start at 10GE linerate, step 100kpps. | + | |FINAL_RATE: 10130952.125 pps (2x 5065476.0625 pps) FINAL_BANDWIDTH: 6.807999828 Gbps |

| − | |FINAL_RATE: | + | |

|PASS | |PASS | ||

|- | |- | ||

| − | |TC02: 64B PDR binary search - DUT | + | |TC02: 64B PDR binary search - DUT L2XC - 1thread 1core 1rxq |

| − | |[Cfg] DUT runs | + | |[Cfg] DUT runs L2XC switching config with 1 thread, 1 phy core, 1 receive queue per NIC port. [Ver] Find PDR for 64 Byte frames using binary search start at 10GE linerate, step 100kpps, LT=0.5%. |

| − | using binary search start at 10GE linerate, step 100kpps, LT=0.5%. | + | |FINAL_RATE: 10130952.125 pps (2x 5065476.0625 pps) FINAL_BANDWIDTH: 6.807999828 Gbps |

| − | |FINAL_RATE: | + | |

| − | + | ||

|PASS | |PASS | ||

|- | |- | ||

| − | |TC03: 1518B NDR binary search - DUT | + | |TC03: 1518B NDR binary search - DUT L2XC - 1thread 1core 1rxq |

| − | |[Cfg] DUT runs | + | |[Cfg] DUT runs L2XC switching config with 1 thread, 1 phy core, 1 receive queue per NIC port. [Ver] Find NDR for 1518 Byte frames using binary search start at 10GE linerate, step 10kpps. |

| − | using binary search start at 10GE linerate, step 10kpps. | + | |

|FINAL_RATE: 1625486.0 pps (2x 812743.0 pps) FINAL_BANDWIDTH: 19.999979744 Gbps | |FINAL_RATE: 1625486.0 pps (2x 812743.0 pps) FINAL_BANDWIDTH: 19.999979744 Gbps | ||

|PASS | |PASS | ||

|- | |- | ||

| − | |TC04: 1518B PDR binary search - DUT | + | |TC04: 1518B PDR binary search - DUT L2XC - 1thread 1core 1rxq |

| − | |[Cfg] DUT runs | + | |[Cfg] DUT runs L2XC switching config with 1 thread, 1 phy core, 1 receive queue per NIC port. [Ver] Find PDR for 1518 Byte frames using binary search start at 10GE linerate, step 10kpps, LT=0.5%. |

| − | using binary search start at 10GE linerate, step 10kpps, LT=0.5%. | + | |FINAL_RATE: 1625486.0 pps (2x 812743.0 pps) FINAL_BANDWIDTH: 19.999979744 Gbps |

| − | |FINAL_RATE: 1625486.0 pps (2x 812743.0 pps) FINAL_BANDWIDTH: 19.999979744 Gbps | + | |

| − | + | ||

|PASS | |PASS | ||

|- | |- | ||

| − | |TC05: 9000B NDR binary search - DUT | + | |TC05: 9000B NDR binary search - DUT L2XC - 1thread 1core 1rxq |

| − | |[Cfg] DUT runs | + | |[Cfg] DUT runs L2XC switching config with 1 thread, 1 phy core, 1 receive queue per NIC port. [Ver] Find NDR for 9000 Byte frames using binary search start at 10GE linerate, step 5kpps. |

| − | using binary search start at 10GE linerate, step 5kpps. | + | |

|FINAL_RATE: 277160.0 pps (2x 138580.0 pps) FINAL_BANDWIDTH: 19.9998656 Gbps | |FINAL_RATE: 277160.0 pps (2x 138580.0 pps) FINAL_BANDWIDTH: 19.9998656 Gbps | ||

|PASS | |PASS | ||

|- | |- | ||

| − | |TC06: 9000B PDR binary search - DUT | + | |TC06: 9000B PDR binary search - DUT L2XC - 1thread 1core 1rxq |

| − | |[Cfg] DUT runs | + | |[Cfg] DUT runs L2XC switching config with 1 thread, 1 phy core, 1 receive queue per NIC port. [Ver] Find PDR for 9000 Byte frames using binary search start at 10GE linerate, step 5kpps, LT=0.5%. |

| − | using binary search start at 10GE linerate, step 5kpps, LT=0.5%. | + | |FINAL_RATE: 277160.0 pps (2x 138580.0 pps) FINAL_BANDWIDTH: 19.9998656 Gbps |

| − | |FINAL_RATE: 277160.0 pps (2x 138580.0 pps) FINAL_BANDWIDTH: 19.9998656 Gbps | + | |

| − | + | ||

|PASS | |PASS | ||

|- | |- | ||