Difference between revisions of "VPPHostStack"

Florin.coras (Talk | contribs) (→Tutorials/Test Apps) |

Florin.coras (Talk | contribs) (→Tutorials/Test Apps) |

||

| Line 52: | Line 52: | ||

== Tutorials/Test Apps == | == Tutorials/Test Apps == | ||

| − | [[VPP/HostStack/TestHttpServer|Test HTTP Server]] | + | [[VPP/HostStack/TestHttpServer|Test HTTP Server App]] |

| − | [[VPP/HostStack/TestProxy|Test Proxy]] | + | [[VPP/HostStack/TestProxy|Test Proxy App]] |

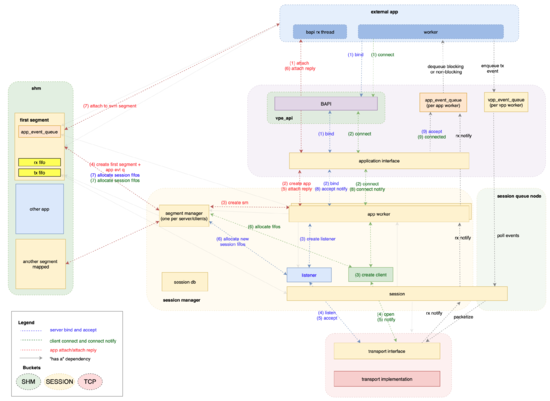

== Session Layer Architecture == | == Session Layer Architecture == | ||

Revision as of 00:56, 7 March 2018


Description

VPP's host stack is a user space implementation of a number of transport, session and application layer protocols that leverages VPP's existing protocol stack. It roughly consists of four major components:

- Session Layer that accepts pluggable transport protocols

- Shared memory mechanisms for pushing data between VPP and applications

- Transport protocol implementations (e.g. TCP, SCTP, UDP)

- VPP Comms Library (VCL) and LD_PRELOAD library

Start Here

Set Up Dev Environment - Explains how to set up a VPP development environment and the requirements for using the build tools

Getting Started

Applications can link against the following APIs for host-stack service:

- Builtin C API. It can only be used by applications hosted within VPP.

- "Raw" session layer API. It does not offer any support for asynchronous communication.

- VCL API, which offers a POSIX-like interface and comes with its own epoll implementation.

- POSIX API through LD_PRELOAD.
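The LD_PRELOAD option means that a program written against ordinary POSIX sockets can, in principle, use the host stack without source changes. The sketch below is one such program: a minimal TCP echo round trip over loopback that uses only standard socket calls. The helper names `posix_echo_roundtrip` and `recv_exact` are hypothetical. Whether a given program runs unmodified under the preload library depends on which socket calls and options it uses. The path of the library to preload is not given on this page.

```c
#define _POSIX_C_SOURCE 200809L
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Read exactly len bytes; TCP may deliver data in several pieces. */
static int recv_exact(int fd, char *buf, size_t len)
{
    size_t done = 0;
    while (done < len) {
        ssize_t r = recv(fd, buf + done, len - done, 0);
        if (r <= 0)
            return -1;
        done += (size_t)r;
    }
    return 0;
}

/* Hypothetical helper: echo msg through a loopback TCP connection using
 * only POSIX socket calls. Returns the bytes echoed into out, or -1. */
static ssize_t posix_echo_roundtrip(const char *msg, char *out, size_t outlen)
{
    struct sockaddr_in addr;
    socklen_t addrlen = sizeof(addr);
    char buf[256];
    size_t len;
    ssize_t ret = -1;
    int lfd = -1, cfd = -1, afd = -1;

    assert(msg != NULL && out != NULL);
    len = strlen(msg);
    if (len == 0 || len > sizeof(buf) || len > outlen)
        return -1;

    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0; /* let the stack choose a free port */

    /* Server: bind, listen, and learn which port was chosen. */
    if ((lfd = socket(AF_INET, SOCK_STREAM, 0)) < 0 ||
        bind(lfd, (struct sockaddr *)&addr, sizeof(addr)) < 0 ||
        listen(lfd, 1) < 0 ||
        getsockname(lfd, (struct sockaddr *)&addr, &addrlen) < 0)
        goto done;

    /* Client connects (the handshake completes via the listen backlog,
     * so a single thread suffices); the server then accepts. */
    if ((cfd = socket(AF_INET, SOCK_STREAM, 0)) < 0 ||
        connect(cfd, (struct sockaddr *)&addr, sizeof(addr)) < 0 ||
        (afd = accept(lfd, NULL, NULL)) < 0)
        goto done;

    /* Client sends, server echoes back, client reads the echo. */
    if (send(cfd, msg, len, 0) != (ssize_t)len ||
        recv_exact(afd, buf, len) < 0 ||
        send(afd, buf, len, 0) != (ssize_t)len ||
        recv_exact(cfd, out, len) < 0)
        goto done;
    ret = (ssize_t)len;

done:
    if (afd >= 0) close(afd);
    if (cfd >= 0) close(cfd);
    if (lfd >= 0) close(lfd);
    return ret;
}
```

Built as a normal executable, this runs against the kernel stack. The point of the LD_PRELOAD option is that the same binary could be started with the preload library instead.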

A number of test applications can be used to exercise these APIs. All the examples below assume that two VPP instances have been brought up and configured so that there is network connectivity between them. The builtin ping tool can be used to verify connectivity. By convention, the first VPP instance (vpp1) is the one the server attaches to, and the second instance (vpp2) is the one the client application attaches to. For illustrative purposes, all examples use TCP as the transport protocol, but other available protocols could be used.

Builtin Echo Server/Client

On vpp1, from the CLI, run:

# test echo server uri tcp://vpp1_ip/port

and on vpp2:

# test echo client uri tcp://vpp1_ip/port
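Both echo apps take a URI of the form proto://ip/port. The hypothetical helper `parse_echo_uri` below splits such a URI into protocol, address and port. It assumes exactly the form used on this page and handles no alternative syntaxes. VPP's own URI parsing may accept more forms.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical parsed form of a test-app URI such as tcp://10.0.0.1/1234. */
typedef struct {
    char proto[8];   /* e.g. "tcp" or "udp" */
    char ip[64];     /* address as written, e.g. "10.0.0.1" */
    unsigned port;   /* 0..65535 */
} echo_uri_t;

/* Split "proto://ip/port" into its parts. Returns 0 on success, -1 on error. */
static int parse_echo_uri(const char *uri, echo_uri_t *out)
{
    assert(uri != NULL && out != NULL);

    /* Protocol: everything before "://". */
    const char *sep = strstr(uri, "://");
    if (sep == NULL || sep == uri || (size_t)(sep - uri) >= sizeof(out->proto))
        return -1;
    memcpy(out->proto, uri, (size_t)(sep - uri));
    out->proto[sep - uri] = '\0';

    /* Address: between "://" and the last '/'. */
    const char *host = sep + 3;
    const char *slash = strrchr(host, '/');
    if (slash == NULL || slash == host || (size_t)(slash - host) >= sizeof(out->ip))
        return -1;
    memcpy(out->ip, host, (size_t)(slash - host));
    out->ip[slash - host] = '\0';

    /* Port: decimal digits after the last '/', within 16 bits. */
    char *end = NULL;
    unsigned long port = strtoul(slash + 1, &end, 10);
    if (end == slash + 1 || *end != '\0' || port > 65535)
        return -1;
    out->port = (unsigned)port;
    return 0;
}
```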

For more details on how to further configure the client/server apps for throughput and CPS (connections per second) testing, see here.

External Echo Server/Client

To build the external echo test apps, first edit src/vnet.am so that the test apps are installed:

# sed -i 's/noinst_PROGRAMS += tcp_echo udp_echo/bin_PROGRAMS += tcp_echo udp_echo/' src/vnet.am

Start vpp1 and attach the server application:

$ ./build-root/install-vpp_debug-native/vpp/bin/tcp_echo uri tcp://vpp1_ip/port

Then start vpp2 and attach the client:

$ ./build-root/install-vpp_debug-native/vpp/bin/tcp_echo slave uri tcp://vpp1_ip/port

VCL socket client/server

For more details see the tutorial here

Tutorials/Test Apps

- Test HTTP Server App

- Test Proxy App

Session Layer Architecture

TBD: walk through

Session Namespaces

To constrain the range of communication, applications are expected to provide, at attachment time, the namespace they belong to and its secret. Namespaces are configured in advance and independently of applications. They associate applications with network layer resources such as interfaces and FIB tables, and therefore constrain the source IPs applications can use and limit the scope of routing. Applications that request no namespace are assigned to the default one, which uses the default FIB and, by default, has no secret configured.
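A minimal sketch of the namespace model described above, assuming a hypothetical in-memory table. Each namespace binds an id to a FIB index, an optional interface and a secret, and an attaching application must present the matching secret. Several details are illustrative assumptions and not VPP's API: the struct layout, the use of 0 to mean "no secret configured", and the names `ns_add` and `ns_attach`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define NS_MAX 8
#define NO_SW_IF_INDEX UINT32_MAX

/* Hypothetical namespace record: ties apps to a FIB table, an optional
 * interface and a secret. Slot 0 is the default namespace. */
typedef struct {
    char id[32];
    uint64_t secret;        /* assumption: 0 means "no secret configured" */
    uint32_t fib_index;
    uint32_t sw_if_index;   /* NO_SW_IF_INDEX if not tied to an interface */
} app_ns_t;

static app_ns_t ns_table[NS_MAX] = {
    { "default", 0, 0, NO_SW_IF_INDEX },  /* default FIB, no secret */
};
static int ns_count = 1;

/* Configure a namespace in advance, independently of any application.
 * Returns its index, or -1 if the table is full or the id is too long. */
static int ns_add(const char *id, uint64_t secret, uint32_t fib, uint32_t sw_if)
{
    assert(id != NULL);
    if (ns_count == NS_MAX || strlen(id) >= sizeof(ns_table[0].id))
        return -1;
    app_ns_t *ns = &ns_table[ns_count];
    strcpy(ns->id, id);
    ns->secret = secret;
    ns->fib_index = fib;
    ns->sw_if_index = sw_if;
    return ns_count++;
}

/* At attach time the app names a namespace (or none) and a secret.
 * Returns the namespace index, or -1 if unknown or the secret mismatches. */
static int ns_attach(const char *id, uint64_t secret)
{
    if (id == NULL || id[0] == '\0')
        id = "default";
    for (int i = 0; i < ns_count; i++)
        if (strcmp(ns_table[i].id, id) == 0)
            return (ns_table[i].secret == 0 || ns_table[i].secret == secret) ? i : -1;
    return -1;
}
```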

Multiple namespaces can use the same FIB table, in which case local inter-namespace communication uses shared memory fifos. However, if the namespaces use different FIB tables, communication can only be established after IP routing, and only if FIB table/VRF leaking is configured. In that case, shared memory communication is no longer supported. Note that when a sw_if_index is provided for a namespace, zero-IP (INADDR_ANY) binds are converted into binds to the requested interface.

Session Tables

Applications can also specify the scope of their communication within a namespace. That is, they can request a session layer local scope, as opposed to a global scope that requires assistance from the transport and network layers. In this mode of communication, shared-memory fifos (cut-through sessions) are used exclusively. Nonetheless, due to existing application idiosyncrasies, zero (INADDR_ANY) local host IP addresses must still be provided in the session establishment messages.
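Cut-through sessions exchange data through fifos placed in shared memory. The sketch below shows the basic idea as a single-producer/single-consumer byte ring built with C11 atomics. It is not VPP's fifo implementation, and the names are hypothetical. For brevity, the fifo is an ordinary struct rather than one placed in a memory segment mapped by both the server and client processes.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define FIFO_SIZE 16  /* power of two, so indices wrap with a mask */

/* Hypothetical SPSC byte fifo; head/tail are free-running counters. */
typedef struct {
    _Atomic uint32_t head;   /* next byte to read, advanced by the consumer */
    _Atomic uint32_t tail;   /* next byte to write, advanced by the producer */
    uint8_t data[FIFO_SIZE];
} byte_fifo_t;

/* Producer side: copy up to len bytes in; returns how many fit. */
static size_t fifo_enqueue(byte_fifo_t *f, const void *src, size_t len)
{
    assert(f != NULL && src != NULL);
    uint32_t tail = atomic_load_explicit(&f->tail, memory_order_relaxed);
    uint32_t head = atomic_load_explicit(&f->head, memory_order_acquire);
    size_t free_space = FIFO_SIZE - (tail - head);
    if (len > free_space)
        len = free_space;
    for (size_t i = 0; i < len; i++)
        f->data[(tail + i) & (FIFO_SIZE - 1)] = ((const uint8_t *)src)[i];
    /* Publish the bytes only after they have been written. */
    atomic_store_explicit(&f->tail, tail + (uint32_t)len, memory_order_release);
    return len;
}

/* Consumer side: copy up to len bytes out; returns how many were read. */
static size_t fifo_dequeue(byte_fifo_t *f, void *dst, size_t len)
{
    assert(f != NULL && dst != NULL);
    uint32_t head = atomic_load_explicit(&f->head, memory_order_relaxed);
    uint32_t tail = atomic_load_explicit(&f->tail, memory_order_acquire);
    size_t used = tail - head;
    if (len > used)
        len = used;
    for (size_t i = 0; i < len; i++)
        ((uint8_t *)dst)[i] = f->data[(head + i) & (FIFO_SIZE - 1)];
    /* Release the space only after the bytes have been copied out. */
    atomic_store_explicit(&f->head, head + (uint32_t)len, memory_order_release);
    return len;
}
```

Because each index is written by only one side, no lock is needed. The acquire/release pairs ensure the reader never sees a tail that runs ahead of the data.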

This separation allowed us to define a type of session layer ACL, which we call session rules, whereby connections are allowed, denied or redirected to applications. The local tables are namespace specific and can be used for egress session/connection filtering, i.e., connects to a given IP or IP prefix and port can be denied. Global tables, on the other hand, are FIB table specific and can be used for ingress filtering, i.e., incoming connects to an IP/port can be allowed or dropped.
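The split between local and global tables can be sketched as two lookups keyed differently. An application's outgoing connect is checked against its namespace's local table. An incoming connect is checked against the global table of the FIB it arrived on. Everything here is a hypothetical simplification: rules are exact IP/port matches, the redirect action is omitted, and a connection that matches no rule is allowed.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_RULES  8
#define MAX_TABLES 4

typedef enum { ACT_ALLOW, ACT_DENY } rule_action_t;

typedef struct {
    uint32_t ip;            /* IPv4, host byte order */
    uint16_t port;
    rule_action_t action;
} exact_rule_t;

typedef struct {
    exact_rule_t rules[MAX_RULES];
    int n;
} rule_table_t;

static rule_table_t local_tables[MAX_TABLES];   /* one per namespace */
static rule_table_t global_tables[MAX_TABLES];  /* one per FIB table */

static int table_add(rule_table_t *t, uint32_t ip, uint16_t port, rule_action_t a)
{
    if (t->n == MAX_RULES)
        return -1;
    t->rules[t->n++] = (exact_rule_t){ ip, port, a };
    return 0;
}

/* First matching rule wins; no match means the connection is allowed. */
static rule_action_t table_lookup(const rule_table_t *t, uint32_t ip, uint16_t port)
{
    for (int i = 0; i < t->n; i++)
        if (t->rules[i].ip == ip && t->rules[i].port == port)
            return t->rules[i].action;
    return ACT_ALLOW;
}

/* Egress: an app's connect is filtered by its namespace's local table. */
static rule_action_t check_connect(uint32_t ns_index, uint32_t rmt_ip, uint16_t rmt_port)
{
    assert(ns_index < MAX_TABLES);
    return table_lookup(&local_tables[ns_index], rmt_ip, rmt_port);
}

/* Ingress: an incoming connect is filtered by its FIB's global table. */
static rule_action_t check_incoming(uint32_t fib_index, uint32_t lcl_ip, uint16_t lcl_port)
{
    assert(fib_index < MAX_TABLES);
    return table_lookup(&global_tables[fib_index], lcl_ip, lcl_port);
}
```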

The session rules are implemented as a match-mask-action data structure and support longest ip prefix matching and port wildcarding.
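A match-mask-action lookup with longest prefix matching and port wildcarding might look like the following sketch. It makes several assumptions:

- IPv4 only, with addresses in host byte order.
- Port 0 acts as the wildcard.
- Rules are found by a linear scan rather than an optimized data structure.
- The tie-break (on equal prefix length, an exact port beats the wildcard) is chosen for illustration, not taken from VPP.

The names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { RULE_ALLOW, RULE_DENY, RULE_REDIRECT } rule_action_t;

/* Hypothetical rule: (ip & mask(plen)) must equal match_ip; port 0 = any. */
typedef struct {
    uint32_t match_ip;      /* host byte order, already masked */
    uint8_t  plen;          /* prefix length, 0..32 */
    uint16_t port;          /* 0 acts as a wildcard */
    rule_action_t action;
    uint32_t app_index;     /* target app when action == RULE_REDIRECT */
} session_rule_t;

static uint32_t plen_to_mask(uint8_t plen)
{
    assert(plen <= 32);
    return plen == 0 ? 0 : UINT32_MAX << (32 - plen);  /* avoid shift by 32 */
}

/* Return the most specific matching rule: the longest prefix wins, and on
 * equal prefixes an exact port beats the wildcard. NULL if nothing matches. */
static const session_rule_t *rule_lookup(const session_rule_t *rules, int n,
                                         uint32_t ip, uint16_t port)
{
    const session_rule_t *best = NULL;
    for (int i = 0; i < n; i++) {
        const session_rule_t *r = &rules[i];
        if ((ip & plen_to_mask(r->plen)) != r->match_ip)
            continue;                       /* mask + match on the IP */
        if (r->port != 0 && r->port != port)
            continue;                       /* exact port or wildcard */
        if (best == NULL || r->plen > best->plen ||
            (r->plen == best->plen && best->port == 0 && r->port != 0))
            best = r;
    }
    return best;
}
```

For example, with rules 10.0.0.0/8 allow, 10.1.0.0/16 deny and 10.1.0.0/16 port 80 redirect, a connect to 10.1.2.3:80 picks the redirect rule. A connect to 10.1.2.3:22 is denied, and one to 10.2.3.4:80 is allowed.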