VPP Sandbox/router

Abstract

This document describes our experience building a high-performance open-source OSPFv2 router using the BIRD Internet Routing Daemon (BIRD) and Vector Packet Processing (VPP) technology with the Data Plane Development Kit (DPDK).

Introduction

In building an OSPF router we explored two methods for interacting with the control plane. First, we attempted to intercept Netlink requests using LD_PRELOAD, handling the associated system calls on behalf of the host operating system but responding with the data plane's view of the world. Second, we created a tap interface for each data plane device and mirrored the Netlink configuration applied to the host operating system onto the data plane. Both methods presented their own challenges, which are detailed below.

Method 1: LD_PRELOAD

The LD_PRELOAD method required us to implement twelve socket-API-related calls such as socket, bind, getsockname, if_nametoindex, sendmsg, and recvmsg. Although implementing the Netlink route protocol as a socket handler proved trivial, the surrounding socket API functionality makes the approach complicated. When an application using Netlink calls, for example, select() on a socket, it may also pass file descriptors that are not known to the data plane. The implementation then has to select on the host operating system's descriptors as well as its own while still presenting a unified response to the application, which adds significant complexity.
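The select() problem described above can be sketched as follows. This is a minimal Python model, not the shim itself (which would be C loaded via LD_PRELOAD). The names `DP_FD_BASE`, `is_dataplane_fd`, `unified_select`, and the `dp_readable` callback are hypothetical and stand in for however the shim distinguishes and polls its own descriptors.

```python
import select

# Assumption: descriptors at or above this value belong to the data-plane shim.
DP_FD_BASE = 1 << 20


def is_dataplane_fd(fd):
    return fd >= DP_FD_BASE


def unified_select(rlist, dp_readable, timeout=0.0):
    """Return the readable subset of rlist across host and data-plane fds.

    dp_readable is a callable standing in for a data-plane query: it takes a
    list of data-plane fds and returns those with data pending.
    """
    host_fds = [fd for fd in rlist if not is_dataplane_fd(fd)]
    dp_fds = [fd for fd in rlist if is_dataplane_fd(fd)]

    ready_dp = list(dp_readable(dp_fds))
    # If the data plane already has something ready, only poll the host.
    host_timeout = 0.0 if ready_dp else timeout
    ready_host = []
    if host_fds:
        ready_host = select.select(host_fds, [], [], host_timeout)[0]

    # Present one answer, in the order the caller supplied the descriptors.
    ready = set(ready_host) | set(ready_dp)
    return [fd for fd in rlist if fd in ready]
```

The sketch only polls the data plane once before waiting on the host descriptors, so a data-plane event that arrives during a blocking wait is missed until the timeout expires. A real shim would need some wake-up mechanism between the two sides, which is part of the complexity noted above.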

In addition to the socket API functionality, the LD_PRELOAD method becomes complicated due to process management. Each application is essentially a data plane client, which has to be connected and disconnected. Doing this in constructors and destructors does not work well when the process calls fork, exits in error, or calls execve. Another challenge with fork is that the process's memory is copied for the child, and that copy would normally include Netlink sockets, which must somehow be copied in the data plane from the prior client (the parent) to the new client (the child).
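As an illustration of the fork case only, the sketch below models a client that re-attaches in the child and keeps the socket state it inherited. `DataPlaneClient` and its fields are hypothetical; `os.register_at_fork` is the Python standard library hook (a C shim would more likely use `pthread_atfork`).

```python
import os


class DataPlaneClient:
    """Hypothetical stand-in for a per-process data-plane client session."""

    def __init__(self):
        self.sockets = {}   # shim fd -> opaque Netlink socket state
        self.connects = 0   # number of sessions this object has opened
        self.pid = None
        self.connect()

    def connect(self):
        # A real shim would attach to the data plane here.
        self.pid = os.getpid()
        self.connects += 1

    def reconnect_in_child(self):
        # The child holds a copy of the parent's socket table, but the
        # session belongs to the parent. Open a new session and carry
        # the inherited sockets over to it.
        inherited = dict(self.sockets)
        self.connect()
        self.sockets = inherited


def install_fork_hook(client):
    # Run the reconnect in every child created by fork().
    os.register_at_fork(after_in_child=client.reconnect_in_child)
```

This covers fork only. It does nothing for execve, where the preloaded state is discarded, or for abnormal exit, where no disconnect runs.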

When implementing OSPF with BIRD, we found that the daemon (unsurprisingly) wants to use the information it receives via Netlink. While it was relatively straightforward to present the daemon with lists of links, addresses, and routes, which it integrated seamlessly into its internal routing table, running the OSPF protocol proved more challenging. The first thing BIRD does for an interface configured for OSPFv2 is open a raw IPv4 socket and attempt to bind it to that interface. Because the interface names and indices returned by the data plane do not coincide with those of the host operating system, the bind fails. Therefore, unless the raw IP socket is handled in addition to the Netlink socket, the router daemon cannot send or receive packets on the interfaces it is required to manage. Much of the IP functionality is implemented in the data plane, so it may seem reasonable to hook up a packet transfer interface to the binary API; however, this brings the same complexities as the sockets interface, plus additional ones due to IP options and IP multicast. Especially considering that BGP uses TCP, the more appropriate approach seems to be to take advantage of the sockets and TCP/IP stack of the host operating system wherever possible.

Using the LD_PRELOAD method, the data plane can create a tap per data plane interface, replacing references to the data plane interface names and indices with those of the tap each time an application makes a relevant socket API call. Doing so ultimately requires an implementation of a Netlink server, as well as functionality to mirror the Netlink configuration to the host operating system (since the socket API calls will fail if, for example, interface addresses do not exist).
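The name and index substitution described above amounts to a two-way lookup. A minimal sketch, assuming hypothetical `Iface` and `TapMap` types and made-up index values:

```python
from typing import NamedTuple


class Iface(NamedTuple):
    name: str
    index: int


class TapMap:
    """Two-way map between data-plane interfaces and their host taps."""

    def __init__(self):
        self._pairs = []  # list of (data-plane Iface, tap Iface)

    def add(self, dp, tap):
        self._pairs.append((dp, tap))

    def to_tap(self, key):
        """Look up the tap for a data-plane interface name or index."""
        for dp, tap in self._pairs:
            if key in (dp.name, dp.index):
                return tap
        return None

    def to_dataplane(self, key):
        """Look up the data-plane interface for a tap name or index."""
        for dp, tap in self._pairs:
            if key in (tap.name, tap.index):
                return dp
        return None
```

A shim would consult `to_tap` before passing a name or index to the host (for example when binding a socket to an interface) and `to_dataplane` when translating host answers back.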

This makes the second method, where the data plane listens and mirrors the host configuration on taps, seemingly more straightforward for applications that require sending and receiving packets in addition to simply interacting with Netlink. The LD_PRELOAD method may be more desirable for applications which only handle configuration and do not touch any data, or for providing users with tools for probing the data plane e.g. iproute2 or ethtool, knowing that applications cannot use them.

Method 2: Tap

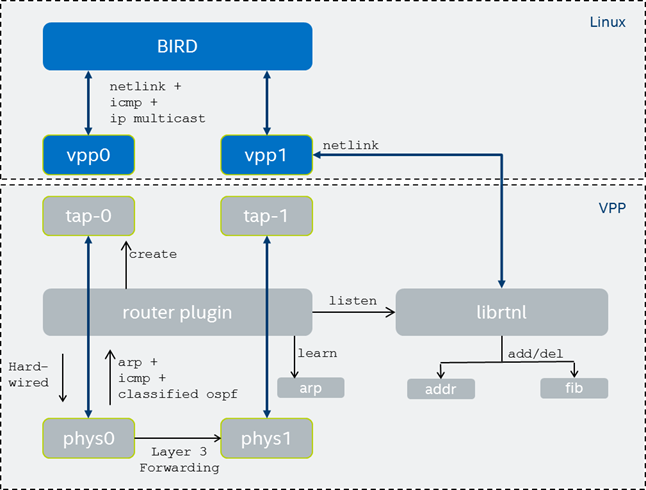

In the tap method the data plane creates a tap interface in the purview of the host operating system for a given data plane device. Each tap is “hardwired” to its data plane device in the control-to-data plane direction. The data plane interface is said to be “tapped” at this point; however, no traffic is directed towards the tap until the data plane is configured to do so. The data plane classifier is used to direct ingress packets towards a graph node which outputs the selected frames to the appropriate tap in the data-to-control plane direction. The Netlink node/plugin, currently under development, maps routes on the taps to the data plane's FIB.

This method poses two challenges. First, although all traffic from the control plane passes through the data plane interface, only OSPF traffic is passed back, which breaks important protocols like ARP. Layer 3 classifier rules cannot detect ARP, so a Layer 2 classification rule would be needed, and that would slow down IPv4 forwarding performance, which is not acceptable. Second, for OSPF to function properly, each router needs to know that the other is reachable. This is done via ICMP, so local ICMP traffic needs to arrive at the host operating system running the OSPF protocol.

For the first challenge, handling ARP, we considered two alternatives. The first is to simply mirror the Netlink neighbor events of the host operating system's interfaces onto the data plane, in addition to the routes. The second is to insert a node between ethernet-input and arp-input that separates ARP messages for tapped interfaces from those for non-tapped interfaces. The second alternative appears unnecessary: since we need to mirror routes on the interfaces anyway, we may as well handle neighbor/ARP entries in the data plane, too. However, handling ARP only in the data plane will not work, since the host operating system still needs to resolve the same addresses. So the best alternative is a combination of the two: in the data plane, “learn” or “snoop” neighbor/ARP replies but do not respond to ARP requests directly, and instead forward ARP to the control plane.
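The combined approach can be sketched as a small decision function over an ARP payload. This is an illustrative Python model rather than the graph node itself; `handle_arp`, the `neighbors` dictionary, and the `"to-tap"` label are hypothetical, while `arp-input` is the existing node named above.

```python
import socket
import struct

ARP_REQUEST, ARP_REPLY = 1, 2
# htype, ptype, hlen, plen, oper, sender MAC, sender IP, target MAC, target IP
ARP_FMT = struct.Struct("!HHBBH6s4s6s4s")


def handle_arp(payload, tapped, neighbors):
    """Return the next hop label for an ARP payload (after the Ethernet header).

    neighbors is a dict standing in for the data plane's neighbor table.
    """
    if not tapped:
        # Untapped interfaces keep the normal data-plane ARP handling.
        return "arp-input"

    htype, ptype, hlen, plen, oper, sha, spa, _tha, _tpa = ARP_FMT.unpack_from(payload)
    is_ether_ipv4 = (htype, ptype, hlen, plen) == (1, 0x0800, 6, 4)
    if oper == ARP_REPLY and is_ether_ipv4:
        # Snoop the reply so the data plane learns the resolution too.
        mac = ":".join(f"{b:02x}" for b in sha)
        neighbors[socket.inet_ntoa(spa)] = mac

    # Requests and replies alike go to the control plane; the data plane
    # never answers a request itself on a tapped interface.
    return "to-tap"
```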

For the second challenge, handling local ICMP traffic, we need to listen for Netlink address messages on the tap devices to learn the local addresses. The local addresses are applied to the tapped data plane interfaces. We can use ip4_register_protocol() to register a local input handler. For tapped interfaces the handler redirects ICMP to the tap, and for untapped interfaces it passes ICMP to ip4-icmp-input.
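A rough model of that handler's decision is shown below. It assumes, as an illustration only, that a packet goes to the tap when its ingress interface is tapped and its destination is one of the addresses learned for that interface. `local_icmp_next`, `tapped_addrs`, and the `"to-tap"` label are hypothetical names.

```python
import socket

IPPROTO_ICMP = 1


def local_icmp_next(ip_packet, ingress, tapped_addrs):
    """Pick the next hop label for a locally destined IPv4 ICMP packet.

    tapped_addrs maps a tapped data-plane interface name to the set of
    local addresses learned for it from Netlink address messages.
    """
    if ip_packet[9] != IPPROTO_ICMP:  # byte 9 of the IPv4 header is the protocol
        raise ValueError("handler is registered for ICMP only")

    dst = socket.inet_ntoa(ip_packet[16:20])  # bytes 16..19 are the destination
    if dst in tapped_addrs.get(ingress, ()):
        return "to-tap"
    return "ip4-icmp-input"
```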

Design

The router is implemented as a plugin. Shown below is a diagram of the various components.

The plugin initially does nothing. Once an interface is tapped (via the CLI), the plugin creates a tap with a given name and the interface’s MAC address, hard-wires the tap's input node to the interface's output node, and, if configured to do so, registers input nodes for ARP using ethernet_register_input_type(). The ARP node uses the hybrid method proposed above. For tapped interfaces, ARP requests are redirected to the tap via interface-output; for untapped interfaces they go to arp-input. ARP replies are "learned" before being sent to the tap interface. This way the control plane receives ARP replies for requests sent by itself and by the data plane, and the data plane learns all address resolutions requested by itself and by the control plane.

For the data plane to function as a router using the host as the control plane, the addresses, neighbors, and routes must be mirrored from the taps to the data plane. This is accomplished using the librtnl Netlink library.
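To make the mirroring concrete, the sketch below parses a single rtnetlink address message from raw bytes and applies it to a table keyed by interface index. It illustrates the Netlink wire format only and is not the librtnl API; `parse_addr_msg`, `mirror`, and `dp_addrs` are hypothetical names. The numeric constants are the standard Linux rtnetlink values.

```python
import socket
import struct

RTM_NEWADDR, RTM_DELADDR = 20, 21
IFA_ADDRESS, IFA_LOCAL = 1, 2
NLMSG_HDR = struct.Struct("=IHHII")   # len, type, flags, seq, pid
IFADDRMSG = struct.Struct("=BBBBI")   # family, prefixlen, flags, scope, index
RTATTR = struct.Struct("=HH")         # len, type


def align4(n):
    return (n + 3) & ~3


def parse_addr_msg(buf):
    """Parse one IPv4 RTM_NEWADDR/RTM_DELADDR message.

    Returns (msg_type, ifindex, "a.b.c.d/len") or None if not applicable.
    """
    length, mtype, _flags, _seq, _pid = NLMSG_HDR.unpack_from(buf, 0)
    if mtype not in (RTM_NEWADDR, RTM_DELADDR):
        return None
    off = NLMSG_HDR.size
    family, prefixlen, _f, _scope, ifindex = IFADDRMSG.unpack_from(buf, off)
    if family != socket.AF_INET:
        return None
    off += align4(IFADDRMSG.size)

    addr = None
    while off + RTATTR.size <= length:
        rta_len, rta_type = RTATTR.unpack_from(buf, off)
        if rta_len < RTATTR.size:
            break
        payload = buf[off + RTATTR.size:off + rta_len]
        if len(payload) == 4:
            # Prefer IFA_LOCAL; fall back to IFA_ADDRESS if it is all we get.
            if rta_type == IFA_LOCAL:
                addr = socket.inet_ntoa(payload)
            elif rta_type == IFA_ADDRESS and addr is None:
                addr = socket.inet_ntoa(payload)
        off += align4(rta_len)
    if addr is None:
        return None
    return mtype, ifindex, f"{addr}/{prefixlen}"


def mirror(buf, dp_addrs):
    """Apply an address event to dp_addrs (ifindex -> set of prefixes)."""
    parsed = parse_addr_msg(buf)
    if parsed is None:
        return
    mtype, ifindex, prefix = parsed
    addrs = dp_addrs.setdefault(ifindex, set())
    if mtype == RTM_NEWADDR:
        addrs.add(prefix)
    else:
        addrs.discard(prefix)
```

Route and neighbor events would follow the same pattern with their own message structures; in the plugin the interface index would additionally be translated from the tap to the tapped data plane interface.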

The following command instructs the data plane to redirect ARP traffic to the tap interface named vpp0. If the tap does not exist, it is created with the same hardware address as the tapped interface. ARP replies are learned as described above.

$ vppctl enable tap-inject

When configured to do so, packets with a destination IP address matching that of a tapped interface will be directed to the tap. This allows local traffic, e.g. ICMP, to be handled by the control plane. The plugin creates a librtnl/netns object to listen for netlink address and route updates, adding/deleting addresses and routes from interfaces as required.

$ vppctl enable tap-inject

Packets can be directed to a tap interface from the classifier. The following commands direct OSPF (protocol 89) and IGMP (protocol 2) traffic to the tap-inject-neighbor node. Packets whose ingress interface is tapped will be redirected to its tap interface.

$ vppctl classify table mask l3 ip4 proto table table 0

$ vppctl ip route add 224.0.0.4/30 via classify 0

$ vppctl ip route add 224.0.0.22/32 via classify 0

$ vppctl classify session hit-next node tap-inject-neighbor \
    table-index 0 match l3 ip4 proto 89

$ vppctl classify session hit-next node tap-inject-neighbor \
    table-index 0 match l3 ip4 proto 2
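The effect of these rules can be checked with a short Python model: the two routes send matching destinations to classify table 0, and the two sessions match on the IP protocol field. `redirect_to_tap` is a hypothetical helper written for this illustration, not part of VPP.

```python
import ipaddress

# Destinations routed to classify table 0 by the two "ip route add" commands.
CLASSIFIED_PREFIXES = [
    ipaddress.ip_network("224.0.0.4/30"),   # covers 224.0.0.4 to 224.0.0.7
    ipaddress.ip_network("224.0.0.22/32"),
]
# IP protocol numbers matched by the two classify sessions.
CLASSIFIED_PROTOS = {89, 2}  # OSPF, IGMP


def redirect_to_tap(dst, proto):
    """True if a packet with this destination and protocol hits a session."""
    addr = ipaddress.ip_address(dst)
    in_table = any(addr in net for net in CLASSIFIED_PREFIXES)
    return in_table and proto in CLASSIFIED_PROTOS
```

The /30 prefix is what allows a single route to cover both OSPF multicast groups, 224.0.0.5 (all OSPF routers) and 224.0.0.6 (designated routers).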

Example Configuration

The following can be used to configure an OSPFv2 router with a single 40GbE WAN interface connected to another OSPF router and 4x 10GbE LAN interfaces.

ARP resolutions and IPv4 interface addresses and routes are learned from each of the wan and lan taps and applied to the physical interfaces. Local IPv4 ICMP traffic is redirected to the taps. IPv4 traffic with destination 224.0.0.4/30 and protocol 89 (OSPF) or 224.0.0.22/32 and protocol 2 (IGMP), and local OSPF traffic are redirected only for the WAN.

$ vppctl tap inject arp,icmp4,ospfv2 from FortyGigabitEthernet2/0/0 as wan0

$ vppctl tap inject arp,icmp4 from TenGigabitEthernet4/0/0 as lan0

$ vppctl tap inject arp,icmp4 from TenGigabitEthernet4/0/1 as lan1

$ vppctl tap inject arp,icmp4 from TenGigabitEthernet4/0/2 as lan2

$ vppctl tap inject arp,icmp4 from TenGigabitEthernet4/0/3 as lan3

$ cat >./bird.conf<<EOF
log stderr all;
debug protocols all;
router id 1.1.1.1;

protocol kernel {
    import none;
    export all;
};

protocol device { };

protocol ospf {
    area 0 {
        interface "wan0" { ttl security tx only; };
        interface "lan0" { stub; };
        interface "lan1" { stub; };
        interface "lan2" { stub; };
        interface "lan3" { stub; };
    };
};
EOF

$ bird -c ./bird.conf

$ ip addr add 12.34.56.1/30 dev wan0

$ for i in 0 1 2 3; do ip addr add 10$i.0.0.1/8 dev lan$i; done

References

- BIRD Internet Routing Daemon (BIRD): http://bird.network.cz

- Data Plane Development Kit (DPDK): http://dpdk.org

- Netlink: http://man7.org/linux/man-pages/man7/netlink.7.html

- Netlink Route: http://man7.org/linux/man-pages/man7/rtnetlink.7.html

- Socket API: http://man7.org/linux/man-pages/man2/socket.2.html

- Vector Packet Processing (VPP): https://wiki.fd.io/view/VPP

- VPP Netlink Library (librtnl): https://wiki.fd.io/view/VPP_Sandbox