Difference between revisions of "CSIT/AArch64"


GabrielGanne (Talk | contribs) m (→PASS: bad regex: 0/0 is a fail ...) |

Juraj.linkes (Talk | contribs) (→FD.io AArch64 Machines) |

||

| (20 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

| + | == FD.io AArch64 Machines == | ||

| + | |||

| + | This is a copy of the AArch64 server list published at https://wiki.fd.io/view/VPP/AArch64#Machines, with some small additions and modifications. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Platform | ||

| + | ! Role | ||

| + | ! Status | ||

| + | ! Hostname | ||

| + | ! IP | ||

| + | ! IPMI | ||

| + | ! Cores | ||

| + | ! RAM | ||

| + | ! Ethernet | ||

| + | ! Distro | ||

| + | |- | ||

| + | | [https://www.marvell.com/server-processors/thunderx-arm-processors/ Marvell ThunderX] || VPP dev debug server|| Running || vpp-marvell-dev || 10.30.51.38 || 10.30.50.38 || 96 || 128GB || 3x40GbE QSFP+ / 4x10GbE SFP+ || Ubuntu 18.04.4 | ||

| + | |- | ||

| + | | || CI build server|| Running in Nomad || s53-nomad || 10.30.51.39 || 10.30.50.39 || 96 || 128GB || 3x40GbE QSFP+ / 4x10GbE SFP+ || Ubuntu 18.04.4 | ||

| + | |- | ||

| + | | || CI build server|| Running in Nomad || s54-nomad || 10.30.51.40 || 10.30.50.40 || 96 || 128GB || 3x40GbE QSFP+ / 4x10GbE SFP+ || Ubuntu 18.04.4 | ||

| + | |- | ||

| + | | || CI build server || Running in Nomad || s52-nomad || 10.30.51.65 || 10.30.50.65 || 96 || 256GB || 2xQSFP+ / USB Ethernet || Ubuntu 18.04.4 | ||

| + | |- | ||

| + | | || CI build server || Running in Nomad || s51-nomad || 10.30.51.66 || 10.30.50.66 || 96 || 256GB || 2xQSFP+ / USB Ethernet || Ubuntu 18.04.4 | ||

| + | |- | ||

| + | | || CI build server || Running in Nomad || s49-nomad || 10.30.51.67 || 10.30.50.67 || 96 || 256GB || 2xQSFP+ / USB Ethernet || Ubuntu 18.04.4 | ||

| + | |- | ||

| + | | || CI build server || Running in Nomad || s50-nomad || 10.30.51.68 || 10.30.50.68 || 96 || 256GB || 2xQSFP+ / USB Ethernet || Ubuntu 18.04.4 | ||

| + | |- | ||

| + | | [https://www.marvell.com/server-processors/thunderx2-arm-processors/ Marvell ThunderX2] || VPP device server || Running in Nomad || s27-t13-sut1 || 10.30.51.69 || 10.30.50.69 || 112 || 128GB || 3x40GbE QSFP+ XL710-QDA2 || Ubuntu 18.04.2 | ||

| + | |- | ||

| + | | Huawei TaiShan 2280 || CSIT testbed || Running in CI || s17-t33-sut1 || 10.30.51.36 || 10.30.50.36 || 64 || 128GB || 2x10GbE SFP+ Intel X520-DA2 / 2x25GbE SFP28 Mellanox CX-4 || 18.04.1 | ||

| + | |- | ||

| + | | || CSIT testbed || Running in CI || s18-t33-sut2 || 10.30.51.37 || 10.30.50.37 || 64 || 128GB || 2x10GbE SFP+ Intel X520-DA2 / 2x25GbE SFP28 Mellanox CX-4 || 18.04.1 | ||

| + | |- | ||

| + | | [http://macchiatobin.net/ Marvell MACCHIATObin] || N/A || Decommissioned || s20-t34-sut1 || 10.30.51.41 || 10.30.51.49, then connect to /dev/ttyUSB0 || 4 || 16GB || 2x10GbE SFP+ || Ubuntu 16.04.4 | ||

| + | |- | ||

| + | | || N/A || Decommissioned || s21-t34-sut2 || 10.30.51.42 || 10.30.51.49, then connect to /dev/ttyUSB1 || 4 || 16GB || 2x10GbE SFP+ || Ubuntu 16.04.5 | ||

| + | |- | ||

| + | | || N/A || Decommissioned || fdio-mcbin3 || 10.30.51.43 || 10.30.51.49, then connect to /dev/ttyUSB2 || 4 || 16GB || 2x10GbE SFP+ || Ubuntu 16.04.5 | ||

| + | |- | ||

| + | | || Power Cycler || Operational || || 10.30.50.80 || || || || || | ||

| + | |- | ||

| + | | [https://softiron.com/development-tools/overdrive-1000/ SoftIron OverDrive 1000] || N/A || Decommissioned || softiron-1 || 10.30.51.12 || N/A || 4 || 8GB || || openSUSE | ||

| + | |- | ||

| + | | || N/A || Decommissioned || softiron-2 || 10.30.51.13 || N/A || 4 || 8GB || || openSUSE | ||

| + | |- | ||

| + | | || N/A || Decommissioned || softiron-3 || 10.30.51.14 || N/A || 4 || 8GB || || openSUSE | ||

| + | |- | ||

| + | |} | ||

| + | |||

== CSIT TOI == | == CSIT TOI == | ||

https://wiki.fd.io/view/CSIT/TOIs | https://wiki.fd.io/view/CSIT/TOIs | ||

| Line 13: | Line 66: | ||

| warn against virtualenv --system-site-packages || Merged 2017-12-20 || https://gerrit.fd.io/r/#/c/9428/ | | warn against virtualenv --system-site-packages || Merged 2017-12-20 || https://gerrit.fd.io/r/#/c/9428/ | ||

|} | |} | ||

| + | |||

| + | == Functional VM ENV Setup on Ubuntu 16.04 == | ||

| + | * Install QEMU 2.10 | ||

| + | * Install libvirt 3.6.0 | ||

| + | * ISO image for the VM: Ubuntu 16.04.4 | ||

| + | --> http://cdimage.ubuntu.com/releases/16.04/release/ubuntu-16.04.4-server-arm64.iso | ||

| + | * Install virt-manager | ||

| + | | ||

| + | Steps: | ||

| + | * Spawn the VM with virt-install: | ||

| + | >sudo virt-install --name dut1 --ram 4096 --disk path=dut1.img,size=30 --vcpus 2 --os-type linux --os-variant generic --cdrom './ubuntu-16.04.4-server-arm64.iso' --network default | ||

| + | | ||

| + | * Install the HWE kernel | ||

| + | * After the VM starts, stop it and edit its XML to add the interfaces, as described below. | ||

== TEST for 3-nodes topology == | == TEST for 3-nodes topology == | ||

| Line 190: | Line 257: | ||

<type arch='aarch64' machine='virt-2.9'>hvm</type> | <type arch='aarch64' machine='virt-2.9'>hvm</type> | ||

<loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader> | <loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader> | ||

| − | <nvram>/var/lib/libvirt/qemu/nvram/fedora26- | + | <nvram>/var/lib/libvirt/qemu/nvram/fedora26-tg.fd</nvram> |

<boot dev='hd'/> | <boot dev='hd'/> | ||

</os> | </os> | ||

| Line 391: | Line 458: | ||

<type arch='aarch64' machine='virt-2.9'>hvm</type> | <type arch='aarch64' machine='virt-2.9'>hvm</type> | ||

<loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader> | <loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader> | ||

| − | <nvram>/var/lib/libvirt/qemu/nvram/fedora26- | + | <nvram>/var/lib/libvirt/qemu/nvram/fedora26-dut1.fd</nvram> |

<boot dev='hd'/> | <boot dev='hd'/> | ||

</os> | </os> | ||

| Line 575: | Line 642: | ||

<type arch='aarch64' machine='virt-2.9'>hvm</type> | <type arch='aarch64' machine='virt-2.9'>hvm</type> | ||

<loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader> | <loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader> | ||

| − | <nvram>/var/lib/libvirt/qemu/nvram/fedora26- | + | <nvram>/var/lib/libvirt/qemu/nvram/fedora26-dut2.fd</nvram> |

<boot dev='hd'/> | <boot dev='hd'/> | ||

</os> | </os> | ||

| Line 768: | Line 835: | ||

* nested VM '''disabled''' | * nested VM '''disabled''' | ||

| − | '' There are a total of | + | '' There are a total of 347 VPP CSIT Functional tests'' |

| − | + | [https://docs.google.com/spreadsheets/d/1DeJ7KW3DDDw6z3IZSug21uEgcoQmYjzsAFmtNZGO7O8/edit?usp=sharing Global and detailed test report] | |

| − | == | + | == VPP on ARM Board status == |

| − | + | * Binding VPP to a physical interface succeeds. |

| − | + | * A manual VPP test between 2 boards works. |

| − | + | * Binding a VPP interface on a VM works. |


== TODO == | == TODO == | ||

| Line 873: | Line 853: | ||

* patch the bootstrap-* scripts for use on aarch64 | * patch the bootstrap-* scripts for use on aarch64 | ||

* add VPP_REPO_URL* VPP_STABLE_VER* files once vpp CI is set up and pushes to nexus | * add VPP_REPO_URL* VPP_STABLE_VER* files once vpp CI is set up and pushes to nexus | ||

| + | * On Ubuntu 17.10 the TG VM is stable, but the scripts are failing; analyze the issue. | ||

== known issues == | == known issues == | ||

# DPDK does not compile igb_uio on aarch64 (it seems to require a kernel patch introduced in kernel 4.12: f719582435afe9c7985206e42d804ea6aa315d33). It has been re-enabled in DPDK v17.11 (f1810113590373b157ebba555d6b51f38c8ca10f) | # DPDK does not compile igb_uio on aarch64 (it seems to require a kernel patch introduced in kernel 4.12: f719582435afe9c7985206e42d804ea6aa315d33). It has been re-enabled in DPDK v17.11 (f1810113590373b157ebba555d6b51f38c8ca10f) | ||

# [https://jira.fd.io/browse/CSIT-922 aarch64 VM crash at startup] | # [https://jira.fd.io/browse/CSIT-922 aarch64 VM crash at startup] | ||

| + | # Some tests that should raise ''RuntimeError: ICMP echo Rx timeout'' instead fail with the error message described in [https://github.com/secdev/scapy/issues/56 WARNING: __del__: don't know how to close the file descriptor. Bugs ahead ! Please report this bug.]. Upgrading scapy from 2.3.1 to 2.3.3 (pip install -U scapy) fixes the issue. | ||

| + | # QEMU 2.5 has an issue with PCI bus binding with VPP. | ||

| + | # On Ubuntu 16.04 the TG VM is not stable (it crashes or hangs randomly), which in turn causes most test cases to fail. | ||

| + | # On Ubuntu 17.10, one common root cause of failures is: "Socket timeout during execution of command: sw_interface_set_flags sw_if_index 2 admin-up" | ||

| + | |||

| + | == Resolved issues on Ubuntu == | ||

| + | # On the host and TG, installing pcap (part of requirements.txt) sometimes fails. Install libpcap-dev to resolve it.<br /> sudo apt-get install libpcap-dev | ||

| + | # The vagrant config file, which contains the commands for passwordless sudoers, VPP installation and Python package installation, is not used here, so these steps must be done manually on the VMs.<br /> export LANGUAGE=en_US.UTF-8<br /> export LC_ALL=en_US.UTF-8<br /> export LANG=en_US.UTF-8<br /> export LC_CTYPE=en_US.UTF-8 <br /> echo "csit ALL=(root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/csit<br /> sudo chmod 0440 /etc/sudoers.d/csit | ||

| + | # Set up passwordless SSH for all VMs<br /> ssh-keygen -t rsa<br /> ssh csit@192.168.122.154 mkdir -p .ssh<br /> ssh-copy-id -i ~/.ssh/id_rsa.pub csit@192.168.122.154<br /> Repeat for all VMs. | ||

| + | # ssh_connect() failed when copying the CSIT tarball from the host to the VMs<br /> Step 1. Open the paramiko transport.py file, which is in the path below on my system.<br /> /usr/local/lib/python2.7/dist-packages/paramiko<br /> Step 2. In this file, search for "name.endswith" and replace iv with an empty string in the return statement marked below.<br /> ------------<br /> /*Code snippet*/<br /> elif name.endswith("-ctr"):<br /> # CTR modes, we need a counter<br /> counter = Counter.new(nbits=self._cipher_info[name]['block-size'] * 8, initial_value=util.inflate_long(iv, True))<br /> return self._cipher_info[name]['class'].new(key, self._cipher_info[name]['mode'], '', counter) <-------------replace iv with empty string | ||

| + | # Install QEMU 2.10 on Ubuntu 16.04:<br /> cat /etc/apt/sources.list<br /> deb http://us.ports.ubuntu.com/ubuntu-ports/ artful main universe restricted<br /> deb http://us.ports.ubuntu.com/ubuntu-ports/ artful-updates main universe restricted<br /> deb http://ports.ubuntu.com/ubuntu-ports artful-security main universe restricted<br /> ------------<br /> Then install libvirt, QEMU, KVM etc.<br /> sudo apt-get install qemu-kvm libvirt-bin<br /> sudo apt-get install virtinst<br /> sudo apt-get upgrade<br /> ------------<br /> Set up EFI:<br /> sudo apt-get install qemu-system-arm qemu-efi<br /> $ dd if=/dev/zero of=flash0.img bs=1M count=64<br /> $ dd if=/usr/share/qemu-efi/QEMU_EFI.fd of=flash0.img conv=notrunc<br /> $ dd if=/dev/zero of=flash1.img bs=1M count=64<br /> ------------<br /> Issue: search permission for libvirt:<br /> Change /etc/libvirt/qemu.conf to make things work.<br /> Uncomment user/group so that it runs as root.<br /> Then restart libvirtd:<br /> service libvirtd restart | ||
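The three dd commands in the EFI step create two 64 MiB flash images and copy the firmware to the start of the first one without truncating it. A minimal Python sketch of the same steps, assuming nothing beyond the standard library (the helper name `make_flash_images` and the stand-in firmware file in the demo are hypothetical; on a real host the firmware would be /usr/share/qemu-efi/QEMU_EFI.fd):

```python
import os
import tempfile

FLASH_SIZE = 64 * 1024 * 1024  # 64 MiB, matching "bs=1M count=64"


def make_flash_images(firmware_path, out_dir):
    """Create flash0.img and flash1.img in out_dir (hypothetical helper)."""
    flash0 = os.path.join(out_dir, "flash0.img")
    flash1 = os.path.join(out_dir, "flash1.img")
    for path in (flash0, flash1):
        with open(path, "wb") as image:
            # Extend the empty file to 64 MiB; the content reads back as zeros.
            image.truncate(FLASH_SIZE)
    # Overwrite the start of flash0.img and keep its size (like conv=notrunc).
    with open(firmware_path, "rb") as src, open(flash0, "r+b") as dst:
        dst.write(src.read())
    return flash0, flash1


# Demo with a small stand-in firmware file instead of the real QEMU_EFI.fd.
with tempfile.TemporaryDirectory() as workdir:
    fake_firmware = os.path.join(workdir, "QEMU_EFI.fd")
    with open(fake_firmware, "wb") as handle:
        handle.write(b"FAKE-EFI")
    for image_path in make_flash_images(fake_firmware, workdir):
        print(os.path.basename(image_path), os.path.getsize(image_path))
```

The firmware is assumed to be smaller than 64 MiB, as it must be for the dd variant to produce a 64 MiB image as well.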

| + | |||

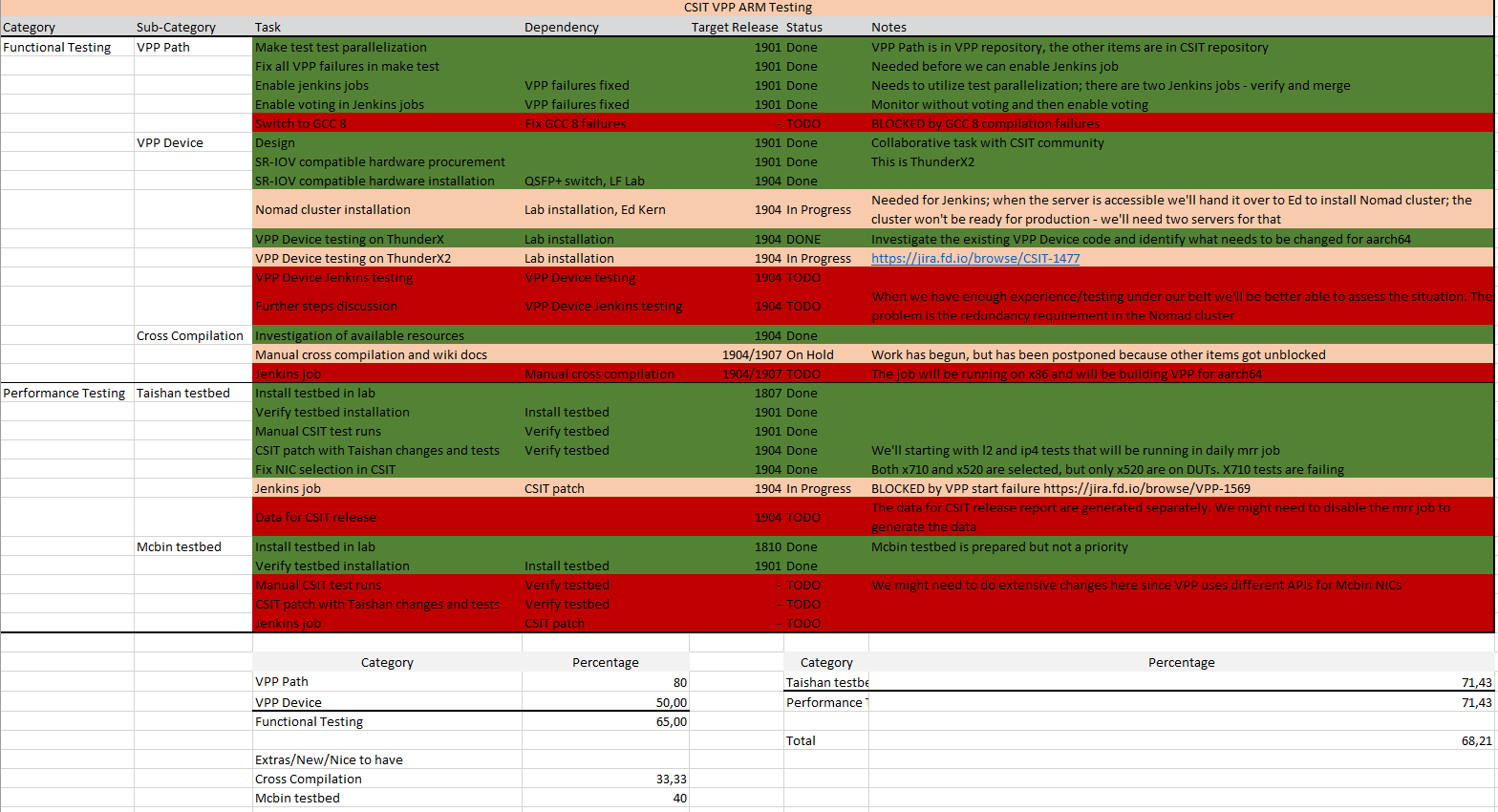

| + | == Status Report == | ||

| + | [[File:CSIT status.PNG]] | ||

Latest revision as of 06:25, 24 September 2020


FD.io AArch64 Machines

This is a copy of the AArch64 server list published at https://wiki.fd.io/view/VPP/AArch64#Machines, with some small additions and modifications.

| Platform | Role | Status | Hostname | IP | IPMI | Cores | RAM | Ethernet | Distro |
|---|---|---|---|---|---|---|---|---|---|
| Marvell ThunderX | VPP dev debug server | Running | vpp-marvell-dev | 10.30.51.38 | 10.30.50.38 | 96 | 128GB | 3x40GbE QSFP+ / 4x10GbE SFP+ | Ubuntu 18.04.4 |
| | CI build server | Running in Nomad | s53-nomad | 10.30.51.39 | 10.30.50.39 | 96 | 128GB | 3x40GbE QSFP+ / 4x10GbE SFP+ | Ubuntu 18.04.4 |
| | CI build server | Running in Nomad | s54-nomad | 10.30.51.40 | 10.30.50.40 | 96 | 128GB | 3x40GbE QSFP+ / 4x10GbE SFP+ | Ubuntu 18.04.4 |
| | CI build server | Running in Nomad | s52-nomad | 10.30.51.65 | 10.30.50.65 | 96 | 256GB | 2xQSFP+ / USB Ethernet | Ubuntu 18.04.4 |
| | CI build server | Running in Nomad | s51-nomad | 10.30.51.66 | 10.30.50.66 | 96 | 256GB | 2xQSFP+ / USB Ethernet | Ubuntu 18.04.4 |
| | CI build server | Running in Nomad | s49-nomad | 10.30.51.67 | 10.30.50.67 | 96 | 256GB | 2xQSFP+ / USB Ethernet | Ubuntu 18.04.4 |
| | CI build server | Running in Nomad | s50-nomad | 10.30.51.68 | 10.30.50.68 | 96 | 256GB | 2xQSFP+ / USB Ethernet | Ubuntu 18.04.4 |
| Marvell ThunderX2 | VPP device server | Running in Nomad | s27-t13-sut1 | 10.30.51.69 | 10.30.50.69 | 112 | 128GB | 3x40GbE QSFP+ XL710-QDA2 | Ubuntu 18.04.2 |
| Huawei TaiShan 2280 | CSIT testbed | Running in CI | s17-t33-sut1 | 10.30.51.36 | 10.30.50.36 | 64 | 128GB | 2x10GbE SFP+ Intel X520-DA2 / 2x25GbE SFP28 Mellanox CX-4 | 18.04.1 |
| | CSIT testbed | Running in CI | s18-t33-sut2 | 10.30.51.37 | 10.30.50.37 | 64 | 128GB | 2x10GbE SFP+ Intel X520-DA2 / 2x25GbE SFP28 Mellanox CX-4 | 18.04.1 |
| Marvell MACCHIATObin | N/A | Decommissioned | s20-t34-sut1 | 10.30.51.41 | 10.30.51.49, then connect to /dev/ttyUSB0 | 4 | 16GB | 2x10GbE SFP+ | Ubuntu 16.04.4 |
| | N/A | Decommissioned | s21-t34-sut2 | 10.30.51.42 | 10.30.51.49, then connect to /dev/ttyUSB1 | 4 | 16GB | 2x10GbE SFP+ | Ubuntu 16.04.5 |
| | N/A | Decommissioned | fdio-mcbin3 | 10.30.51.43 | 10.30.51.49, then connect to /dev/ttyUSB2 | 4 | 16GB | 2x10GbE SFP+ | Ubuntu 16.04.5 |
| | Power Cycler | Operational | | 10.30.50.80 | | | | | |
| SoftIron OverDrive 1000 | N/A | Decommissioned | softiron-1 | 10.30.51.12 | N/A | 4 | 8GB | | openSUSE |
| | N/A | Decommissioned | softiron-2 | 10.30.51.13 | N/A | 4 | 8GB | | openSUSE |
| | N/A | Decommissioned | softiron-3 | 10.30.51.14 | N/A | 4 | 8GB | | openSUSE |

CSIT TOI

https://wiki.fd.io/view/CSIT/TOIs

Recent Patches

| add new topology parameter: arch | Merged 2018-01-10 | https://gerrit.fd.io/r/#/c/9474/ |

| update nodes dependency | Merged 2018-01-02 | https://gerrit.fd.io/r/#/c/9584/ |

| update vagrant to use ubuntu 16.04 images | | https://gerrit.fd.io/r/#/c/8295/ |

| warn against virtualenv --system-site-packages | Merged 2017-12-20 | https://gerrit.fd.io/r/#/c/9428/ |

Functional VM ENV Setup on Ubuntu 16.04

- Install QEMU 2.10

- Install libvirt 3.6.0

- ISO image for the VM: Ubuntu 16.04.4 (http://cdimage.ubuntu.com/releases/16.04/release/ubuntu-16.04.4-server-arm64.iso)

- Install virt-manager

Steps:

- Spawn the VM with virt-install:

sudo virt-install --name dut1 --ram 4096 --disk path=dut1.img,size=30 --vcpus 2 --os-type linux --os-variant generic --cdrom './ubuntu-16.04.4-server-arm64.iso' --network default

- Install the HWE kernel

- After the VM starts, stop it and edit its XML to add the interfaces, as described below.
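Since the 3-node setup needs one VM per node, the virt-install call above can be parameterized by VM name. The following is a rough sketch that only assembles and prints the command lines; it uses exactly the flags shown above. The helper name `virt_install_argv` and the VM names `tg` and `dut2` are assumptions for illustration (only `dut1` appears in the original command).

```python
import shlex

ISO = "./ubuntu-16.04.4-server-arm64.iso"


def virt_install_argv(name, iso=ISO, ram_mib=4096, disk_gib=30, vcpus=2):
    """Return the virt-install argument list for one VM (hypothetical helper)."""
    return [
        "sudo", "virt-install",
        "--name", name,
        "--ram", str(ram_mib),
        "--disk", f"path={name}.img,size={disk_gib}",
        "--vcpus", str(vcpus),
        "--os-type", "linux",
        "--os-variant", "generic",
        "--cdrom", iso,
        "--network", "default",
    ]


# "tg" and "dut2" are assumed names; the page itself only shows "dut1".
for vm_name in ("tg", "dut1", "dut2"):
    print(shlex.join(virt_install_argv(vm_name)))
```

Printing instead of executing keeps the sketch safe to run on any machine; the printed lines can be pasted into a shell on the host.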

TEST for 3-nodes topology

setup

Manually duplicate the topology described in topologies/available/vagrant.yaml with manual libvirt setup.

topology file

# Copyright (c) 2016 Cisco and/or its affiliates.
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at:
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Example file of topology

---
metadata:
  version: 0.1
  schema: # list of schema files against which to validate
    - resources/topology_schemas/3_node_topology.sch.yaml
    - resources/topology_schemas/topology.sch.yaml
  tags: [vagrant, 3-node]

nodes:
  TG:
    type: TG
    host: 192.168.122.18
    arch: aarch64
    port: 22
    username: root
    password: rdcolab
    interfaces:
      port1:
        mac_address: "52:54:00:0f:44:12"
        pci_address: "0000:06:00.0"
        ip4_address: "192.168.122.19"
        link: link0
        driver: virtio-pci
      port2:
        mac_address: "52:54:00:0f:44:13"
        pci_address: "0000:07:00.0"
        ip4_address: "192.168.122.20"
        link: link0
        driver: virtio-pci
      port3:
        mac_address: "52:54:00:0f:44:14"
        pci_address: "0000:08:00.0"
        ip4_address: "192.168.122.21"
        link: link1
        driver: virtio-pci
      port4:
        mac_address: "52:54:00:0f:44:15"
        pci_address: "0000:09:00.0"
        ip4_address: "192.168.122.22"
        link: link4
        driver: virtio-pci
      port5:
        mac_address: "52:54:00:0f:44:16"
        pci_address: "0000:0a:00.0"
        ip4_address: "192.168.122.23"
        link: link2
        driver: virtio-pci
      port6:
        mac_address: "52:54:00:0f:44:17"
        pci_address: "0000:0b:00.0"
        ip4_address: "192.168.122.24"
        link: link5
        driver: virtio-pci
  DUT1:
    type: DUT
    host: 192.168.122.34
    arch: aarch64
    port: 22
    username: root
    password: rdcolab
    interfaces:
      port1:
        mac_address: "52:54:00:0f:44:22"
        pci_address: "0000:06:00.0"
        ip4_address: "192.168.122.35"
        link: link1
        driver: uio_pci_generic
      port2:
        mac_address: "52:54:00:0f:44:23"
        pci_address: "0000:07:00.0"
        ip4_address: "192.168.122.36"
        link: link4
        driver: uio_pci_generic
      port3:
        mac_address: "52:54:00:0f:44:24"
        pci_address: "0000:08:00.0"
        ip4_address: "192.168.122.37"
        link: link3
        driver: uio_pci_generic
      port4:
        mac_address: "52:54:00:0f:44:25"
        pci_address: "0000:09:00.0"
        ip4_address: "192.168.122.38"
        link: link6
        driver: uio_pci_generic
  DUT2:
    type: DUT
    host: 192.168.122.50
    arch: aarch64
    port: 22
    username: root
    password: rdcolab
    interfaces:
      port1:
        mac_address: "52:54:00:0f:44:32"
        pci_address: "0000:06:00.0"
        ip4_address: "192.168.122.51"
        link: link2
        driver: uio_pci_generic
      port2:
        mac_address: "52:54:00:0f:44:33"
        pci_address: "0000:07:00.0"
        ip4_address: "192.168.122.52"
        link: link5
        driver: uio_pci_generic
      port3:
        mac_address: "52:54:00:0f:44:34"
        pci_address: "0000:08:00.0"
        ip4_address: "192.168.122.53"
        link: link3
        driver: uio_pci_generic
      port4:
        mac_address: "52:54:00:0f:44:35"
        pci_address: "0000:09:00.0"
        ip4_address: "192.168.122.54"
        link: link6
        driver: uio_pci_generic
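Because the topology is duplicated by hand, a quick consistency check on the `link` fields can catch wiring mistakes before any test runs. The sketch below groups ports by link name and reports links that do not have exactly two endpoints. The two-endpoint rule is an assumption about how these point-to-point links are meant to be used, and the dictionary is a trimmed, hand-copied subset of the topology file (node, port and link names only), not a YAML parser.

```python
from collections import defaultdict

# Trimmed copy of the topology file: node -> port -> link name.
TOPOLOGY_LINKS = {
    "TG": {"port1": "link0", "port2": "link0", "port3": "link1",
           "port4": "link4", "port5": "link2", "port6": "link5"},
    "DUT1": {"port1": "link1", "port2": "link4",
             "port3": "link3", "port4": "link6"},
    "DUT2": {"port1": "link2", "port2": "link5",
             "port3": "link3", "port4": "link6"},
}


def endpoints_by_link(nodes):
    """Map each link name to the list of (node, port) pairs attached to it."""
    links = defaultdict(list)
    for node, ports in nodes.items():
        for port, link in ports.items():
            links[link].append((node, port))
    return dict(links)


def dangling_links(nodes):
    """Return link names that do not have exactly two endpoints (assumed rule)."""
    by_link = endpoints_by_link(nodes)
    return sorted(name for name, ends in by_link.items() if len(ends) != 2)


links = endpoints_by_link(TOPOLOGY_LINKS)
for link_name in sorted(links):
    print(link_name, links[link_name])
print("dangling:", dangling_links(TOPOLOGY_LINKS))
```

With the values from the topology file, every link from link0 to link6 has two endpoints, so the dangling list is empty.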

networks

Create 6 links following the schema:

<network connections='2'>
  <name>link0</name>
  <uuid>1949aa32-43d5-46f2-b633-4aa6c2c17e59</uuid>
  <forward mode='nat'>
    <nat>
      <port start='1024' end='65535'/>
    </nat>
  </forward>
  <bridge name='virbr8' stp='off' delay='0'/>
  <mac address='52:54:00:76:75:30'/>
  <ip address='192.168.121.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.121.2' end='192.168.121.254'/>
    </dhcp>
  </ip>
</network>
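Writing one such definition per link by hand is repetitive, so the definitions could be generated instead. The sketch below builds a `<network>` element per link with the standard library. It is an illustration under assumptions: the helper name `link_network_xml` and the subnet numbering (192.168.130.x upward) are hypothetical, the bridge names follow the ones referenced in the TG and DUT domain files (virbr8 for link0, virbrN for linkN otherwise), and `<uuid>` and `<mac>` are left out on the assumption that libvirt assigns them when the network is defined. The loop covers link0 through link6, the link names used in the topology file.

```python
import xml.etree.ElementTree as ET


def link_network_xml(index, subnet_prefix):
    """Build a libvirt <network> definition for link<index> (hypothetical helper)."""
    # Bridge names as referenced by the domain files: link0 -> virbr8, linkN -> virbrN.
    bridge = "virbr8" if index == 0 else f"virbr{index}"
    net = ET.Element("network")
    ET.SubElement(net, "name").text = f"link{index}"
    forward = ET.SubElement(net, "forward", mode="nat")
    nat = ET.SubElement(forward, "nat")
    ET.SubElement(nat, "port", start="1024", end="65535")
    ET.SubElement(net, "bridge", name=bridge, stp="off", delay="0")
    ip = ET.SubElement(net, "ip", address=f"{subnet_prefix}.1",
                       netmask="255.255.255.0")
    dhcp = ET.SubElement(ip, "dhcp")
    ET.SubElement(dhcp, "range", start=f"{subnet_prefix}.2",
                  end=f"{subnet_prefix}.254")
    return ET.tostring(net, encoding="unicode")


# Subnets 192.168.130.x .. 192.168.136.x are made-up values for illustration.
for link_index in range(7):
    print(link_network_xml(link_index, f"192.168.{130 + link_index}"))
```

Each printed document could be saved to a file and loaded with `virsh net-define`; the subnets should be adjusted so they do not overlap with networks already present on the host.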

TG file

<domain type='kvm' id='188'>
  <name>fedora26-tg</name>
  <uuid>e8f7cd08-dc6f-4b5c-ba6a-d793fb77ed11</uuid>
  <memory unit='KiB'>4194304</memory>
  <currentMemory unit='KiB'>4194304</currentMemory>
  <vcpu placement='static'>2</vcpu>
  <resource>
    <partition>/machine</partition>
  </resource>
  <os>
    <type arch='aarch64' machine='virt-2.9'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader>
    <nvram>/var/lib/libvirt/qemu/nvram/fedora26-tg.fd</nvram>
    <boot dev='hd'/>
  </os>
  <features>
    <gic version='2'/>
  </features>
  <cpu mode='host-passthrough' check='none'/>
  <clock offset='utc'/>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <devices>
    <emulator>/usr/bin/qemu-system-aarch64</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2'/>
      <source file='/var/kvm/images/fedora26-tg.img'/>
      <backingStore/>
      <target dev='sda' bus='scsi'/>
      <alias name='scsi0-0-0-0'/>
      <address type='drive' controller='0' bus='0' target='0' unit='0'/>
    </disk>
    <controller type='scsi' index='0' model='virtio-scsi'>
      <alias name='scsi0'/>
      <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>
    </controller>
    <controller type='pci' index='0' model='pcie-root'>
      <alias name='pcie.0'/>
    </controller>
    <controller type='pci' index='1' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='1' port='0x8'/>
      <alias name='pci.1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='2' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='2' port='0x9'/>
      <alias name='pci.2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
    </controller>
    <controller type='pci' index='3' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='3' port='0xa'/>
      <alias name='pci.3'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>
    </controller>
    <controller type='pci' index='4' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='4' port='0xb'/>
      <alias name='pci.4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/>
    </controller>
    <controller type='pci' index='5' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='5' port='0xc'/>
      <alias name='pci.5'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/>
    </controller>
    <controller type='pci' index='6' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='6' port='0xd'/>
      <alias name='pci.6'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/>
    </controller>
    <controller type='pci' index='7' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='7' port='0xe'/>
      <alias name='pci.7'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x6'/>
    </controller>
    <controller type='pci' index='8' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='8' port='0xf'/>
      <alias name='pci.8'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x7'/>
    </controller>
    <controller type='pci' index='9' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='9' port='0x10'/>
      <alias name='pci.9'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='10' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='10' port='0x11'/>
      <alias name='pci.10'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x1'/>
    </controller>
    <controller type='pci' index='11' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='11' port='0x12'/>
      <alias name='pci.11'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x2'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <alias name='virtio-serial0'/>
      <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </controller>
    <interface type='network'>
      <mac address='52:54:00:0f:44:11'/>
      <source network='default' bridge='virbr0'/>
      <target dev='vnet0'/>
      <model type='virtio'/>
      <alias name='net0'/>
      <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:12'/>
      <source network='link0' bridge='virbr8'/>
      <target dev='vnet1'/>
      <model type='virtio'/>
      <alias name='net1'/>
      <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:13'/>
      <source network='link0' bridge='virbr8'/>
      <target dev='vnet2'/>
      <model type='virtio'/>
      <alias name='net2'/>
      <address type='pci' domain='0x0000' bus='0x07' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:14'/>
      <source network='link1' bridge='virbr1'/>
      <target dev='vnet3'/>
      <model type='virtio'/>
      <alias name='net3'/>
      <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:15'/>
      <source network='link4' bridge='virbr4'/>
      <target dev='vnet4'/>
      <model type='virtio'/>
      <alias name='net4'/>
      <address type='pci' domain='0x0000' bus='0x09' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:16'/>
      <source network='link2' bridge='virbr2'/>
      <target dev='vnet5'/>
      <model type='virtio'/>
      <alias name='net5'/>
      <address type='pci' domain='0x0000' bus='0x0a' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:17'/>
      <source network='link5' bridge='virbr5'/>
      <target dev='vnet6'/>
      <model type='virtio'/>
      <alias name='net6'/>
      <address type='pci' domain='0x0000' bus='0x0b' slot='0x00' function='0x0'/>
    </interface>
    <serial type='pty'>
      <source path='/dev/pts/1'/>
      <target port='0'/>
      <alias name='serial0'/>
    </serial>
    <console type='pty' tty='/dev/pts/1'>
      <source path='/dev/pts/1'/>
      <target type='serial' port='0'/>
      <alias name='serial0'/>
    </console>
    <channel type='unix'>
      <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-188-fedora26-tg/org.qemu.guest_agent.0'/>
      <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/>
      <alias name='channel0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <rng model='virtio'>
      <backend model='random'>/dev/urandom</backend>
      <alias name='rng0'/>
      <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>
    </rng>
  </devices>
  <seclabel type='dynamic' model='selinux' relabel='yes'>
    <label>system_u:system_r:svirt_t:s0:c24,c908</label>
    <imagelabel>system_u:object_r:svirt_image_t:s0:c24,c908</imagelabel>
  </seclabel>
  <seclabel type='dynamic' model='dac' relabel='yes'>
    <label>+0:+0</label>
    <imagelabel>+0:+0</imagelabel>
  </seclabel>
</domain>
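The MAC and PCI addresses in the topology file have to agree with the `<interface>` elements of the domain definition. One way to cross-check them, sketched here with the standard library only, is to pull the MAC, the libvirt network and the PCI address out of each interface and format the address the way the topology file writes it (for example 0000:06:00.0). The function name `interface_table` is hypothetical, and the embedded XML is a two-interface excerpt of the TG definition rather than the full file. The sketch also assumes the guest sees each device at the address given in the libvirt XML, which is what the topology file above reflects.

```python
import xml.etree.ElementTree as ET

# Two-interface excerpt of the TG domain definition.
DOMAIN_XML = """
<domain type='kvm'>
  <devices>
    <interface type='network'>
      <mac address='52:54:00:0f:44:12'/>
      <source network='link0' bridge='virbr8'/>
      <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:14'/>
      <source network='link1' bridge='virbr1'/>
      <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
    </interface>
  </devices>
</domain>
"""


def interface_table(domain_xml):
    """Return (mac, network, pci_address) for every <interface> in the domain."""
    rows = []
    for iface in ET.fromstring(domain_xml).iter("interface"):
        addr = iface.find("address").attrib
        # Format as domain:bus:slot.function, matching the topology file.
        pci = "{:04x}:{:02x}:{:02x}.{:x}".format(
            int(addr["domain"], 16), int(addr["bus"], 16),
            int(addr["slot"], 16), int(addr["function"], 16))
        rows.append((iface.find("mac").get("address"),
                     iface.find("source").get("network"), pci))
    return rows


for mac, network, pci in interface_table(DOMAIN_XML):
    print(mac, network, pci)
```

The same function can be pointed at the output of `virsh dumpxml` for each VM and compared against the `mac_address`, `link` and `pci_address` fields of the matching node.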

DUT1 file

<domain type='kvm' id='189'>
  <name>fedora26-dut-1</name>
  <uuid>e8f7cd08-dc6f-4b5c-ba6a-d793fb77ed12</uuid>
  <memory unit='KiB'>4194304</memory>
  <currentMemory unit='KiB'>4194304</currentMemory>
  <vcpu placement='static'>2</vcpu>
  <resource>
    <partition>/machine</partition>
  </resource>
  <os>
    <type arch='aarch64' machine='virt-2.9'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader>
    <nvram>/var/lib/libvirt/qemu/nvram/fedora26-dut1.fd</nvram>
    <boot dev='hd'/>
  </os>
  <features>
    <gic version='2'/>
  </features>
  <cpu mode='host-passthrough' check='none'/>
  <clock offset='utc'/>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <devices>
    <emulator>/usr/bin/qemu-system-aarch64</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2'/>
      <source file='/var/kvm/images/fedora26-dut-1.img'/>
      <backingStore/>
      <target dev='sda' bus='scsi'/>
      <alias name='scsi0-0-0-0'/>
      <address type='drive' controller='0' bus='0' target='0' unit='0'/>
    </disk>
    <disk type='file' device='cdrom'>
      <backingStore/>
      <target dev='sdb' bus='scsi'/>
      <readonly/>
      <alias name='scsi0-0-0-1'/>
      <address type='drive' controller='0' bus='0' target='0' unit='1'/>
    </disk>
    <controller type='usb' index='0' model='nec-xhci' ports='8'>
      <alias name='usb'/>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
    </controller>
    <controller type='scsi' index='0' model='virtio-scsi'>
      <alias name='scsi0'/>
      <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>
    </controller>
    <controller type='pci' index='0' model='pcie-root'>
      <alias name='pcie.0'/>
    </controller>
    <controller type='pci' index='1' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='1' port='0x8'/>
      <alias name='pci.1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='2' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='2' port='0x9'/>
      <alias name='pci.2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
    </controller>
    <controller type='pci' index='3' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='3' port='0xa'/>
      <alias name='pci.3'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>
    </controller>
    <controller type='pci' index='4' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='4' port='0xb'/>
      <alias name='pci.4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/>
    </controller>
    <controller type='pci' index='5' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='5' port='0xc'/>
      <alias name='pci.5'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/>
    </controller>
    <controller type='pci' index='6' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='6' port='0xd'/>
      <alias name='pci.6'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/>
    </controller>
    <controller type='pci' index='7' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='7' port='0xe'/>
      <alias name='pci.7'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x6'/>
    </controller>
    <controller type='pci' index='8' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='8' port='0xf'/>
      <alias name='pci.8'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x7'/>
    </controller>
    <controller type='pci' index='9' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='9' port='0x10'/>
      <alias name='pci.9'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <alias name='virtio-serial0'/>
      <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </controller>
    <interface type='network'>
      <mac address='52:54:00:0f:44:21'/>
      <source network='default' bridge='virbr0'/>
      <target dev='vnet7'/>
      <model type='virtio'/>
      <alias name='net0'/>
      <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:22'/>
      <source network='link1' bridge='virbr1'/>
      <target dev='vnet8'/>
      <model type='virtio'/>
      <alias name='net1'/>
      <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:23'/>
      <source network='link4' bridge='virbr4'/>
      <target dev='vnet9'/>
      <model type='virtio'/>
      <alias name='net2'/>
      <address type='pci' domain='0x0000' bus='0x07' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:24'/>
      <source network='link3' bridge='virbr3'/>
      <target dev='vnet10'/>
      <model type='virtio'/>
      <alias name='net3'/>
      <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:25'/>
      <source network='link6' bridge='virbr6'/>
      <target dev='vnet11'/>
      <model type='virtio'/>
      <alias name='net4'/>
      <address type='pci' domain='0x0000' bus='0x09' slot='0x00' function='0x0'/>
    </interface>
    <serial type='pty'>
      <source path='/dev/pts/4'/>
      <target port='0'/>
      <alias name='serial0'/>
    </serial>
    <console type='pty' tty='/dev/pts/4'>
      <source path='/dev/pts/4'/>
      <target type='serial' port='0'/>
      <alias name='serial0'/>
    </console>
    <channel type='unix'>
      <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-189-fedora26-dut-1/org.qemu.guest_agent.0'/>
      <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/>
      <alias name='channel0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <rng model='virtio'>
      <backend model='random'>/dev/urandom</backend>
      <alias name='rng0'/>
      <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>
    </rng>
  </devices>
  <seclabel type='dynamic' model='selinux' relabel='yes'>
    <label>system_u:system_r:svirt_t:s0:c180,c713</label>
    <imagelabel>system_u:object_r:svirt_image_t:s0:c180,c713</imagelabel>
  </seclabel>
  <seclabel type='dynamic' model='dac' relabel='yes'>
    <label>+0:+0</label>
    <imagelabel>+0:+0</imagelabel>
  </seclabel>
</domain>

DUT2 file

<domain type='kvm' id='190'> <name>fedora26-dut-2</name> <uuid>e8f7cd08-dc6f-4b5c-ba6a-d793fb77ed13</uuid> <memory unit='KiB'>4194304</memory> <currentMemory unit='KiB'>4194304</currentMemory> <vcpu placement='static'>2</vcpu> <resource> <partition>/machine</partition> </resource> <os> <type arch='aarch64' machine='virt-2.9'>hvm</type> <loader readonly='yes' type='pflash'>/usr/share/edk2/aarch64/QEMU_EFI-pflash.raw</loader> <nvram>/var/lib/libvirt/qemu/nvram/fedora26-dut2.fd</nvram> <boot dev='hd'/> </os> <features> <gic version='2'/> </features> <cpu mode='host-passthrough' check='none'/> <clock offset='utc'/> <on_poweroff>destroy</on_poweroff> <on_reboot>restart</on_reboot> <on_crash>destroy</on_crash> <devices> <emulator>/usr/bin/qemu-system-aarch64</emulator> <disk type='file' device='disk'> <driver name='qemu' type='qcow2'/> <source file='/var/kvm/images/fedora26-dut-2.img'/> <backingStore/> <target dev='sda' bus='scsi'/> <alias name='scsi0-0-0-0'/> <address type='drive' controller='0' bus='0' target='0' unit='0'/> </disk> <disk type='file' device='cdrom'> <backingStore/> <target dev='sdb' bus='scsi'/> <readonly/> <alias name='scsi0-0-0-1'/> <address type='drive' controller='0' bus='0' target='0' unit='1'/> </disk> <controller type='usb' index='0' model='nec-xhci' ports='8'> <alias name='usb'/> <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/> </controller> <controller type='scsi' index='0' model='virtio-scsi'> <alias name='scsi0'/> <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/> </controller> <controller type='pci' index='0' model='pcie-root'> <alias name='pcie.0'/> </controller> <controller type='pci' index='1' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='1' port='0x8'/> <alias name='pci.1'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/> </controller> <controller type='pci' index='2' model='pcie-root-port'> <model 
name='pcie-root-port'/> <target chassis='2' port='0x9'/> <alias name='pci.2'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/> </controller> <controller type='pci' index='3' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='3' port='0xa'/> <alias name='pci.3'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/> </controller> <controller type='pci' index='4' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='4' port='0xb'/> <alias name='pci.4'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/> </controller> <controller type='pci' index='5' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='5' port='0xc'/> <alias name='pci.5'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/> </controller> <controller type='pci' index='6' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='6' port='0xd'/> <alias name='pci.6'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/> </controller> <controller type='pci' index='7' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='7' port='0xe'/> <alias name='pci.7'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x6'/> </controller> <controller type='pci' index='8' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='8' port='0xf'/> <alias name='pci.8'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x7'/> </controller> <controller type='pci' index='9' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='9' port='0x10'/> <alias name='pci.9'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/> </controller> <controller type='virtio-serial' index='0'> <alias name='virtio-serial0'/> <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0'/> </controller> <interface type='network'> <mac 
address='52:54:00:0f:44:31'/> <source network='default' bridge='virbr0'/> <target dev='vnet12'/> <model type='virtio'/> <alias name='net0'/> <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/> </interface> <interface type='network'> <mac address='52:54:00:0f:44:32'/> <source network='link2' bridge='virbr2'/> <target dev='vnet13'/> <model type='virtio'/> <alias name='net1'/> <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/> </interface> <interface type='network'> <mac address='52:54:00:0f:44:33'/> <source network='link5' bridge='virbr5'/> <target dev='vnet14'/> <model type='virtio'/> <alias name='net2'/> <address type='pci' domain='0x0000' bus='0x07' slot='0x00' function='0x0'/> </interface> <interface type='network'> <mac address='52:54:00:0f:44:34'/> <source network='link3' bridge='virbr3'/> <target dev='vnet15'/> <model type='virtio'/> <alias name='net3'/> <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x0'/> </interface> <interface type='network'> <mac address='52:54:00:0f:44:35'/> <source network='link6' bridge='virbr6'/> <target dev='vnet16'/> <model type='virtio'/> <alias name='net4'/> <address type='pci' domain='0x0000' bus='0x09' slot='0x00' function='0x0'/> </interface> <serial type='pty'> <source path='/dev/pts/8'/> <target port='0'/> <alias name='serial0'/> </serial> <console type='pty' tty='/dev/pts/8'> <source path='/dev/pts/8'/> <target type='serial' port='0'/> <alias name='serial0'/> </console> <channel type='unix'> <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-190-fedora26-dut-2/org.qemu.guest_agent.0'/> <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/> <alias name='channel0'/> <address type='virtio-serial' controller='0' bus='0' port='1'/> </channel> <rng model='virtio'> <backend model='random'>/dev/urandom</backend> <alias name='rng0'/> <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/> </rng> </devices> 
<seclabel type='dynamic' model='selinux' relabel='yes'> <label>system_u:system_r:svirt_t:s0:c83,c262</label> <imagelabel>system_u:object_r:svirt_image_t:s0:c83,c262</imagelabel> </seclabel> <seclabel type='dynamic' model='dac' relabel='yes'> <label>+0:+0</label> <imagelabel>+0:+0</imagelabel> </seclabel> </domain>
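The DUT dumps above differ mainly in MAC addresses and in which libvirt networks each interface is attached to. A minimal sketch of how that wiring could be extracted for comparison, using only the Python standard library. The function name `list_interfaces` and the abbreviated sample XML are illustrative assumptions and not part of CSIT; a real dump would be read from the output of `virsh dumpxml`.

```python
import xml.etree.ElementTree as ET

# Abbreviated, hypothetical sample in the same shape as the dumps above.
SAMPLE_DOMAIN_XML = """
<domain type='kvm'>
  <name>fedora26-dut-2</name>
  <devices>
    <interface type='network'>
      <mac address='52:54:00:0f:44:31'/>
      <source network='default' bridge='virbr0'/>
      <model type='virtio'/>
    </interface>
    <interface type='network'>
      <mac address='52:54:00:0f:44:32'/>
      <source network='link2' bridge='virbr2'/>
      <model type='virtio'/>
    </interface>
  </devices>
</domain>
"""


def list_interfaces(domain_xml):
    """Return a (mac, network, bridge) tuple for each <interface> element."""
    root = ET.fromstring(domain_xml)
    result = []
    for iface in root.findall("./devices/interface"):
        mac = iface.find("mac")
        source = iface.find("source")
        result.append((
            mac.get("address") if mac is not None else None,
            source.get("network") if source is not None else None,
            source.get("bridge") if source is not None else None,
        ))
    return result
```

Calling `list_interfaces()` on the DUT1 and DUT2 dumps and comparing the results shows at a glance which links are shared between the two VMs.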

Running the tests

- From the csit repository, create a virtualenv following the csit README:

- virtualenv env

- source env/bin/activate

- pip install -r requirements.txt

- Export PATH so that it includes vpp_api_test, and export PYTHONPATH.

Command line to run only the functional tests, with debug logs. The folder passed to pybot must contain an "__init__.robot" file.

pybot --debugfile /tmp/debugfile --exitonerror -L TRACE -v TOPOLOGY_PATH:topologies/enabled/topology.yaml tests/vpp/func/
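The requirement that "__init__.robot" be present can be checked before pybot is launched. The following is only a sketch under stated assumptions: it targets Python 3, the helper name `build_pybot_command` is hypothetical, and it only assembles the same argument list as the command above without running it.

```python
import os
import tempfile


def build_pybot_command(suite_dir, topology_path, debugfile="/tmp/debugfile"):
    """Build the pybot argument list, refusing suites without __init__.robot."""
    init_file = os.path.join(suite_dir, "__init__.robot")
    if not os.path.isfile(init_file):
        raise FileNotFoundError("missing " + init_file)
    return [
        "pybot",
        "--debugfile", debugfile,
        "--exitonerror",
        "-L", "TRACE",
        "-v", "TOPOLOGY_PATH:" + topology_path,
        suite_dir,
    ]
```

The returned list can be passed to `subprocess.call()` from the activated virtualenv, for example `build_pybot_command("tests/vpp/func/", "topologies/enabled/topology.yaml")`.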

Functional test

Tests status:

- CSIT: 54ad6efd342695d0a7dad5380cc989a8d846f518

- 3-Nodes topology

- On VM only

- honeycomb disabled

- nested VM disabled

There are a total of 347 VPP CSIT Functional tests

Global and detailed test report

VPP on ARM Board status

- Binding VPP to a physical interface succeeds.

- A manual VPP test between 2 boards is OK.

- Binding a VPP interface on a VM is OK.

TODO

- identify ARM64 hardware to replicate CSIT repo

- make Jira EPIC for CSIT func

- make Jira EPIC for CSIT performance

- disk-image-builder scripts

- patch the bootstrap-* scripts for use on aarch64

- add VPP_REPO_URL* VPP_STABLE_VER* files once vpp CI is set up and pushes to nexus

- On Ubuntu 17.10 the TG VM is stable, but the scripts are failing; analyze the issue.

Known issues

- DPDK does not compile igb_uio on aarch64 (it seems to require a kernel patch introduced in kernel 4.12: f719582435afe9c7985206e42d804ea6aa315d33). It has been re-enabled in DPDK v17.11 (f1810113590373b157ebba555d6b51f38c8ca10f).

- aarch64 VM crash at startup

- Some tests that should raise "RuntimeError: ICMP echo Rx timeout" instead fail with the error message "WARNING: __del__: don't know how to close the file descriptor. Bugs ahead ! Please report this bug." Upgrading scapy from 2.3.1 to 2.3.3 (pip install -U scapy) fixes the issue.

- QEMU 2.5 has an issue with PCI bus binding with VPP.

- On Ubuntu 16.04 the TG VM is not stable (it crashes or hangs randomly), which in turn causes most test cases to fail.

- In Ubuntu 17.10, one common root cause for failures is: "Socket timeout during execution of command: sw_interface_set_flags sw_if_index 2 admin-up"
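The scapy item in the list above depends on the installed version (2.3.1 affected, 2.3.3 fixed). A small sketch of a version check that could run before the tests; the helper name `scapy_is_new_enough` is hypothetical, and the version string is assumed to come from the installed package (for instance from `pip show scapy`).

```python
import re


def scapy_is_new_enough(version, minimum=(2, 3, 3)):
    """Compare the leading numeric components of a version string to a minimum."""
    parts = tuple(int(p) for p in re.findall(r"\d+", version)[:3])
    return parts >= minimum
```

If the check returns False, `pip install -U scapy` inside the virtualenv is the fix described in the list.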

Resolved issues on Ubuntu

- On the host and TG, the pcap install (part of requirements.txt) sometimes fails. Install libpcap-dev to resolve it.

sudo apt-get install libpcap-dev

- The vagrant config file, which contains the commands for passwordless sudoers, VPP install, and Python package install, is not used, so these steps need to be done manually on the VMs.

export LANGUAGE=en_US.UTF-8

export LC_ALL=en_US.UTF-8

export LANG=en_US.UTF-8

export LC_CTYPE=en_US.UTF-8

echo "csit ALL=(root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/csit

sudo chmod 0440 /etc/sudoers.d/csit

- Remove the ssh password prompt for all VMs:

ssh-keygen -t rsa

ssh csit@192.168.122.154 mkdir -p .ssh

ssh-copy-id -i ~/.ssh/id_rsa.pub csit@192.168.122.154

Repeat for all VMs.

- ssh_connect() failed when copying the csit tarball from the host to the VMs:

Step 1. Open paramiko's transport.py file, which for me is in the path below.

/usr/local/lib/python2.7/dist-packages/paramiko

Step 2. In this file, search for "name.endswith" and replace iv with an empty string.

------------

/*Code snippet*/

elif name.endswith("-ctr"):

    # CTR modes, we need a counter

    counter = Counter.new(nbits=self._cipher_info[name]['block-size'] * 8, initial_value=util.inflate_long(iv, True))

    # iv replaced with an empty string

    return self._cipher_info[name]['class'].new(key, self._cipher_info[name]['mode'], '', counter)

- Install qemu 2.10 on Ubuntu 16.04:

cat /etc/apt/sources.list

deb http://us.ports.ubuntu.com/ubuntu-ports/ artful main universe restricted

deb http://us.ports.ubuntu.com/ubuntu-ports/ artful-updates main universe restricted

deb http://ports.ubuntu.com/ubuntu-ports artful-security main universe restricted

------------

Then install libvirt, qemu, kvm, etc.

sudo apt-get install qemu-kvm libvirt-bin

sudo apt-get install virtinst

sudo apt-get upgrade

------------

Set up EFI:

sudo apt-get install qemu-system-arm qemu-efi

$ dd if=/dev/zero of=flash0.img bs=1M count=64

$ dd if=/usr/share/qemu-efi/QEMU_EFI.fd of=flash0.img conv=notrunc

$ dd if=/dev/zero of=flash1.img bs=1M count=64
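The dd commands above build two 64 MiB flash images: flash0.img holds the EFI firmware padded with zeros, and flash1.img is left empty. A sketch of the same steps in Python, assuming Python 3 and a platform where extending a file fills it with zeros (true on Linux); the helper name `make_flash_image` is hypothetical.

```python
import os
import tempfile

FLASH_SIZE = 64 * 1024 * 1024  # 64 MiB, matching bs=1M count=64


def make_flash_image(path, firmware_path=None, size=FLASH_SIZE):
    """Create a zero-filled image and optionally copy firmware to its start."""
    with open(path, "wb") as img:
        # Extend the empty file to the full size (zero-filled on Linux).
        img.truncate(size)
        if firmware_path is not None:
            with open(firmware_path, "rb") as fw:
                data = fw.read()
            if len(data) > size:
                raise ValueError("firmware is larger than the flash image")
            # Overwrite the beginning only, like dd with conv=notrunc.
            img.seek(0)
            img.write(data)
```

With the paths used above, the equivalent calls would be `make_flash_image("flash0.img", "/usr/share/qemu-efi/QEMU_EFI.fd")` and `make_flash_image("flash1.img")`.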

------------

Issue: search permission error from libvirt:

Change /etc/libvirt/qemu.conf to make things work.

Uncomment the user and group settings so that QEMU runs as root.

Then restart libvirtd:

service libvirtd restart
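The qemu.conf change above can also be scripted. This sketch assumes the stock file contains commented-out lines of the form `#user = "root"` and `#group = "root"`, which should be verified against the local file before use; the helper name `uncomment_user_group` is hypothetical. It works on the file contents as a string and leaves indented example lines inside comments untouched.

```python
import re

# Matches only lines that start with '#' immediately followed by user/group.
_SETTING = re.compile(r'^#((?:user|group)\s*=\s*"[^"]*")[ \t]*$', re.MULTILINE)


def uncomment_user_group(conf_text):
    """Return conf_text with the user and group settings uncommented."""
    return _SETTING.sub(r"\1", conf_text)
```

The result would then be written back to /etc/libvirt/qemu.conf as root, followed by the libvirtd restart shown above.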