VPP/Using VPP as a VXLAN Tunnel Terminator

Revision as of 19:14, 20 May 2016

This page describes the support in the VPP platform for a Virtual eXtensible LAN (VXLAN).

Contents

- 1 Introduction

- 2 Features

- 3 Architecture

- 4 Configuration and Verification

- 4.1 Configuration Sequence

- 4.1.1 Bridge Domain Creation

- 4.1.2 VXLAN Tunnel Creation and Setup

- 4.1.3 VXLAN Tunnel Tear-Down and Deletion

- 4.1.4 BVI Interface Creation and Setup

- 4.1.5 BVI Interface Tear-Down and Deletion

- 4.1.6 Example Config of BD with BVI/VXLAN-Tunnel/Ethernet-Port

- 4.1.7 Enable/Disable ARP termination of a BD

- 4.1.8 Add/Delete IP to MAC Entry to a BD for ARP Termination

- 4.2 Show Command Output for VXLAN related Information

- 4.3 Packet Trace

- 5 Restrictions

Introduction

A VXLAN provides the features needed to allow L2 bridge domains (BDs) to span multiple servers. This is done by building an L2 overlay on top of an L3 network underlay using VXLAN tunnels.

This makes it possible for servers to be co-located in the same data center or be separated geographically as long as they are reachable through the underlay L3 network.

You can refer to this kind of L2 overlay bridge domain as a VXLAN (Virtual eXtensible LAN) segment.

Features

This implementation of support for VXLAN in the VPP engine includes the following features:

- Makes use of the existing VPP L2 bridging and cross-connect functionality.

- Allows creation of VXLAN tunnels as per RFC 7348 to extend an L2 network over an L3 underlay.

- Provides unicast mode, where packet replication is done at the head end toward remote VTEPs.

- Supports Split Horizon Group (SHG) numbering in packet replication.

- Supports interoperations with a Bridge Virtual Interface (BVI) to allow inter-VXLAN or VLAN packet forwarding via routing.

- Supports VXLAN to VLAN gateway.

- Supports ARP request termination.

- VXLAN over both IPv6 and IPv4 is supported.

VXLAN as defined in RFC 7348 allows VPP bridge domains on multiple servers to be interconnected via VXLAN tunnels to behave as a single bridge domain which can also be called a VXLAN segment. Thus, VMs on multiple servers connected to this bridge domain (or VXLAN segment) can communicate with each other via layer 2 networking.

The functions supported by VPP for VXLAN are described in the following sub-sections.

VXLAN Tunnel Encap and Decap

VXLAN Headers

The VXLAN tunnel encap includes IP, UDP and VXLAN headers as follows:

Outer IPv4 Header:
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|Version|  IHL  |Type of Service|          Total Length         |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|         Identification        |Flags|      Fragment Offset    |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|  Time to Live |Protocl=17(UDP)|   Header Checksum             |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                   Outer Source IPv4 Address                   |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                 Outer Destination IPv4 Address                |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Outer UDP Header:
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|          Source Port          |    Dest Port = VXLAN Port     |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|          UDP Length           |         UDP Checksum          |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

VXLAN Header:
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|R|R|R|R|I|R|R|R|                   Reserved                    |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|        VXLAN Network Identifier (VNI)         |   Reserved    |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Inner Ethernet Header:
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                 Inner Destination MAC Address                 |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
| Inner Destination MAC Address |   Inner Source MAC Address    |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                   Inner Source MAC Address                    |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|OptnlEthtype = C-Tag 802.1Q    | Inner.VLAN Tag Information    |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Payload:
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
| Ethertype of Original Payload |                               |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+                               |
|                   Original Ethernet Payload                   |
|                                                               |
|(Note that the original Ethernet Frame's FCS is not included)  |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

The headers are used as follows:

- In the IP header, the DIP is the destination server VTEP and the SIP is the local server VTEP. After VXLAN header encap, the encap server uses the DIP to forward the packet to the destination server via L3 routing, assuming routes to all destination VTEP IPs are already set up correctly.

- At the destination, the DIP is local and the UDP destination port number lets the server identify the packet as a VXLAN packet and start VXLAN decap processing.

- VXLAN decap processing uses the VNI from the VXLAN header to identify how to perform L2 forwarding of the inner Ethernet frame. The VNI is typically associated with a BD in the server where the inner frame should be forwarded.
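As an illustration of the VXLAN header layout above, here is a minimal Python sketch that builds and parses the 8-byte header. It follows the field layout in RFC 7348; the function names are hypothetical and this is not how VPP itself is implemented.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" bit in the first header byte (RFC 7348)


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags (8 bits) + reserved (24 bits).
    # Word 1: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vxlan_header(header: bytes) -> int:
    """Return the VNI carried in a VXLAN header, or raise if the I flag is clear."""
    word0, word1 = struct.unpack("!II", header[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return word1 >> 8
```

A decap path would call something like `parse_vxlan_header` on the bytes that follow the outer UDP header and then use the returned VNI to select the tunnel.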

UDP Port Numbering for VXLAN Header

The UDP destination port number in the VXLAN encap must be 4789, as assigned by IANA for VXLAN. The UDP source port value in the VXLAN encap should be set according to a flow hash of the payload Ethernet frame, to help with ECMP load balancing as the packet is forwarded in the underlay network. The hash would be a normal 5-tuple hash for IP4/IP6 packets and a 3-tuple SMAC/DMAC/Ethertype hash for other packet types.
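The idea of deriving the source port from a flow hash can be sketched as follows. This is only an illustration: CRC32 stands in for whatever hash VPP actually uses, the function name is hypothetical, and mapping into the 49152-65535 range follows the recommendation in RFC 7348 rather than anything stated on this page.

```python
import zlib

VXLAN_UDP_DST_PORT = 4789  # IANA-assigned VXLAN port


def vxlan_source_port(flow_key: tuple) -> int:
    """Map a flow key to a UDP source port in the dynamic range 49152-65535.

    flow_key is a 5-tuple (src_ip, dst_ip, protocol, src_port, dst_port) for
    IP payloads, or a 3-tuple (src_mac, dst_mac, ethertype) otherwise.
    CRC32 is a stand-in hash chosen for this sketch.
    """
    digest = zlib.crc32(repr(flow_key).encode("ascii"))
    return 49152 + (digest % 16384)


ip_flow = ("7.0.0.2", "6.0.4.4", 1, 0, 0)
l2_flow = ("00:50:56:88:ca:7e", "de:ad:00:00:00:01", 0x0806)
ip_port = vxlan_source_port(ip_flow)
l2_port = vxlan_source_port(l2_flow)
```

Because the port depends only on the flow key, all packets of one flow take the same underlay path, while different flows can be spread across ECMP paths.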

VTEPs and VXLAN Tunnel Creation

Create VXLAN Tunnel with VTEPs

VTEPs (VXLAN Tunnel End Points) are specified via VXLAN tunnel creation – the source and destination IP addresses of each VXLAN tunnel are the local server VTEP address and the destination server VTEP address. The VNI value used for the VXLAN tunnel is also specified on VXLAN tunnel creation. Once a VXLAN tunnel is created, it is like a VPP interface and not yet associated with any BD.

Associate VXLAN Tunnel with BD

Once a VXLAN tunnel interface is created, it can be added to a bridge domain (BD) as a bridge port by specifying its BDID, just like how a local Ethernet interface can be added to a BD. As a VXLAN tunnel is added to a BD, the VNI used for creating the VXLAN tunnel will be mapped to the BDID. It is a good practice to allocate the same value for both VNI and BDID for all VXLAN tunnels on the same BD or VXLAN segment for all servers to prevent confusion.

Connecting VXLAN Tunnels among Multiple Servers

To set up a VXLAN segment or BD over multiple servers, it is recommended that a VPP BD with the same BDID be created on each server and that a full mesh of VXLAN tunnels among all servers be created to link up this BD on each server. In other words, on each server with this BD, a VXLAN tunnel with its VNI set to the same value as the BDID should preferably be created for each of the other servers and added to the BD. Making all BDIDs and VNIs the same value makes VXLAN segment connectivity much more apparent and less confusing.
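The full-mesh recommendation can be sketched as a small planning helper. The sketch below generates, for each server, one tunnel-creation command per remote VTEP, using the VAT command syntax shown later on this page. The helper name and the VTEP address 10.0.3.2 are hypothetical.

```python
from itertools import permutations


def full_mesh_tunnel_commands(vteps, vni, encap_vrf_id=0):
    """Return {local_vtep: [VAT command, ...]} for a full mesh of VXLAN tunnels."""
    commands = {vtep: [] for vtep in vteps}
    # Every ordered pair (local, remote) needs its own tunnel on the local server.
    for local, remote in permutations(vteps, 2):
        commands[local].append(
            f"vxlan_add_del_tunnel src {local} dst {remote} "
            f"vni {vni} encap-vrf-id {encap_vrf_id} decap-next l2"
        )
    return commands


plan = full_mesh_tunnel_commands(
    ["10.0.3.1", "10.0.3.2", "10.0.3.3"], vni=13, encap_vrf_id=7
)
```

With N servers, each server ends up with N-1 tunnels, so the segment as a whole has N*(N-1) tunnel interfaces.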

VXLAN Flooding

VXLAN Unicast Mode with Head-End Replication

As a VXLAN tunnel is just a bridge port, the normal packet replication to all bridge ports in a BD happens naturally. This behavior matches the VXLAN unicast mode of operation, where head-end packet replication is used to flood packets to remote VTEPs. The VXLAN multicast mode, which uses IP multicast for flooding to remote VTEPs, is NOT supported.

Split Horizon Group

As VXLAN tunnels are added to a BD, they must all be configured with the same non-zero Split Horizon Group (SHG) number. Otherwise, flooded packets may loop among servers on the same VXLAN segment, because the VXLAN tunnels are fully meshed among servers.
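The effect of the SHG number on flooding can be modelled in a few lines. This sketch assumes the usual split-horizon rule: a packet received on a port in a non-zero SHG is never flooded to another port in the same SHG. The function name and the second tunnel `vxlan_tunnel1` are hypothetical.

```python
def flood_targets(ports, in_port):
    """Return the bridge ports that receive a flooded copy of a packet.

    ports maps interface name to SHG number; SHG 0 means no split horizon.
    """
    in_shg = ports[in_port]
    return sorted(
        name
        for name, shg in ports.items()
        # Never send back to the input port, and never between two ports
        # that share the same non-zero SHG.
        if name != in_port and not (in_shg != 0 and shg == in_shg)
    )


bd13 = {"GigabitEthernet2/2/0": 0, "vxlan_tunnel0": 1, "vxlan_tunnel1": 1}
from_tunnel = flood_targets(bd13, "vxlan_tunnel0")
from_ethernet = flood_targets(bd13, "GigabitEthernet2/2/0")
```

A broadcast arriving on `vxlan_tunnel0` reaches only the local Ethernet port, so it is not re-sent over `vxlan_tunnel1` to a server that already received its own copy from the originating VTEP.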

VXLAN Tunnel Input and Output with Stats

The VXLAN tunneling implementation supports full interface TX/RX stats with packet and byte counters as follows:

- A packet input from a VXLAN tunnel interface has its IP/UDP/VXLAN headers decapsulated before it is L2 forwarded. The packet size shown in the RX stats is therefore measured after decap and excludes the IP/UDP/VXLAN headers.

- A packet output to a VXLAN tunnel interface has the IP/UDP/VXLAN headers encapsulated before it is L3 forwarded. The packet size shown in the TX stats is therefore measured after encap and includes the IP/UDP/VXLAN headers.

Most features that can be applied to an L2 interface, such as VLAN tag manipulation or input ACLs, can also be applied to a VXLAN tunnel interface.
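As a worked example of the difference between RX and TX byte counts, the sketch below computes the encap overhead from the standard header sizes (IPv4 without options). Whether the VPP counters match these figures exactly for every packet is an assumption, and the function names are hypothetical.

```python
IPV4_HEADER_BYTES = 20  # outer IPv4 header without options
IPV6_HEADER_BYTES = 40  # outer IPv6 fixed header
UDP_HEADER_BYTES = 8
VXLAN_HEADER_BYTES = 8


def encap_overhead(is_ipv6=False):
    """Bytes added in front of the inner Ethernet frame by VXLAN encap."""
    outer_ip = IPV6_HEADER_BYTES if is_ipv6 else IPV4_HEADER_BYTES
    return outer_ip + UDP_HEADER_BYTES + VXLAN_HEADER_BYTES


def tx_size(inner_frame_len, is_ipv6=False):
    """Size counted on output: inner frame plus IP/UDP/VXLAN headers."""
    return inner_frame_len + encap_overhead(is_ipv6)
```

For instance, a 98-byte inner frame would be counted as 98 bytes on input after decap, and as 134 bytes on output over an IPv4 underlay.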

VXLAN to VLAN Gateway Support

The VXLAN to VLAN gateway function can be performed in VPP via the bridge port VLAN tag manipulation function. If an Ethernet port is connected to a VLAN and this port is in promiscuous mode, one can create a sub-interface on this port for the VLAN and add this sub-interface to the VXLAN segment or BD with the proper VLAN tag pop/push/rewrite operation to perform the VXLAN to VLAN gateway function.

For the case where no other ports are on the VXLAN BD except the VXLAN tunnel and VLAN sub-interface, one can simply cross connect the VXLAN tunnel to the VLAN sub-interface with proper VLAN tag manipulation to become an extremely efficient VXLAN to VLAN gateway.

BVI Support

A bridge domain (BD) can have one L3 Bridge Virtual Interface (BVI) together with multiple VXLAN tunnels and Ethernet bridge ports. All three types of bridge ports (BVI, VXLAN and Ethernet) must interoperate properly to forward traffic to each other. A BVI allows VMs on separate BDs or VXLAN segments to reach each other via IRB (Integrated Routing and Bridging).

The BVI for a BD is set up by creating and configuring a loopback interface and then adding it to the BD as its BVI. A BD in a VPP instance can have only one BVI. A VXLAN segment, however, consists of multiple BDs on multiple VPP instances, and so may have multiple BVIs, with one BVI on each BD of each VPP.

ARP Request Termination

With VXLAN BD MACs provisioned statically and MAC learning disabled, VPP can perform L2 unicast forwarding very efficiently since unknown unicast flooding is not necessary. With IP4 unicast traffic, however, there is still one source of broadcast traffic due to ARP requests from tenant VMs to find MACs for IP addresses.

In order to minimize flooding of ARP requests to the whole VXLAN segment, VPP allows the control plane to provision any VPP BD with IP addresses and their associated MACs. Thereafter, VPP can use the IP and MAC information it has for the BD to terminate ARP requests and generate appropriate ARP responses to the requesting tenant VMs. If no suitable IP/MAC info is found, the ARP request cannot be terminated and so is still flooded to the VXLAN segment.

Cross Connect instead of Bridging

As an optimization, the VXLAN tunnel interface can also be cross connected to a L2 interface if there are only 2 bridge ports, being the VXLAN tunnel and an Ethernet port, in a BD. The cross connect optimization can improve packet forwarding performance as bridging overhead of MAC learning and lookup will not be necessary.

Instead of using cross connect, it is still possible to optimize BD forwarding with 2 bridge ports if both MAC learning and MAC lookup are disabled. The ability to disable learning is supported in VPP while MAC lookup cannot be disabled at present.

Another usage can be to cross connect two VXLAN tunnels to perform VNI stitching.

Architecture

The following sections explain the design approach behind the various aspects of the VPP VXLAN tunneling implementation.

VXLAN Tunnels

In order to support VXLAN on VPP, the design provides the ability to create a VXLAN tunnel interface which can be added to a bridge domain as a bridge port to participate in L2 forwarding. Thus, the current VPP L2 bridging functionality naturally interacts with VXLAN tunnels as normal bridge ports and performs learning, flooding and unicast forwarding transparently.

It is the VXLAN tunnel code which will handle the encap and decap handling of the forwarded packet as follows:

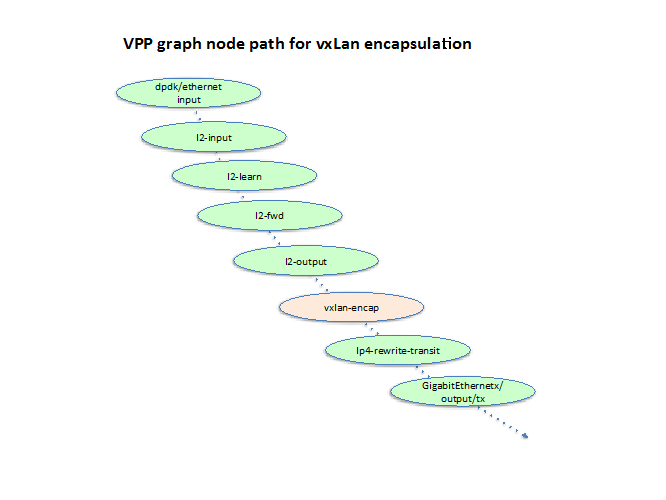

- OUTPUT – as a packet is L2 forwarded in a BD to a VXLAN tunnel, the L2 output for that packet will naturally send it to the VXLAN output node vxlan-encap. This node can then look up the tunnel control block of the VXLAN tunnel and find the encap string and VRF for IP forwarding. The node can then put the encap string on the packet, set up the VRF in the packet context, and send the packet to the ip4-lookup node to perform forwarding.

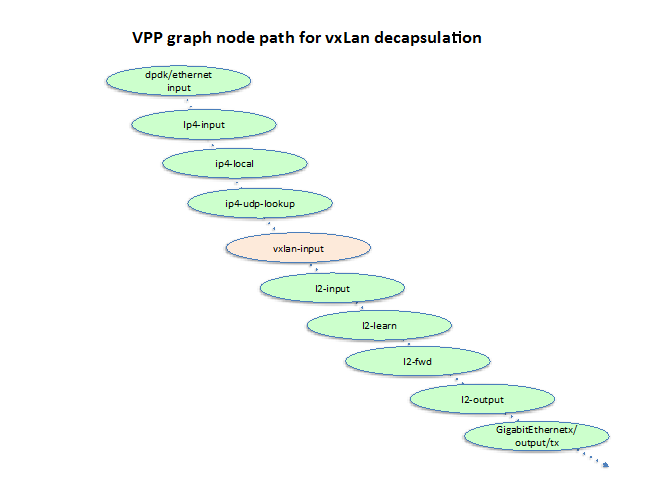

- INPUT – On receiving a VXLAN encap packet with DIP being a local IP (in fact, VPP’s VTEP IP) address, the packet will get to ip4-local node for processing. The ip4-local node will then send the packet to vxlan-input node because the UDP destination port number is for VXLAN (4789). The vxlan-input node will use the SIP and VNI of the outer header to lookup the VXLAN tunnel control block to obtain its sw_if_index, remove the VXLAN header from the packet, setup the sw_if_index of the VXLAN tunnel as input sw_if_index, and finally pass the packet to l2-input node for L2 forwarding.

Note that vxlan-input node does not map VNI to BDID but rather to the sw_if_index of the VXLAN tunnel and set it as the input interface of the decap’ed Ethernet payload. Thereafter, the L2 forwarding of the Ethernet packet just proceeds according to whether it is in a BD or cross connected to another L2 interface. This flexibility will even allow two VXLAN tunnels to be cross connected to each other to allow VNI stitching which may be useful in the future.
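The decap lookup described above, keyed by outer source IP and VNI and returning the tunnel's sw_if_index, can be sketched with a dictionary. The class and method names are hypothetical; the real vxlan-input node uses its own hash table and data structures.

```python
import ipaddress


class VxlanTunnelTable:
    """Toy model of the (outer SIP, VNI) -> sw_if_index lookup done on decap."""

    def __init__(self):
        self._sw_if_index_by_key = {}

    def add(self, remote_vtep, vni, sw_if_index):
        # The tunnel's dst (remote VTEP) is the SIP seen on received packets.
        key = (ipaddress.ip_address(remote_vtep), vni)
        self._sw_if_index_by_key[key] = sw_if_index

    def lookup(self, outer_src_ip, vni):
        """Return the input sw_if_index, or None if no tunnel matches."""
        key = (ipaddress.ip_address(outer_src_ip), vni)
        return self._sw_if_index_by_key.get(key)


tunnels = VxlanTunnelTable()
tunnels.add("10.0.3.3", 13, sw_if_index=9)  # vxlan_tunnel0 in the examples
```

Because the result is an interface index rather than a BDID, the decapsulated frame is handled like a frame received on any other L2 interface, whether that interface is bridged or cross connected.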

The two diagrams in this section show the relationship of the newly added VXLAN encap node and input (decap) node with the other VPP nodes while forwarding packets, assuming the VXLAN tunnel is configured as an interface on a BD.

ARP Request Termination

The ARP termination feature is added to the L2 feature list with a bit allocated from the feature bitmap corresponding to a new graph node l2-arp-term. The arp-term-l2bd node is added to the ARP handling module of VPP to process ARP request packets in the L2 forwarding path. This feature bit is chosen so that l2-arp-term is called just before the l2-flood node.

A new API/CLI that allows the user to provide IP and MAC address bindings for a specified BD is also added to the L2 BD handling module of VPP. Each MAC address will be stored in a hash table mac_by_ip4 for the BD using IP address as key.

The classify-and-dispatch path of the l2-input node of VPP is enhanced to decide whether the ARP termination bit should be enabled for the incoming packet. This check is done in the broadcast MAC classification path only, so normal unicast and multicast packet performance is not affected. If a packet is a broadcast ARP packet and ARP termination is enabled for a BD, the ARP termination bit in the feature bitmap is set for this packet. Thereafter, the packet goes through L2 feature processing in its normal order and hits the ARP termination node. For other packets, or if ARP termination is not enabled in the BD, the ARP termination bit of the packet is clear, so no extra processing overhead is added.

The new graph node arp-term-l2bd looks up mac_by_ip4 using the ARP request IP as key. If a MAC entry is found, an ARP reply is sent to the input interface. If no entry is found, the ARP request packet is flooded normally, following the normal L2 broadcast forwarding path.
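The lookup-then-reply-or-flood decision can be sketched as follows. The table name `mac_by_ip4` comes from the text above; the class, its methods and the returned action strings are hypothetical and only model the decision, not the packet handling.

```python
class BridgeDomainArpTable:
    """Toy model of per-BD ARP termination using an IP-to-MAC table."""

    def __init__(self):
        self.mac_by_ip4 = {}

    def add(self, ip4, mac):
        self.mac_by_ip4[ip4] = mac

    def delete(self, ip4):
        self.mac_by_ip4.pop(ip4, None)

    def handle_arp_request(self, target_ip4):
        """Return ("reply", mac) if the request can be terminated, else ("flood", None)."""
        mac = self.mac_by_ip4.get(target_ip4)
        if mac is None:
            return ("flood", None)
        return ("reply", mac)


bd13_arp = BridgeDomainArpTable()
bd13_arp.add("7.0.0.11", "11:12:13:14:15:16")
```

A request for a provisioned address is answered locally, while a request for an unknown address falls back to normal flooding.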

Loopback Interface MAC

The loopback interface creation CLI/API is enhanced to let the user specify a MAC address on creation. A new API is also added to allow deletion of a loopback interface. Thus, a loopback interface can be configured as the BVI for a BD with the desired MAC address. If the VPP-assigned MAC address is used for a loopback/BVI, there is a potential for MAC address conflicts when VXLAN tunnels are used to connect multiple BDs, each with its own BVI, among servers.

Software Modules

The VXLAN tunnel module follows the most up-to-date approach to providing tunnels on VPP, with a C source file for each of the encap, decap and creation/deletion functions in the VPP workspace directory.

.../vpp/vnet/vnet/vxlan

- encap.c – implements the vxlan-encap node to process packets output to the VXLAN tunnel.

- decap.c – implements the vxlan-input node to process packets received on the VXLAN tunnel.

- vxlan.c – implements the VXLAN tunnel create/delete functions to create or delete VXLAN tunnel interfaces and their associated data structures. The debug CLI to create and delete VXLAN tunnels is also provided by this file.

Other header and miscellaneous files present in this directory are as follows:

- vxlan.h – VXLAN tunnel related data structure, function prototypes and inlined body.

- vxlan_packet.h – VXLAN tunnel header related definitions.

- vxlan_error.def – text for VXLAN tunnel related global counters.

The files modified for ARP termination and MAC/IP binding notification are as follows:

- .../vpp/vnet/vnet/l2/l2_input.c/h

- .../vpp/vnet/vnet/l2/l2_bd.c/h

- .../vpp/vnet/vnet/ethernet/arp.c/h

The files modified to allow MAC address to be specified for loopback interface are as follows:

- .../vpp/vnet/vnet/ethernet/ethernet.c/h

- .../vpp/vnet/vnet/ethernet/interface.c

API

A VPP API message is defined in the file .../vpp/vpp/api/vpp.api. During the VPP build process, the vppapigen tool is built and used to generate the proper C typedef structs as shown in the following sections.

Bridge Domain Creation

The following is the VPP API message definition for creating and deleting bridge domains:

typedef VL_API_PACKED(struct _vl_api_bridge_domain_add_del {
    u16 _vl_msg_id;
    u32 client_index;
    u32 context;
    u32 bd_id;
    u8 flood;
    u8 uu_flood;
    u8 forward;
    u8 learn;
    u8 arp_term;
    u8 is_add;
}) vl_api_bridge_domain_add_del_t;

typedef VL_API_PACKED(struct _vl_api_bridge_domain_add_del_reply {
    u16 _vl_msg_id;
    u32 context;
    i32 retval;
}) vl_api_bridge_domain_add_del_reply_t;

In the bridge domain create/delete message, the fields flood, uu_flood, forward, learn and arp_term specify whether each of the relevant features is enabled, with 0 indicating disabled and non-zero indicating enabled. The field is_add is set to 1 to add a new bridge domain or modify an existing one, and set to 0 to delete the bridge domain specified by bd_id.
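To make the packed layout concrete, here is a hedged Python sketch that serializes a bridge domain create message with the `struct` module. It assumes the message starts with the `u16 _vl_msg_id` field carried by the other messages on this page, that fields are sent in network byte order, and that the message ID and client index shown are placeholders (real values are assigned at run time). The helper name is hypothetical.

```python
import struct

# u16 msg id, u32 client_index, u32 context, u32 bd_id, then six u8 flags.
# "!" selects network byte order with no padding (assumed wire format).
BRIDGE_DOMAIN_ADD_DEL = struct.Struct("!HIIIBBBBBB")


def pack_bridge_domain_add_del(msg_id, client_index, context, bd_id,
                               flood=1, uu_flood=1, forward=1, learn=1,
                               arp_term=0, is_add=1):
    """Serialize the request; defaults match the BD 13 example on this page."""
    return BRIDGE_DOMAIN_ADD_DEL.pack(
        msg_id, client_index, context, bd_id,
        flood, uu_flood, forward, learn, arp_term, is_add,
    )


# Placeholder msg_id and client_index; only bd_id and the flags are meaningful here.
message = pack_bridge_domain_add_del(msg_id=0, client_index=0, context=1, bd_id=13)
```

The packed message is 20 bytes long, which matches the sum of the field sizes because `VL_API_PACKED` removes padding.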

VXLAN Tunnel Creation

The following is the VPP API message definition for creating and deleting VXLAN tunnels:

typedef VL_API_PACKED(struct _vl_api_vxlan_add_del_tunnel {
    u16 _vl_msg_id;
    u32 client_index;
    u32 context;
    u8 is_add;
    u8 is_ipv6;
    u8 src_address[16];
    u8 dst_address[16];
    u32 encap_vrf_id;
    u32 decap_next_index;
    u32 vni;
}) vl_api_vxlan_add_del_tunnel_t;

typedef VL_API_PACKED(struct _vl_api_vxlan_add_del_tunnel_reply {
    u16 _vl_msg_id;
    u32 context;
    i32 retval;
    u32 sw_if_index;
}) vl_api_vxlan_add_del_tunnel_reply_t;

In the tunnel create/delete message, the field decap_next_index should be set to the next index for the l2-input node. The next index defaults to that of the l2-input node if the value specified for decap_next_index in the message is ~0 (0xffffffff). The field decap_next_index can also be set to the ip4-input or ip6-input node if the payload is an IP4/IP6 packet without an L2 header, but this is not the normal usage of a VXLAN tunnel.

The API allows for both IPv6 and IPv4 addresses. In the case of IPv4, the least significant 32 bits of the address field are used.

In the reply message, the sw_if_index of the created VXLAN tunnel is returned which can then be used to add it to a BD or cross connect to another L2 interface.

ARP Termination

The following set bridge flags API message is enhanced, with a new bit allocated, to allow ARP termination to be enabled or disabled on a BD:

typedef VL_API_PACKED(struct _vl_api_bridge_flags {
    u16 _vl_msg_id;
    u32 client_index;
    u32 context;
    u32 bd_id;
    u8 is_set;
    u32 feature_bitmap;
}) vl_api_bridge_flags_t;

typedef VL_API_PACKED(struct _vl_api_bridge_flags_reply {
    u16 _vl_msg_id;
    u32 context;
    i32 retval;
    u32 resulting_feature_bitmap;
}) vl_api_bridge_flags_reply_t;

The bits allocated for BD features in feature_bitmap are in .../vpp/vnet/vnet/l2/l2_bd.h:

#define L2_LEARN    (1<<0)
#define L2_FWD      (1<<1)
#define L2_FLOOD    (1<<2)
#define L2_UU_FLOOD (1<<3)
#define L2_ARP_TERM (1<<4)
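The way these bits combine can be sketched in Python. The constants mirror the definitions above; the helper is hypothetical and assumes that `is_set` selects between setting and clearing the bits given in `feature_bitmap`, with the result reported back as `resulting_feature_bitmap`.

```python
L2_LEARN = 1 << 0
L2_FWD = 1 << 1
L2_FLOOD = 1 << 2
L2_UU_FLOOD = 1 << 3
L2_ARP_TERM = 1 << 4


def apply_bridge_flags(current, feature_bitmap, is_set):
    """Return the BD feature bitmap after setting or clearing the given bits."""
    if is_set:
        return current | feature_bitmap
    return current & ~feature_bitmap


# A BD with learning, forwarding, flooding and unknown-unicast flooding enabled.
default_bd = L2_LEARN | L2_FWD | L2_FLOOD | L2_UU_FLOOD
with_arp_term = apply_bridge_flags(default_bd, L2_ARP_TERM, is_set=True)
without_arp_term = apply_bridge_flags(with_arp_term, L2_ARP_TERM, is_set=False)
```

Enabling ARP termination on a default BD therefore moves the bitmap from 0x0f to 0x1f, and disabling it restores 0x0f.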

The following is the API message for adding and deleting IP and MAC entries into BDs to support ARP request termination:

typedef VL_API_PACKED(struct _vl_api_bd_ip_mac_add_del {
    u16 _vl_msg_id;
    u32 client_index;
    u32 context;
    u32 bd_id;
    u8 is_add;
    u8 is_ipv6;
    u8 ip_address[16];
    u8 mac_address[6];
}) vl_api_bd_ip_mac_add_del_t;

typedef VL_API_PACKED(struct _vl_api_bd_ip_mac_add_del_reply {
    u16 _vl_msg_id;
    u32 context;
    i32 retval;
}) vl_api_bd_ip_mac_add_del_reply_t;

The API is designed to be generic to support IPv6 as well. For now, the API call will fail if is_ipv6 is set as IPv6 neighbor discovery termination is not yet supported.

Loopback Interface Creation

The following sample shows the API message for creating and deleting loopback interfaces:

typedef VL_API_PACKED(struct _vl_api_create_loopback {
    u16 _vl_msg_id;
    u32 client_index;
    u32 context;
    u8 mac_address[6];
}) vl_api_create_loopback_t;

typedef VL_API_PACKED(struct _vl_api_create_loopback_reply {
    u16 _vl_msg_id;
    u32 context;
    u32 sw_if_index;
    i32 retval;
}) vl_api_create_loopback_reply_t;

typedef VL_API_PACKED(struct _vl_api_delete_loopback {
    u16 _vl_msg_id;
    u32 client_index;
    u32 context;
    u32 sw_if_index;
}) vl_api_delete_loopback_t;

typedef VL_API_PACKED(struct _vl_api_delete_loopback_reply {
    u16 _vl_msg_id;
    u32 context;
    i32 retval;
}) vl_api_delete_loopback_reply_t;

If the value 0 is used for mac_address, then the default MAC address of dead:0000:000n will be used where n is the loopback instance number.
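As a small illustration of the default address rule, the sketch below derives the default MAC for a given loopback instance and formats it in the colon-separated form used elsewhere on this page. The helper names are hypothetical, and the behavior for instance numbers above 255 is not described here, so the sketch rejects them.

```python
def default_loopback_mac(instance):
    """Return the 6-byte default MAC dead:0000:000n for loopback instance n."""
    if not 0 <= instance <= 0xFF:
        # Larger instance numbers are outside what this page describes.
        raise ValueError("instance must fit in one byte for this sketch")
    return bytes([0xDE, 0xAD, 0x00, 0x00, 0x00, instance])


def format_mac(mac):
    """Format a 6-byte MAC address as colon-separated hex."""
    return ":".join(f"{byte:02x}" for byte in mac)


loop1_mac = format_mac(default_loopback_mac(1))
```

For loopback instance 1 this yields de:ad:00:00:00:01, which is the destination MAC visible in the packet trace at the end of this page.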

The sw_if_index field is used to specify the loopback interface to delete.

Note that the BD membership and IP addresses of a loopback interface must be cleared before the loopback interface is deleted. Otherwise, VPP may become unstable or even crash. Similarly, the sw_if_index used to delete a loopback interface must be the correct one, or the resulting VPP behavior is unpredictable.

Configuration and Verification

The VPP platform is not expected to be used by end users directly. It is expected to be provisioned by control plane or orchestration software using its binary API.

For development or testing purposes, however, the VPP platform does provide command-line debug features that allow control of the VPP engine and gather debug information. In addition, the VPE API Test (VAT) tool vpe_api_test, with its own set of CLIs, can also be used to call VPP APIs to provision the VPP engine while also testing its binary APIs.

This means that a developer who is modifying the control plane can use VAT to try out VPP API calling sequences to make sure that a configuration is provisioned properly.

Configuration Sequence

Be aware that the VPP platform expects the control plane to make API calls in the correct sequence.

VPP does not perform many error checks for out-of-sequence calls. This means that making an API call in an incorrect sequence can put the VPP engine into an unpredictable state or may even cause it to crash. For testing, especially automated testing, it is preferable to use the VPE API Test tool rather than VPP's debug API directly. Because the VPE API Test tool calls the VPP API, all of the VPP API's associated capabilities, including API trace capture, replay, and custom dump, can also be used to recreate any situation that led to VPP issues or a crash.

The following subsections describe CLI/VAT commands that can be used to control the VPP functions or features, along with relevant output that helps diagnose issues.

Bridge Domain Creation

The following example command shows the configuration sequence to create a bridge domain with BD ID of 13 with learning, forwarding, unknown-unicast flood, flooding enabled and ARP-termination disabled:

VAT commands

bridge_domain_add_del bd_id 13 learn 1 forward 1 uu-flood 1 flood 1 arp-term 0

VPP commands

N/A – as an interface or a vxlan tunnel is added to a bridge domain which does not already exist, it will be created with learning, forwarding, unknown-unicast flood, flooding enabled and ARP-termination disabled by default.

VXLAN Tunnel Creation and Setup

Following is the configuration sequence to create a VXLAN tunnel and put it into a bridge domain with BD ID of 13:

VAT commands

vxlan_add_del_tunnel src 10.0.3.1 dst 10.0.3.3 vni 13 encap-vrf-id 7 decap-next l2
sw_interface_dump
sw_interface_set_l2_bridge vxlan_tunnel0 bd_id 13 shg 1

VPP commands

create vxlan tunnel src 10.0.3.1 dst 10.0.3.3 vni 13 encap-vrf-id 7 decap-next l2
set interface l2 bridge vxlan_tunnel0 13 1

For both VPP and VAT, VXLAN over IPv6 tunnels are created by simply substituting IPv6 addresses for the src and dst fields.

VXLAN Tunnel Tear-Down and Deletion

Following is the configuration sequence to delete a VXLAN tunnel, which must first be removed from any BD it is attached to:

VAT commands

sw_interface_set_l2_bridge vxlan_tunnel0 bd_id 13 disable
vxlan_add_del_tunnel src 10.0.3.1 dst 10.0.3.3 vni 13 del

VPP commands

set interface l3 vxlan_tunnel0
create vxlan tunnel src 10.0.3.1 dst 10.0.3.3 vni 13 del

BVI Interface Creation and Setup

Following is the configuration sequence to create a loopback interface, put it into BD 13 as a BVI interface, put it into VRF 5 and assign an IP address with subnet of 6.0.0.250/16:

VAT commands

create_loopback mac 1a:2b:3c:4d:5e:6f
sw_interface_dump
sw_interface_set_flags admin-up loop0
sw_interface_set_l2_bridge loop0 bd_id 13 shg 0 bvi
sw_interface_set_table loop0 vrf 5
sw_interface_add_del_address loop0 6.0.0.250/16

VPP commands

loopback create mac 1a:2b:3c:4d:5e:6f
set interface l2 bridge loop0 13 bvi
set interface state loop0 up
set interface ip table loop0 5
set interface ip address loop0 6.0.0.250/16

BVI Interface Tear-Down and Deletion

Following is the configuration sequence to delete a loopback interface which is the BVI of a BD. Before the deletion, the IP address/subnet of the loopback interface must be removed and the interface must be removed from the BD:

VAT commands

sw_interface_add_del_address loop0 6.0.0.250/16 del
sw_interface_set_l2_bridge loop0 bd_id 13 bvi disable
delete_loopback loop0

VPP commands

set interface ip address loop0 del all
set interface l3 loop0
loopback delete loop0

Example Config of BD with BVI/VXLAN-Tunnel/Ethernet-Port

Following is the configuration sequence to create and add a loopback/BVI, a VXLAN tunnel, and an Ethernet interface into a BD:

VAT commands

create_loopback mac 1a:2b:3c:4d:5e:6f
vxlan_add_del_tunnel src 10.0.3.1 dst 10.0.3.3 vni 13 encap-vrf-id 7 decap-next l2
sw_interface_dump
sw_interface_set_flags admin-up loop0
sw_interface_set_flags admin-up GigabitEthernet2/2/0
sw_interface_set_l2_bridge GigabitEthernet2/2/0 bd_id 13 shg 0
sw_interface_set_l2_bridge vxlan_tunnel0 bd_id 13 shg 1
sw_interface_set_l2_bridge loop0 bd_id 13 shg 0 bvi
sw_interface_set_table loop0 vrf 5
sw_interface_add_del_address loop0 6.0.0.250/16

VPP commands

loopback create mac 1a:2b:3c:4d:5e:6f
create vxlan tunnel src 10.0.3.1 dst 10.0.3.3 vni 13 encap-vrf-id 7 decap-next l2
set interface state loop0 up
set interface state GigabitEthernet2/2/0 up
set interface l2 bridge GigabitEthernet2/2/0 13 0
set interface l2 bridge vxlan_tunnel0 13 1
set interface l2 bridge loop0 13 0 bvi
set interface ip table loop0 5
set interface ip address loop0 6.0.0.250/16

Enable/Disable ARP termination of a BD

Following is a configuration example to enable or disable ARP termination of a BD:

VAT commands

bridge_flags bd_id 13 arp-term
bridge_flags bd_id 13 arp-term clear

VPP commands

set bridge-domain arp term 13
set bridge-domain arp term 13 disable

Add/Delete IP to MAC Entry to a BD for ARP Termination

Following is a configuration example to add or delete an IP to MAC entry to/from a BD:

VAT commands

bd_ip_mac_add_del bd_id 13 7.0.0.11 11:12:13:14:15:16
bd_ip_mac_add_del bd_id 13 7.0.0.11 11:12:13:14:15:16 del

VPP commands

set bridge-domain arp entry 13 7.0.0.11 11:12:13:14:15:16
set bridge-domain arp entry 13 7.0.0.11 11:12:13:14:15:16 del

Show Command Output for VXLAN related Information

The following samples show typical output for various VPP show commands. These commands show information relevant to BDs, VXLAN tunnels and stats.

Bridge Domain and Port Info

vpp# show bridge
  ID   Index   Learning   U-Forwrd   UU-Flood   Flooding   ARP-Term   BVI-Intf
  0    0       off        off        off        off        off        local0
  13   1       on         on         on         on         on         loop0
  11   2       on         on         on         on         off        loop1

vpp# show bridge 11 detail
  ID   Index   Learning   U-Forwrd   UU-Flood   Flooding   ARP-Term   BVI-Intf
  11   2       on         on         on         on         off        loop1

  Interface              Index   SHG   BVI   VLAN-Tag-Rewrite
  loop1                  11      0     *     none
  GigabitEthernet2/1/0   5       0     -     none

vpp# show bridge 13 detail
  ID   Index   Learning   U-Forwrd   UU-Flood   Flooding   ARP-Term   BVI-Intf
  13   1       on         on         on         on         on         loop0

  Interface              Index   SHG   BVI   VLAN-Tag-Rewrite
  loop0                  10      0     *     none
  GigabitEthernet2/2/0   6       0     -     none
  vxlan_tunnel0          9       1     -     none

  IP4 to MAC table for ARP Termination
  7.0.0.11 => 11:12:13:14:15:16
  7.0.0.10 => 10:11:12:13:14:15

vpp# show bridge 13 int
  ID   Index   Learning   U-Forwrd   UU-Flood   Flooding   ARP-Term   BVI-Intf
  13   1       on         on         on         on         on         loop0

  Interface              Index   SHG   BVI   VLAN-Tag-Rewrite
  loop0                  10      0     *     none
  GigabitEthernet2/2/0   6       0     -     none
  vxlan_tunnel0          9       1     -     none

vpp# show bridge 13 arp
  ID   Index   Learning   U-Forwrd   UU-Flood   Flooding   ARP-Term   BVI-Intf
  13   1       on         on         on         on         on         loop0

  IP4 to MAC table for ARP Termination
  7.0.0.11 => 11:12:13:14:15:16
  7.0.0.10 => 10:11:12:13:14:15

VXLAN Tunnel Info

vpp# show vxlan tunnel
[0] 10.0.3.1 (src) 10.0.3.3 (dst) vni 13 encap_fib_index 1 decap_next l2

Interface Address and Modes

vpp# show interface address
GigabitEthernet2/1/0 (up):
  l2 bridge bd_id 11 shg 0
GigabitEthernet2/2/0 (up):
  l2 bridge bd_id 13 shg 0
GigabitEthernet2/3/0 (up):
  10.0.3.1/24 table 7
GigabitEthernet2/4/0 (dn):
local0 (dn):
loop0 (up):
  l2 bridge bd_id 13 bvi shg 0
  6.0.0.250/16 table 5
loop1 (up):
  l2 bridge bd_id 11 bvi shg 0
  7.0.0.250/24 table 5
pg/stream-0 (dn):
pg/stream-1 (dn):
pg/stream-2 (dn):
pg/stream-3 (dn):
vxlan_tunnel0 (up):
  l2 bridge bd_id 13 shg 1

Interface Stats

vpp# show interface
Name                   Idx   State   Counter      Count
GigabitEthernet2/1/0   5     up      rx packets   12
                                     rx bytes     1138
                                     tx packets   9
                                     tx bytes     826
GigabitEthernet2/2/0   6     up      tx packets   2
                                     tx bytes     84
GigabitEthernet2/3/0   7     up      rx packets   12
                                     rx bytes     1612
                                     tx packets   12
                                     tx bytes     1576
                                     drops        1
                                     ip4          11
GigabitEthernet2/4/0   8     down
local0                 0     down
loop0                  10    up      rx packets   11
                                     rx bytes     848
                                     tx packets   12
                                     tx bytes     1026
                                     drops        3
                                     ip4          9
loop1                  11    up      rx packets   12
                                     rx bytes     970
                                     tx packets   9
                                     tx bytes     826
                                     drops        3
                                     ip4          11
pg/stream-0            1     down
pg/stream-1            2     down
pg/stream-2            3     down
pg/stream-3            4     down
vxlan_tunnel0          9     up      rx packets   11
                                     rx bytes     1002
                                     tx packets   12
                                     tx bytes     1458

Graph Node Global Counters

vpp# show node counters
Count   Node            Reason
1       arp-input       ARP replies sent
4       arp-input       ARP replies received
2       arp-term-l2bd   ARP replies sent
8       l2-flood        L2 flood packets
25      l2-input        L2 input packets
14      l2-learn        L2 learn packets
2       l2-learn        L2 learn misses
12      l2-learn        L2 learn hits
13      l2-output       L2 output packets
6       vxlan-encap     good packets encapsulated
5       vxlan-input     good packets decapsulated
5       ip4-arp         ARP requests sent

Packet Trace

The following example shows typical output from a packet trace of a ping (an ICMP echo request packet). In the following example, the ping is sent from a port with IP address 7.0.0.2 in a BD (with bd_index 1) to an IP address 6.0.4.4.

A typical VPP command to capture the next 10 packets is:

trace add dpdk-input 10

The VPP command to show packet trace is:

show trace

The destination IP address 6.0.4.4 resides in another BD (with bd_index 0) on another server. Thus, the packet is forwarded from the first BD to the second BD via the BVI and then sent from the second BD through the VXLAN tunnel to the other server. The ICMP echo reply is received at the local VTEP IP address and, after VXLAN header decapsulation, forwarded in the second BD. The reply is then forwarded from the second BD to the first BD via the BVI and finally output on the port with IP address 7.0.0.2.
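Because the packet crosses from one BD to the other through the BVI, it is routed rather than bridged, so its TTL is decremented and its IPv4 header checksum changes. In the trace for Packet 7 below, ip4-input reports ttl 64 with checksum 0x9996, while the hex dump at ip4-rewrite-transit carries ttl 0x3f (63) and checksum 0x9a96. The sketch below recomputes both values with the standard IPv4 one's-complement header checksum. The function name and variable names are illustrative, and the header bytes are copied from the hex dump in the trace.

```python
def ipv4_header_checksum(header: bytes) -> int:
    """Compute the IPv4 header checksum, treating the checksum field as zero."""
    total = 0
    for i in range(0, len(header), 2):
        if i == 10:  # bytes 10-11 hold the checksum itself; skip them
            continue
        total += (header[i] << 8) | header[i + 1]
    # Fold the carries back in (one's-complement addition).
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


# 20-byte inner IPv4 header from the ip4-rewrite-transit hex dump (ttl 63).
routed = bytes.fromhex("45000054900d40003f019a960700000206000404")

# The same header as received at ip4-input, before the TTL decrement.
received = bytearray(routed)
received[8] = 64  # byte 8 is the TTL field

print(hex(ipv4_header_checksum(bytes(received))))
print(hex(ipv4_header_checksum(routed)))
```

The two printed values can be compared against the checksum fields shown at ip4-input and in the rewritten packet.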

Look for output from the vxlan-encap node and vxlan-input node by searching for the strings:

- vxlan-encap

- vxlan-input
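If the trace has been saved to a file or captured as text, the search can also be scripted. The following is a minimal sketch, assuming each packet starts with a "Packet N" line and each graph node appears on a line of the form "HH:MM:SS:microseconds: node-name", as in the trace below. The function name nodes_per_packet and the SAMPLE text are illustrative only.

```python
import re

# A timestamped node line such as "17:39:13:781675: vxlan-encap".
NODE_LINE = re.compile(r"^\d{2}:\d{2}:\d{2}:\d+: (\S+)$")
PACKET_LINE = re.compile(r"^Packet (\d+)$")


def nodes_per_packet(trace_text):
    """Return {packet_number: [node names in the order they were visited]}."""
    packets = {}
    current = None
    for raw in trace_text.splitlines():
        line = raw.strip()
        match = PACKET_LINE.match(line)
        if match:
            current = int(match.group(1))
            packets[current] = []
            continue
        match = NODE_LINE.match(line)
        if match and current is not None:
            packets[current].append(match.group(1))
    return packets


# Shortened excerpt of a "show trace" capture.
SAMPLE = """\
Packet 7
17:39:13:781645: dpdk-input
GigabitEthernet2/1/0 rx queue 0
17:39:13:781675: vxlan-encap
VXLAN-ENCAP: tunnel 0 vni 13
Packet 8
17:39:13:781815: dpdk-input
17:39:13:781830: vxlan-input
VXLAN: tunnel 0 vni 13 next 1 error 0
"""

packets = nodes_per_packet(SAMPLE)
encapsulated = [n for n, nodes in packets.items() if "vxlan-encap" in nodes]
decapsulated = [n for n, nodes in packets.items() if "vxlan-input" in nodes]
print("encapsulated packets:", encapsulated)
print("decapsulated packets:", decapsulated)
```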

Packet 7

17:39:13:781645: dpdk-input

GigabitEthernet2/1/0 rx queue 0

buffer 0x3fdb40: current data 0, length 98, free-list 0, totlen-nifb 0, trace 0x6

PKT MBUF: port 0, nb_segs 1, pkt_len 98

buf_len 2304, data_len 98, ol_flags 0x0

IP4: 00:50:56:88:ca:7e -> de:ad:00:00:00:01

ICMP: 7.0.0.2 -> 6.0.4.4

tos 0x00, ttl 64, length 84, checksum 0x9996

fragment id 0x900d, flags DONT_FRAGMENT

ICMP echo_request checksum 0xbb77

17:39:13:781653: ethernet-input

IP4: 00:50:56:88:ca:7e -> de:ad:00:00:00:01

17:39:13:781658: l2-input

l2-input: sw_if_index 5 dst de:ad:00:00:00:01 src 00:50:56:88:ca:7e

17:39:13:781660: l2-learn

l2-learn: sw_if_index 5 dst de:ad:00:00:00:01 src 00:50:56:88:ca:7e bd_index 1

17:39:13:781662: l2-fwd

l2-fwd: sw_if_index 5 dst de:ad:00:00:00:01 src 00:50:56:88:ca:7e bd_index 1

17:39:13:781664: ip4-input

ICMP: 7.0.0.2 -> 6.0.4.4

tos 0x00, ttl 64, length 84, checksum 0x9996

fragment id 0x900d, flags DONT_FRAGMENT

ICMP echo_request checksum 0xbb77

17:39:13:781669: ip4-rewrite-transit

fib: 2 adjacency: loop0

005056887b68dead000000000800 flow hash: 0x00000000

00000000: 005056887b68dead00000000080045000054900d40003f019a96070000020600

00000020: 04040800bb7719b1000481e02b5500000000aaca0c00000000001011

17:39:13:781671: l2-input

l2-input: sw_if_index 11 dst 00:50:56:88:7b:68 src de:ad:00:00:00:00

17:39:13:781672: l2-fwd

l2-fwd: sw_if_index 10 dst 00:50:56:88:7b:68 src de:ad:00:00:00:00 bd_index 0

17:39:13:781673: l2-output

l2-output: sw_if_index 9 dst 00:50:56:88:7b:68 src de:ad:00:00:00:00

17:39:13:781675: vxlan-encap

VXLAN-ENCAP: tunnel 0 vni 13

17:39:13:781678: ip4-rewrite-transit

fib: 2 adjacency: GigabitEthernet2/3/0

IP4: 00:50:56:88:90:33 -> 00:50:56:88:00:ac flow hash: 0x00000000

IP4: 00:50:56:88:90:33 -> 00:50:56:88:00:ac

UDP: 10.0.3.1 -> 10.0.3.3

tos 0x00, ttl 253, length 134, checksum 0xa363

fragment id 0x0000

UDP: 29806 -> 4789

length 114, checksum 0x0000

17:39:13:781681: GigabitEthernet2/3/0-output

GigabitEthernet2/3/0

IP4: 00:50:56:88:90:33 -> 00:50:56:88:00:ac

UDP: 10.0.3.1 -> 10.0.3.3

tos 0x00, ttl 253, length 134, checksum 0xa363

fragment id 0x0000

UDP: 29806 -> 4789

length 114, checksum 0x0000

17:39:13:781683: GigabitEthernet2/3/0-tx

GigabitEthernet2/3/0 tx queue 0

buffer 0x3fdb40: current data -50, length 148, free-list 0, totlen-nifb 0, trace 0x6

IP4: 00:50:56:88:90:33 -> 00:50:56:88:00:ac

UDP: 10.0.3.1 -> 10.0.3.3

tos 0x00, ttl 253, length 134, checksum 0xa363

fragment id 0x0000

UDP: 29806 -> 4789

length 114, checksum 0x0000

Packet 8

17:39:13:781815: dpdk-input

GigabitEthernet2/3/0 rx queue 0

buffer 0x18eec0: current data 0, length 148, free-list 0, totlen-nifb 0, trace 0x7

PKT MBUF: port 2, nb_segs 1, pkt_len 148

buf_len 2304, data_len 148, ol_flags 0x0

IP4: 00:50:56:88:00:ac -> 00:50:56:88:90:33

UDP: 10.0.3.3 -> 10.0.3.1

tos 0x00, ttl 253, length 134, checksum 0xa363

fragment id 0x0000

UDP: 24742 -> 4789

length 114, checksum 0x0000

17:39:13:781819: ethernet-input

IP4: 00:50:56:88:00:ac -> 00:50:56:88:90:33

17:39:13:781823: ip4-input

UDP: 10.0.3.3 -> 10.0.3.1

tos 0x00, ttl 253, length 134, checksum 0xa363

fragment id 0x0000

UDP: 24742 -> 4789

length 114, checksum 0x0000

17:39:13:781825: ip4-local

fib: 1 adjacency: local 10.0.3.1/24 flow hash: 0x00000000

17:39:13:781827: ip4-udp-lookup

UDP: src-port 24742 dst-port 4789

17:39:13:781830: vxlan-input

VXLAN: tunnel 0 vni 13 next 1 error 0

17:39:13:781832: l2-input

l2-input: sw_if_index 9 dst de:ad:00:00:00:00 src 00:50:56:88:7b:68

17:39:13:781833: l2-learn

l2-learn: sw_if_index 9 dst de:ad:00:00:00:00 src 00:50:56:88:7b:68 bd_index 0

17:39:13:781835: l2-fwd

l2-fwd: sw_if_index 9 dst de:ad:00:00:00:00 src 00:50:56:88:7b:68 bd_index 0

17:39:13:781836: ip4-input

ICMP: 6.0.4.4 -> 7.0.0.2

tos 0x00, ttl 64, length 84, checksum 0x7002

fragment id 0xf9a1

ICMP echo_reply checksum 0xc377

17:39:13:781838: ip4-rewrite-transit

fib: 2 adjacency: loop1

00505688ca7edead000000010800 flow hash: 0x00000000

00000000: 00505688ca7edead00000001080045000054f9a100003f017102060004040700

00000020: 00020000c37719b1000481e02b5500000000aaca0c00000000001011

17:39:13:781840: l2-input

l2-input: sw_if_index 10 dst 00:50:56:88:ca:7e src de:ad:00:00:00:01

17:39:13:781842: l2-fwd

l2-fwd: sw_if_index 11 dst 00:50:56:88:ca:7e src de:ad:00:00:00:01 bd_index 1

17:39:13:781843: l2-output

l2-output: sw_if_index 5 dst 00:50:56:88:ca:7e src de:ad:00:00:00:01

17:39:13:781845: GigabitEthernet2/1/0-output

GigabitEthernet2/1/0

IP4: de:ad:00:00:00:01 -> 00:50:56:88:ca:7e

ICMP: 6.0.4.4 -> 7.0.0.2

tos 0x00, ttl 63, length 84, checksum 0x7102

fragment id 0xf9a1

ICMP echo_reply checksum 0xc377

17:39:13:781846: GigabitEthernet2/1/0-tx

GigabitEthernet2/1/0 tx queue 0

buffer 0x18eec0: current data 50, length 98, free-list 0, totlen-nifb 0, trace 0x7

IP4: de:ad:00:00:00:01 -> 00:50:56:88:ca:7e

ICMP: 6.0.4.4 -> 7.0.0.2

tos 0x00, ttl 63, length 84, checksum 0x7102

fragment id 0xf9a1

ICMP echo_reply checksum 0xc377
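The buffer lengths in the trace above are consistent with the fixed overhead of VXLAN encapsulation over IPv4: the 98-byte frame received in Packet 7 leaves as a 148-byte frame, and the tx buffer shows current data -50. The short calculation below reproduces these numbers from the standard header sizes (outer Ethernet 14, IPv4 without options 20, UDP 8, VXLAN 8). The variable names are illustrative only.

```python
# Standard header sizes in bytes (no VLAN tag, no IPv4 options).
ETHERNET_HDR = 14
IPV4_HDR = 20
UDP_HDR = 8
VXLAN_HDR = 8

inner_frame = 98  # "length 98" at dpdk-input for Packet 7

# Total bytes prepended by the VTEP to the original L2 frame.
overhead = ETHERNET_HDR + IPV4_HDR + UDP_HDR + VXLAN_HDR

outer_frame = inner_frame + overhead           # frame sent on the underlay
outer_ip_length = outer_frame - ETHERNET_HDR   # "length" in the outer IPv4 header
outer_udp_length = outer_ip_length - IPV4_HDR  # "length" in the outer UDP header

print(overhead, outer_frame, outer_ip_length, outer_udp_length)
```

The same 50 bytes are removed on the return path, which is why Packet 8 arrives with length 148 and is transmitted with length 98. This overhead should be taken into account when choosing the MTU of the underlay network.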

Restrictions

The following list of features might be useful to add in order to improve VXLAN functionality. However, these features are not supported in the current implementation:

- VXLAN multicast mode using IP multicast to flood VXLAN segments to other VTEPs.

- MAC aging to clear learned MAC entries which may be stale after a timeout period.