CSIT/VPP Device test design

Design Proposal: [VPP_Device] Integration Tests

Design Overview

The VPP_Device test environment design relies on multiple isolated SUT instances (e.g. VPP alone, or VPP with nested VMs/containers) running concurrently on the same compute node and exposed to packet traffic from the local NIC interfaces. Packets are generated and received by software traffic generator (TG) instances (i.e. Scapy) running on the same compute node.

Orchestration and CSIT Integration

VPP and Scapy run in Linux containers, with their lifecycle orchestrated by Nomad or K8s.

IP network layer connectivity is provided only for the first level of containers, which are reachable only from within the physical node (needs further explanation).

Nested containers are driven from within the CSIT framework, using existing libraries.

Proposal: run pybot on the same physical host where the containers run, instead of on Jenkins slaves (JS) as is done currently:

- Pros:
  - Eliminates the SSH connectivity issues from JS to simulated nodes.
  - Eliminates the issue with routing K8s CNI outside the physical host.

- Cons:
  - Bootstraps must be modified to run pybot on the host. Easy to do.
  - Requires proper cleanup of the physical host (can be done by running pybot in a container). Easy to do, with an extra level of caution.

Open points:

- Nested containers and nested VMs: need to verify restrictions and support for multiple Linux distros.

NIC Sharing

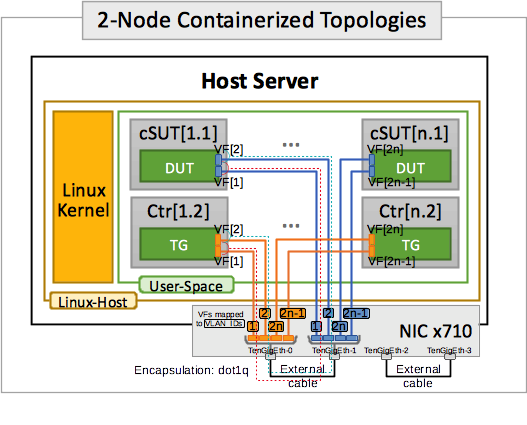

NIC interfaces are shared through SR-IOV VFs bound to the Linux vfio_pci driver and driven in VPP by one of two VF drivers: i) the DPDK VF driver, ii) the Fortville AVF driver.

The provided Intel x710 4p10GE NICs support 32 VFs per interface, i.e. 128 VFs per NIC.
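The following minimal sketch shows the host-side half of this sharing. It assumes the standard Linux sysfs interface (`sriov_numvfs`, `driver_override`, `drivers_probe`). It also assumes that VF PCI addresses are known in advance; in practice they can be read from the PF's `virtfn*` links once the VFs exist. The Python below builds the ordered writes that create VFs on one x710 port and hand them to vfio-pci. `vf_setup_plan` and `apply_plan` are hypothetical helpers, not CSIT library calls, and the PCI addresses used with them are made up.

```python
from __future__ import annotations

from pathlib import Path

# Standard Linux sysfs locations for PCI devices and drivers.
SYSFS_PCI = Path("/sys/bus/pci")
MAX_VFS_PER_PORT = 32  # x710 per-interface VF limit quoted in the text


def vf_setup_plan(pf_bdf: str, vf_bdfs: list[str]) -> list[tuple[str, str]]:
    """Return ordered (sysfs path, value) writes that create len(vf_bdfs) VFs
    on one PF and hand each VF to vfio-pci. Nothing is written here."""
    num_vfs = len(vf_bdfs)
    if not 0 < num_vfs <= MAX_VFS_PER_PORT:
        raise ValueError(f"need 1..{MAX_VFS_PER_PORT} VFs, got {num_vfs}")
    pf = SYSFS_PCI / "devices" / pf_bdf
    # sriov_numvfs must be reset to 0 before a new non-zero value is accepted.
    plan = [(str(pf / "sriov_numvfs"), "0"),
            (str(pf / "sriov_numvfs"), str(num_vfs))]
    for vf_bdf in vf_bdfs:
        vf = SYSFS_PCI / "devices" / vf_bdf
        plan.append((str(vf / "driver" / "unbind"), vf_bdf))    # release default VF driver (e.g. iavf)
        plan.append((str(vf / "driver_override"), "vfio-pci"))  # pin the next probe to vfio-pci
        plan.append((str(SYSFS_PCI / "drivers_probe"), vf_bdf))  # re-probe, which binds vfio-pci
    return plan


def apply_plan(plan: list[tuple[str, str]]) -> None:
    """Perform the writes; needs root on the real host."""
    for path, value in plan:
        target = Path(path)
        if target.name == "unbind" and not target.parent.exists():
            continue  # VF is not bound to any driver yet, nothing to unbind
        target.write_text(value)
```

Building the plan as plain data keeps it testable without hardware. Only `apply_plan` touches the host.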

Open points:

- Work out how to expose VFs to containers, and a scheme for deterministically mapping VFs (coupled with a dot1q tag on the wire) to SUT_container and TG_container.

- ARM: concern about SR-IOV on MACCHIATObin, as the current card does not support PCI. The ARM team is to find a solution and report back.
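The VF mapping open point could be solved with a scheme like the Python sketch below. It rests on hypothetical assumptions. Port p of the SUT NIC is cabled to port p of the TG NIC. Each SUT_container/TG_container pair (a "slot") uses two links. Each link gets its own dot1q tag, starting from a hypothetical base of 100. Because the mapping is a pure function of the slot number, every run can recompute it instead of storing state. `Link`, `max_slots` and `links_for_slot` are illustrative names only.

```python
from __future__ import annotations

from dataclasses import dataclass

PORTS_PER_NIC = 4    # x710 4p10GE
VFS_PER_PORT = 32    # VFs per interface
LINKS_PER_SLOT = 2   # hypothetical: two TG<->SUT links per container pair
VLAN_BASE = 100      # hypothetical first dot1q tag


@dataclass(frozen=True)
class Link:
    slot: int   # index of the SUT_container/TG_container pair
    port: int   # same port index on the SUT NIC and the TG NIC (cabled 1:1)
    vf: int     # same VF index on both ends of the wire
    vlan: int   # dot1q tag isolating this link on the shared wire


def max_slots() -> int:
    return PORTS_PER_NIC * VFS_PER_PORT // LINKS_PER_SLOT


def links_for_slot(slot: int) -> list[Link]:
    """Deterministic: the same slot always maps to the same VFs and tags."""
    if not 0 <= slot < max_slots():
        raise ValueError(f"slot must be in 0..{max_slots() - 1}, got {slot}")
    links = []
    for j in range(LINKS_PER_SLOT):
        index = slot * LINKS_PER_SLOT + j
        # Round-robin over ports, so consecutive links land on different wires.
        links.append(Link(slot=slot,
                          port=index % PORTS_PER_NIC,
                          vf=index // PORTS_PER_NIC,
                          vlan=VLAN_BASE + index))
    return links
```

Under these assumptions one host offers 64 slots, and slot 0 uses VF 0 on ports 0 and 1 with tags 100 and 101.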

Test Topology

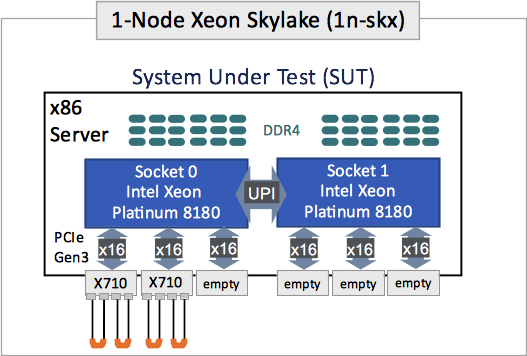

VPP_Device tests run on 1-Node Xeon and 1-Node Arm topologies.

There are two 1-Node Xeon topologies, each with two x710 NICs, providing 128 VFs for VPP and 128 VFs for the Scapy TG.
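The VF arithmetic above can be checked with a small Python helper. The even VPP/TG split mirrors the 128/128 figure in the text. `vf_budget` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VfBudget:
    per_nic: int
    per_topology: int
    for_vpp: int
    for_tg: int


def vf_budget(nics: int = 2, ports_per_nic: int = 4, vfs_per_port: int = 32) -> VfBudget:
    per_nic = ports_per_nic * vfs_per_port
    total = nics * per_nic
    # Assumption: VFs are split evenly between VPP and the Scapy TG.
    return VfBudget(per_nic, total, total // 2, total // 2)


budget = vf_budget()
print(budget)  # VfBudget(per_nic=128, per_topology=256, for_vpp=128, for_tg=128)
print("1n-skx fleet:", 2 * budget.per_topology, "VFs")  # two topologies -> 512 VFs
```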

1-Node Arm topologies are to be defined.

Physical View: 1-Node Xeon Skylake (1n-skx)

Logical View: 2-Node Containerized Topologies

Capacity Planning

Capacity should be driven by the increased demand for per-patch testing. We need to ensure a sufficient number of concurrent simulations (#simulations).

- Patch cadence is currently easy to check from Gerrit/Jenkins.

- Skylake NIC count is not the bottleneck.

- Skylake CPUs should be able to handle more simulations than VIRL (Haswell vs. Skylake, containers vs. VMs).
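Little's law (average jobs in flight = arrival rate × mean job duration) gives a rough way to turn patch cadence into a required number of concurrent simulations. The Python sketch below takes several hypothetical inputs: patches per hour (e.g. taken from Gerrit/Jenkins history), mean job duration in minutes, a headroom factor for bursts, and a per-host slot count. None of these values come from this design.

```python
import math


def required_simulations(patches_per_hour: float, job_minutes: float,
                         headroom: float = 1.5) -> int:
    """Rough estimate of concurrent simulations needed to keep up with per-patch jobs.

    Little's law: jobs in flight L = arrival rate (lambda) * time in system (W).
    `headroom` (hypothetical, >= 1) leaves room for bursts above the average cadence.
    """
    if patches_per_hour < 0 or job_minutes < 0 or headroom < 1:
        raise ValueError("rates and durations must be >= 0, headroom >= 1")
    in_flight = patches_per_hour * (job_minutes / 60.0)
    return math.ceil(in_flight * headroom)


def hosts_needed(simulations: int, slots_per_host: int) -> int:
    """Physical hosts needed for a given number of concurrent simulations."""
    if slots_per_host <= 0:
        raise ValueError("slots_per_host must be positive")
    return math.ceil(simulations / slots_per_host)
```

Take 12 patches/hour, 30-minute jobs and the default 1.5x headroom. `required_simulations(12, 30)` returns 9, and `hosts_needed(9, 64)` returns 1 if a host offers a hypothetical 64 slots.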

Functional Tests

- VPP NIC VF driver path tests:
  - startup.conf config variables.
    - (A) num-rx-queues=1..4, num-tx-queues=1..4.
    - (B) no-multi-seg=on/off.
    - (C) no-tx-checksum-offload=on/off.
  - #combinations.
    - 4 from 4 (A=1..4) x 1 (B=on) x 1 (C=on).
    - 1 from 1 (A=1) x 1 (B=on) x 1 (C=off).
    - 1 from 1 (A=1) x 1 (B=off) x 1 (C=on).
    - 1 from 1 (A=1) x 1 (B=off) x 1 (C=off).
    - 7 in sub-total.
  - VPP path combinations.
    - ip4base, ip6base, l2bdbasemaclrn.
    - 3 in sub-total.
  - total test combinations (startup x paths).
    - 21 tests (7 x 3).

- VPP memif functional tests:
  - memif tests.
    - baseline 2memif-1docker_container_vpp.
    - memif client reconnect.
    - 2 in sub-total.
  - VPP memif paths.
    - ip4base, ip6base, l2bdbasemaclrn.
    - 3 for baseline tests.
    - 1 for reconnect.
  - total test combinations (memif x paths).
    - 4 tests (1 x 3 + 1).

- VPP vhost functional tests:
  - vhost tests.
    - baseline 2vhost-1vm.
    - vhost client reconnect.
    - 2 in sub-total.
  - VPP vhost paths:
    - ip4base, ip6base, l2bdbasemaclrn.
    - 3 for baseline tests.
    - 1 for reconnect.
  - total test combinations (vhost x paths).
    - 4 tests (1 x 3 + 1).

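The test counts above can be cross-checked by enumerating the matrix. This Python sketch assumes that (A) sets num-rx-queues and num-tx-queues to the same value. It renders each startup variant as a dpdk stanza using `dev default`, `num-rx-queues`, `num-tx-queues`, `no-multi-seg` and `no-tx-checksum-offload`. Treat the stanza layout as illustrative and check it against the VPP startup.conf documentation for the VPP version under test. The reconnect test labels are hypothetical names. Their path is left as `None` because the text does not say which path they use.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import product

PATHS = ("ip4base", "ip6base", "l2bdbasemaclrn")


@dataclass(frozen=True)
class StartupVariant:
    queues: int                   # (A) num-rx-queues == num-tx-queues
    no_multi_seg: bool            # (B) on/off
    no_tx_checksum_offload: bool  # (C) on/off

    def dpdk_stanza(self) -> str:
        """Render the variant as an illustrative startup.conf dpdk section."""
        lines = ["dpdk {",
                 "  dev default {",
                 f"    num-rx-queues {self.queues}",
                 f"    num-tx-queues {self.queues}",
                 "  }"]
        if self.no_multi_seg:
            lines.append("  no-multi-seg")
        if self.no_tx_checksum_offload:
            lines.append("  no-tx-checksum-offload")
        lines.append("}")
        return "\n".join(lines)


def startup_variants() -> list[StartupVariant]:
    # 4 variants: A=1..4 with B=on, C=on.
    variants = [StartupVariant(q, True, True) for q in range(1, 5)]
    # 3 variants: A=1 with the remaining B/C on/off combinations.
    variants += [StartupVariant(1, b, c)
                 for b, c in product((True, False), repeat=2)
                 if (b, c) != (True, True)]
    return variants


def functional_matrix() -> dict[str, list[tuple]]:
    """(test, path) pairs per group; reconnect tests carry no path (None)."""
    return {
        "nic_vf": [(v, p) for v in startup_variants() for p in PATHS],
        "memif": ([("2memif-1docker_container_vpp", p) for p in PATHS]
                  + [("memif-client-reconnect", None)]),
        "vhost": ([("2vhost-1vm", p) for p in PATHS]
                  + [("vhost-client-reconnect", None)]),
    }


if __name__ == "__main__":
    matrix = functional_matrix()
    for group, tests in matrix.items():
        print(group, len(tests))  # nic_vf 21, then memif 4, then vhost 4
    print("total", sum(len(t) for t in matrix.values()))  # total 29
    print(startup_variants()[0].dpdk_stanza())
```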

Support for Linux Distros

Requirement for multiple Linux distribution support:

- ubuntu

- centos

- suse