VPP/HostStack

Revision as of 15:38, 23 October 2020 by Florin.coras


Description

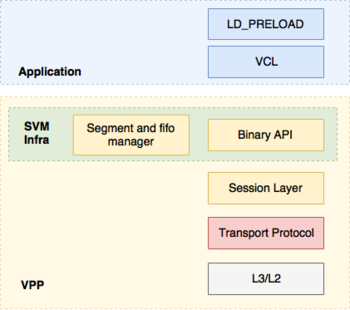

VPP's host stack is a user space implementation of a number of transport, session and application layer protocols that leverages VPP's existing protocol stack. It roughly consists of four major components:

- Session Layer that accepts pluggable transport protocols

- Shared memory mechanisms for pushing data between VPP and applications

- Transport protocol implementations (e.g. TCP, QUIC, TLS, UDP)

- VPP Comms Library (VCL) and LD_PRELOAD library
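The shared-memory component is easiest to picture as a byte ring that one side fills and the other drains. The sketch below is a hypothetical, simplified single-producer/single-consumer fifo written only for illustration; it is not VPP's own fifo code, and the names `demo_fifo_t`, `demo_fifo_enqueue` and `demo_fifo_dequeue` are invented here. It assumes exactly one producer and one consumer, with free-running head/tail counters published through C11 atomics. A real shared-memory fifo would also have to live in a segment mapped by both VPP and the application and would need a way to notify the peer; both are omitted.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical demo fifo: capacity must be a power of two so that the
 * free-running counters can be mapped to slots with a simple mask. */
#define DEMO_FIFO_SIZE 16u
_Static_assert ((DEMO_FIFO_SIZE & (DEMO_FIFO_SIZE - 1)) == 0,
                "DEMO_FIFO_SIZE must be a power of two");

typedef struct
{
  _Atomic uint32_t head; /* total bytes consumed; written only by consumer */
  _Atomic uint32_t tail; /* total bytes produced; written only by producer */
  uint8_t data[DEMO_FIFO_SIZE];
} demo_fifo_t;

void
demo_fifo_init (demo_fifo_t *f)
{
  assert (f != NULL);
  atomic_init (&f->head, 0);
  atomic_init (&f->tail, 0);
}

/* Producer side: copy up to len bytes in; returns bytes actually written. */
uint32_t
demo_fifo_enqueue (demo_fifo_t *f, const uint8_t *buf, uint32_t len)
{
  uint32_t tail = atomic_load_explicit (&f->tail, memory_order_relaxed);
  /* Acquire pairs with the consumer's release, so freed slots are safe. */
  uint32_t head = atomic_load_explicit (&f->head, memory_order_acquire);
  uint32_t space = DEMO_FIFO_SIZE - (tail - head); /* unsigned wrap is fine */
  uint32_t n = len < space ? len : space;
  for (uint32_t i = 0; i < n; i++)
    f->data[(tail + i) & (DEMO_FIFO_SIZE - 1)] = buf[i];
  /* Release makes the copied bytes visible before the new tail. */
  atomic_store_explicit (&f->tail, tail + n, memory_order_release);
  return n;
}

/* Consumer side: copy up to len bytes out; returns bytes actually read. */
uint32_t
demo_fifo_dequeue (demo_fifo_t *f, uint8_t *buf, uint32_t len)
{
  uint32_t head = atomic_load_explicit (&f->head, memory_order_relaxed);
  /* Acquire pairs with the producer's release on tail. */
  uint32_t tail = atomic_load_explicit (&f->tail, memory_order_acquire);
  uint32_t avail = tail - head;
  uint32_t n = len < avail ? len : avail;
  for (uint32_t i = 0; i < n; i++)
    buf[i] = f->data[(head + i) & (DEMO_FIFO_SIZE - 1)];
  atomic_store_explicit (&f->head, head + n, memory_order_release);
  return n;
}
```

Because each counter has a single writer, no locks are needed: the producer only ever advances `tail` and the consumer only ever advances `head`.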

Documentation

Set Up Dev Environment - Explains how to set up a VPP development environment and the requirements for using the build tools

Session Layer Architecture - Goes over the main features of the session layer

TLS Application - Describes the TLS Application Layer protocol implementation

Getting Started

Applications can link against the following APIs for host-stack service:

- Builtin C API. It can only be used by applications hosted within VPP.

- "Raw" session layer API. It does not offer any support for asynchronous communication.

- VCL API, which offers a POSIX-like interface. It comes with its own epoll implementation.

- POSIX API through LD_PRELOAD.
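The LD_PRELOAD option means that an ordinary sockets program can, in principle, be pointed at the host stack without code changes. The helpers below are a minimal sketch that uses only standard POSIX calls (`socket`, `connect`, `write`, `read`); the function names are hypothetical. The assumption is that when the VCL preload library from the VPP build is injected with LD_PRELOAD, these calls on a TCP socket are redirected to VPP; without it they go to the kernel stack as usual.

```c
#define _POSIX_C_SOURCE 200809L
#include <arpa/inet.h>
#include <assert.h>
#include <errno.h>
#include <netinet/in.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Open a blocking IPv4 TCP connection to ip:port. Returns fd or -1. */
int
demo_tcp_connect (const char *ip, uint16_t port)
{
  struct sockaddr_in addr = { 0 };
  addr.sin_family = AF_INET;
  addr.sin_port = htons (port);
  if (inet_pton (AF_INET, ip, &addr.sin_addr) != 1)
    return -1; /* not a valid dotted-quad address */
  int fd = socket (AF_INET, SOCK_STREAM, 0);
  if (fd < 0)
    return -1;
  if (connect (fd, (struct sockaddr *) &addr, sizeof (addr)) < 0)
    {
      close (fd);
      return -1;
    }
  return fd;
}

/* Write all len bytes, retrying on short writes and EINTR.
 * Callers may want to ignore SIGPIPE if the peer can close early. */
int
demo_send_all (int fd, const void *buf, size_t len)
{
  const char *p = buf;
  while (len > 0)
    {
      ssize_t n = write (fd, p, len);
      if (n < 0)
        {
          if (errno == EINTR)
            continue;
          return -1;
        }
      p += n;
      len -= (size_t) n;
    }
  return 0;
}

/* Read exactly len bytes. Returns 0 on success, -1 on error or early EOF. */
int
demo_recv_exact (int fd, void *buf, size_t len)
{
  char *p = buf;
  while (len > 0)
    {
      ssize_t n = read (fd, p, len);
      if (n < 0 && errno == EINTR)
        continue;
      if (n <= 0)
        return -1;
      p += n;
      len -= (size_t) n;
    }
  return 0;
}
```

A program built from these helpers would then be launched along the lines of `LD_PRELOAD=/path/to/libvcl_ldpreload.so ./my_app`, where the library path and `my_app` are placeholders; check the library name and any VCL configuration it expects against your VPP build.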

A number of test applications can be used to exercise these APIs. All the examples below assume that two VPP instances have been brought up and configured so that they have network connectivity to each other; the builtin ping tool can be used to verify this. By convention, the first VPP instance (vpp1) is the one the server attaches to and the second instance (vpp2) is the one the client application attaches to. For illustrative purposes, all examples use TCP as the transport protocol, but the other available protocols could be used as well.

Builtin Echo Server/Client

On vpp1, from the CLI, run:

vpp# test echo server uri tcp://vpp1_ip/port

and on vpp2:

vpp# test echo client uri tcp://vpp1_ip/port
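Both commands identify the server endpoint with a URI of the form `proto://ip/port`. The function below is a hypothetical helper, not VPP's own URI parser, that splits such a string into its three parts to make the expected shape concrete. It assumes an address without brackets, takes the port from the last `/`, and does not validate the address text itself.

```c
#include <assert.h>
#include <ctype.h>
#include <errno.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

typedef struct
{
  char proto[8]; /* e.g. "tcp", "udp", "quic" */
  char ip[64];   /* address text, copied as-is */
  uint16_t port;
} demo_uri_t;

/* Split "proto://ip/port". Returns 0 on success, -1 if malformed. */
int
demo_parse_uri (const char *uri, demo_uri_t *out)
{
  const char *sep = strstr (uri, "://");
  if (!sep || sep == uri || (size_t) (sep - uri) >= sizeof (out->proto))
    return -1;
  const char *host = sep + 3;
  const char *slash = strrchr (host, '/'); /* port follows the last '/' */
  if (!slash || slash == host || (size_t) (slash - host) >= sizeof (out->ip))
    return -1;
  if (!isdigit ((unsigned char) slash[1]))
    return -1; /* empty or non-numeric port */
  char *end;
  errno = 0;
  unsigned long port = strtoul (slash + 1, &end, 10);
  if (*end != '\0' || errno != 0 || port > 65535)
    return -1;
  memcpy (out->proto, uri, (size_t) (sep - uri));
  out->proto[sep - uri] = '\0';
  memcpy (out->ip, host, (size_t) (slash - host));
  out->ip[slash - host] = '\0';
  out->port = (uint16_t) port;
  return 0;
}
```

For example, `demo_parse_uri ("tcp://6.0.1.1/1234", &u)` fills `u.proto` with `tcp`, `u.ip` with `6.0.1.1` and `u.port` with 1234; the address is only sample data.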

For more details on how to further configure the client and server apps for throughput and connections-per-second (CPS) testing, see here.
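Throughput and CPS figures are simple ratios over a measured interval. As a worked sketch, assuming the byte count, connection count and elapsed time have already been collected (the helper names are hypothetical), the conversions are:

```c
#include <assert.h>
#include <stdint.h>

/* Throughput in gigabits per second: bytes * 8 bits / seconds / 1e9. */
double
demo_throughput_gbps (uint64_t bytes, double seconds)
{
  assert (seconds > 0.0);
  return (double) bytes * 8.0 / seconds / 1e9;
}

/* Connections per second: completed connections / elapsed seconds. */
double
demo_cps (uint64_t connections, double seconds)
{
  assert (seconds > 0.0);
  return (double) connections / seconds;
}
```

For instance, 1,250,000,000 bytes moved in 1 s corresponds to 10 Gbps, and 100,000 connections completed in 2 s corresponds to 50,000 CPS.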

External Echo Server/Client (vpp_echo)

The VPP external echo server/client test application (vpp_echo) is a bespoke performance test application that supports all of the VPP HostStack transport layer protocols:

- tcp

- udp

- quic

vpp_echo uses the native HostStack APIs to verify performance and the correct handling of connection and stream events with unidirectional and bidirectional streams of data. It can be found in the build tree at

$ ./build-root/build-vpp[_debug]-native/vpp/bin/vpp_echo

and, as of VPP 20.01, is included in the main vpp Debian package (e.g. vpp_20.01-release-amd64.deb).

Start vpp1 and attach the server application:

$ ./build-root/build-vpp_debug-native/vpp/bin/vpp_echo uri tcp://vpp1_ip/port

Then start vpp2 and attach the client:

$ ./build-root/build-vpp_debug-native/vpp/bin/vpp_echo client uri tcp://vpp1_ip/port

The current command line options for vpp_echo are:

    Usage: vpp_echo [socket-name SOCKET] [client|server] [uri URI] [OPTIONS]
    Generates traffic and assert correct teardown of the hoststack

      socket-name PATH    Specify the binary socket path to connect to VPP
      use-svm-api         Use SVM API to connect to VPP
      test-bytes[:assert] Check data correctness when receiving (assert fails on first error)
      fifo-size N[K|M|G]  Use N[K|M|G] fifos
      mq-size N           Use mq with N slots for [vpp_echo->vpp] communication
      max-sim-connects N  Do not allow more than N mq events inflight
      rx-buf N[K|M|G]     Use N[Kb|Mb|GB] RX buffer
      tx-buf N[K|M|G]     Use N[Kb|Mb|GB] TX test buffer
      appns NAMESPACE     Use the namespace NAMESPACE
      all-scope           all-scope option
      local-scope         local-scope option
      global-scope        global-scope option
      secret SECRET       set namespace secret
      chroot prefix PATH  Use PATH as memory root path
      sclose=[Y|N|W]      When stream is done, send[Y]|nop[N]|wait[W] for close
      nuris N             Cycle through N consecutive (src&dst) ips when creating connections
      lcl IP              Set the local ip to use as a client (use with nuris to set first src ip)
      time START:END      Time between evts START & END, events being :
                           start      - Start of the app
                           qconnect   - first Connection connect sent
                           qconnected - last Connection connected
                           sconnect   - first Stream connect sent
                           sconnected - last Stream got connected
                           lastbyte   - Last expected byte received
                           exit       - Exiting of the app
      rx-results-diff     Rx results different to pass test
      tx-results-diff     Tx results different to pass test
      json                Output global stats in json
      stats N             Output stats every N secs
      log=N               Set the log level to [0: no output, 1:errors, 2:log]
      crypto [engine]     Set the crypto engine [openssl, vpp, picotls, mbedtls]
      nclients N          Open N clients sending data
      nthreads N          Use N busy loop threads for data [in addition to main & msg queue]
      TX=1337[K|M|G]|RX   Send 1337 [K|M|G]bytes, use TX=RX to reflect the data
      RX=1337[K|M|G]      Expect 1337 [K|M|G]bytes

      -- QUIC specific options --
      quic-setup OPT      OPT=serverstream : Client open N connections.
                           On each one server opens M streams
                          OPT=default : Client open N connections.
                           On each one client opens M streams
      qclose=[Y|N|W]      When connection is done send[Y]|nop[N]|wait[W] for close
      uni                 Use unidirectional streams
      quic-streams N      Open N QUIC streams (defaults to 1)

      Default configuration is :
      server nclients 1 [quic-streams 1] RX=64Kb TX=RX
      client nclients 1 [quic-streams 1] RX=64Kb TX=64Kb
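Several of the options above (fifo-size, rx-buf, tx-buf, TX=, RX=) take a count with an optional K, M or G suffix. The parser below is a hypothetical illustration of that convention, not the code vpp_echo itself uses. It assumes binary multiples (K = 1024) and, to match the `RX=64Kb` form in the default configuration, tolerates a trailing `b`/`B` that it treats as bytes; confirm both assumptions against the vpp_echo source before relying on exact byte counts.

```c
#include <assert.h>
#include <ctype.h>
#include <errno.h>
#include <stdint.h>
#include <stdlib.h>

/* Parse "N", "NK", "NM" or "NG" (case-insensitive, optional trailing 'b').
 * Assumes binary multiples. Returns 0 on success, -1 on bad input/overflow. */
int
demo_parse_size (const char *s, uint64_t *out)
{
  if (!isdigit ((unsigned char) s[0]))
    return -1; /* reject empty strings, signs and leading spaces */
  char *end;
  errno = 0;
  unsigned long long n = strtoull (s, &end, 10);
  if (errno == ERANGE)
    return -1;
  unsigned shift = 0;
  switch (*end)
    {
    case 'k': case 'K': shift = 10; end++; break;
    case 'm': case 'M': shift = 20; end++; break;
    case 'g': case 'G': shift = 30; end++; break;
    default: break;
    }
  if (*end == 'b' || *end == 'B')
    end++; /* assumed to mean bytes, as in "64Kb" */
  if (*end != '\0')
    return -1;
  if (shift && n > (UINT64_MAX >> shift))
    return -1; /* result would not fit in 64 bits */
  *out = (uint64_t) n << shift;
  return 0;
}
```

Under these assumptions, `64K` and `64Kb` both yield 65536 bytes and `2M` yields 2097152 bytes.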

VCL socket client/server

For more details, see the tutorial here.

Tutorials/Test Apps

Running List of Presentations

- DPDK Summit North America 2017

- FD.io Mini Summit KubeCon 2017

- FD.io Mini Summit KubeCon Europe 2018

- FD.io Mini Summit KubeCon NA 2018 slides and video

- FD.io Mini Summit KubeCon EU 2019 slides and video

- EnvoyCon 2020 slides