VPP/Use VPP to Chain VMs Using Vhost-User Interface

Each of the examples below connects a physical interface to a vhost-user interface that is consumed by either a VM or a Clear Container. The following startup.conf can be used for all of the examples.

unix {

nodaemon

log /var/log/vpp/vpp.log

full-coredump

cli-listen localhost:5002

}

api-trace {

on

}

api-segment {

gid vpp

}

cpu {

main-core 1

corelist-workers 2-6

}

dpdk {

dev 0000:05:00.1

dev 0000:05:00.2

socket-mem 2048,0

no-multi-seg

}

Note the devices listed for DPDK, 0000:05:00.1 and 0000:05:00.2. These are the PCI addresses of the NIC ports on this particular test host; substitute the addresses that match your own hardware.
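The interface names used in the examples that follow (for instance TenGigabitEthernet5/0/1) are derived from these PCI addresses. As a rough sketch, assuming VPP's DPDK plugin names each port with the PCI bus, slot and function printed in hexadecimal without leading zeros, the hypothetical helper `pci_to_vpp_suffix` below computes the numeric part of the name. The `TenGigabitEthernet` prefix depends on the NIC and is not derived here.

```shell
#!/bin/sh
# pci_to_vpp_suffix is a hypothetical helper, not part of VPP.
# Assumption: VPP names a DPDK port <Prefix><bus>/<slot>/<function>, each in hex.
pci_to_vpp_suffix() {
    case "$1" in
        *:*:*) addr=${1#*:} ;;   # drop the PCI domain: 0000:05:00.1 -> 05:00.1
        *)     addr=$1 ;;        # already in short form: 05:00.1
    esac
    bus=${addr%%:*}              # 05
    rest=${addr#*:}              # 00.1
    slot=${rest%.*}              # 00
    func=${rest#*.}              # 1
    printf '%x/%x/%x\n' "0x$bus" "0x$slot" "0x$func"
}

pci_to_vpp_suffix 0000:05:00.1   # prints 5/0/1
pci_to_vpp_suffix 0000:05:00.2   # prints 5/0/2
```

On the test host described here, the two results correspond to TenGigabitEthernet5/0/1 and TenGigabitEthernet5/0/2.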

QEMU Instance with two VPP Vhost-User Interfaces

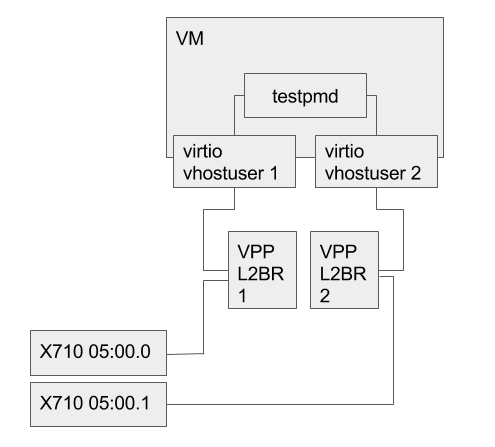

For performance testing, a useful topology is to pass traffic between two physical interfaces by way of a VM. In our first example, let's look at PHY -> vhost-user -> VM (L2FWD) -> vhost-user -> PHY. To achieve this, the VM needs two things: two vhost-user interfaces, and DPDK support so that it can run a simple port-to-port forwarder (such as testpmd).

Setup of Network Components on Host

- 1. View interfaces and put into 'up' state

vpp# show interfaces

Name Idx State Counter Count

TenGigabitEthernet5/0/1 1 down

TenGigabitEthernet5/0/2 2 down

vpp# set interface state TenGigabitEthernet5/0/1 up

vpp# set interface state TenGigabitEthernet5/0/2 up

vpp# show interfaces

Name Idx State Counter Count

TenGigabitEthernet5/0/1 1 up

TenGigabitEthernet5/0/2 2 up

- 2. Connect each physical interface to an L2 bridge

vpp# set interface l2 bridge TenGigabitEthernet5/0/1 1

vpp# set interface l2 bridge TenGigabitEthernet5/0/2 2

- 3. Create, bring up and add vhost-user interfaces to L2 bridges

vpp# create vhost-user socket /var/run/vpp/sock1.sock server

VirtualEthernet0/0/0

vpp# create vhost-user socket /var/run/vpp/sock2.sock server

VirtualEthernet0/0/1

vpp# set interface state VirtualEthernet0/0/0 up

vpp# set interface state VirtualEthernet0/0/1 up

vpp# set interface l2 bridge VirtualEthernet0/0/0 1

vpp# set interface l2 bridge VirtualEthernet0/0/1 2

vpp# show interface

Name Idx State Counter Count

TenGigabitEthernet5/0/1 1 up

TenGigabitEthernet5/0/2 2 up

VirtualEthernet0/0/0 3 up

VirtualEthernet0/0/1 4 up

local0 0 down

- 4. Show resulting bridge setup

vpp# show bridge 1 detail

ID Index Learning U-Forwrd UU-Flood Flooding ARP-Term BVI-Intf

1 1 on on on on off N/A

Interface Index SHG BVI TxFlood VLAN-Tag-Rewrite

TenGigabitEthernet5/0/1 1 0 - * none

VirtualEthernet0/0/0 3 0 - * none

vpp# show bridge 2 detail

ID Index Learning U-Forwrd UU-Flood Flooding ARP-Term BVI-Intf

2 2 on on on on off N/A

Interface Index SHG BVI TxFlood VLAN-Tag-Rewrite

TenGigabitEthernet5/0/2 2 0 - * none

VirtualEthernet0/0/1 4 0 - * none

Launching VM to use VPP connections

taskset 3C0 qemu-system-x86_64 \
    -enable-kvm -m 8192 -smp cores=4,threads=0,sockets=1 -cpu host \
    -drive file="ubuntu-16.04-server-cloudimg-amd64-disk1.img",if=virtio,aio=threads \
    -drive file="seed.img",if=virtio,aio=threads \
    -nographic -object memory-backend-file,id=mem,size=8192M,mem-path=/dev/hugepages,share=on \
    -numa node,memdev=mem \
    -mem-prealloc \
    -chardev socket,id=char1,path=/var/run/vpp/sock1.sock \
    -netdev type=vhost-user,id=net1,chardev=char1,vhostforce \
    -device virtio-net-pci,netdev=net1,mac=00:00:00:00:00:01,csum=off,gso=off,guest_tso4=off,guest_tso6=off,guest_ecn=off,mrg_rxbuf=off \
    -chardev socket,id=char2,path=/var/run/vpp/sock2.sock \
    -netdev type=vhost-user,id=net2,chardev=char2,vhostforce \
    -device virtio-net-pci,netdev=net2,mac=00:00:00:00:00:02,csum=off,gso=off,guest_tso4=off,guest_tso6=off,guest_ecn=off,mrg_rxbuf=off
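The first argument to taskset is a hexadecimal CPU affinity mask, which is easy to misread. The sketch below uses a hypothetical helper, `mask_to_cpus`, to list the CPU numbers that a mask selects: bit N of the mask corresponds to CPU N. It is only an illustration for checking which cores QEMU is pinned to.

```shell
#!/bin/sh
# mask_to_cpus is a hypothetical helper: it prints the CPU numbers whose
# bits are set in a hexadecimal affinity mask such as the one given to taskset.
mask_to_cpus() {
    hex=${1#0x}                  # accept both "3C0" and "0x3C0"
    mask=$((0x$hex))
    cpu=0
    out=""
    while [ "$mask" -gt 0 ]; do
        if [ $((mask & 1)) -eq 1 ]; then
            out="${out:+$out }$cpu"   # append, space-separated
        fi
        mask=$((mask >> 1))
        cpu=$((cpu + 1))
    done
    printf '%s\n' "$out"
}

mask_to_cpus 3C0     # prints 6 7 8 9
```

For the mask 3C0 used here the result is CPUs 6 to 9. The startup.conf shown earlier assigns cores 2-6 to VPP workers, so CPU 6 appears in both sets. Whether that overlap matters depends on the host, but it may be worth checking before taking performance measurements.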

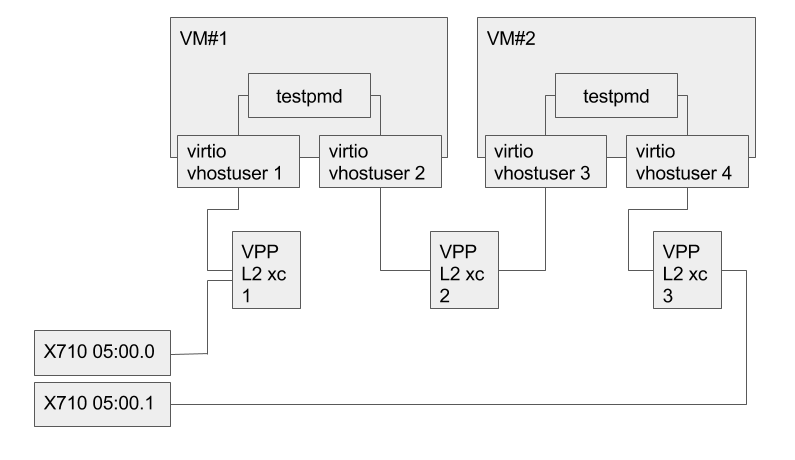

Two Chained QEMU Instances with VPP Vhost-User Interfaces

Taking the prior example further, let's look at chaining two QEMU instances together so that we have PHY -> VM -> VM -> PHY, with connectivity provided by VPP L2 bridges. Since each bridge has only two interfaces attached to it, an optimization would be to make use of VPP L2 xconnect.
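As a minimal sketch of the xconnect alternative: VPP's `set interface l2 xconnect <interface> <peer-interface>` command configures one direction only, so each pair of interfaces needs two commands. The helper name `xconnect_pair` and the `VPPCTL` variable are illustrative assumptions, not part of VPP. By default the script only prints the commands; set `VPPCTL="sudo vppctl"` to apply them.

```shell
#!/bin/sh
# Dry run by default: print the commands instead of running them.
# Set VPPCTL="sudo vppctl" in the environment to apply them to a running VPP.
VPPCTL=${VPPCTL:-echo vppctl}

# xconnect_pair is a hypothetical helper that cross-connects two interfaces
# in both directions, since an L2 xconnect is unidirectional.
xconnect_pair() {
    $VPPCTL set interface l2 xconnect "$1" "$2"
    $VPPCTL set interface l2 xconnect "$2" "$1"
}

# Replace a two-port bridge with a cross-connect between a physical port
# and the vhost-user interface it feeds.
xconnect_pair TenGigabitEthernet5/0/1 VirtualEthernet0/0/0
```

An interface is either in a bridge domain or cross-connected, not both, so this would be used in place of the corresponding `set interface l2 bridge` commands rather than in addition to them.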

Setup of Networking Components on Host

# Bring up the physical endpoints
sudo vppctl set interface state TenGigabitEthernet5/0/1 up
sudo vppctl set interface state TenGigabitEthernet5/0/2 up
sudo vppctl show interfaces

# Connect each physical interface to an L2 bridge
sudo vppctl set interface l2 bridge TenGigabitEthernet5/0/1 1
sudo vppctl set interface l2 bridge TenGigabitEthernet5/0/2 3

# Create and bring up four vhost-user interfaces
sudo vppctl create vhost-user socket /var/run/vpp/sock1.sock server
sudo vppctl create vhost-user socket /var/run/vpp/sock2.sock server
sudo vppctl create vhost-user socket /var/run/vpp/sock3.sock server
sudo vppctl create vhost-user socket /var/run/vpp/sock4.sock server
sudo vppctl set interface state VirtualEthernet0/0/0 up
sudo vppctl set interface state VirtualEthernet0/0/1 up
sudo vppctl set interface state VirtualEthernet0/0/2 up
sudo vppctl set interface state VirtualEthernet0/0/3 up

# Add virtual interfaces to L2 bridges
sudo vppctl set interface l2 bridge VirtualEthernet0/0/0 1
sudo vppctl set interface l2 bridge VirtualEthernet0/0/1 2
sudo vppctl set interface l2 bridge VirtualEthernet0/0/2 2
sudo vppctl set interface l2 bridge VirtualEthernet0/0/3 3

# Show bridge setup
sudo vppctl show bridge-domain 1 detail
sudo vppctl show bridge-domain 2 detail
sudo vppctl show bridge-domain 3 detail
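The bridge assignment above follows a regular pattern that extends to longer chains: a chain of N VMs uses 2N vhost-user interfaces and N+1 bridges, and interface number k lands in bridge (k+1)/2 + 1 using integer division. The sketch below generates those commands. It assumes the vhost-user interfaces are created in order and named VirtualEthernet0/0/0 upward, as in the listing above. `chain_bridges` and `VPPCTL` are hypothetical names, and the script prints the commands unless `VPPCTL="sudo vppctl"` is set.

```shell
#!/bin/sh
# Dry run by default: print the commands instead of running them.
VPPCTL=${VPPCTL:-echo vppctl}

# chain_bridges N: place the 2N vhost-user interfaces of an N-VM chain
# into bridges 1..N+1 so that neighbouring VMs share a bridge.
chain_bridges() {
    n_vms=$1
    last=$((2 * n_vms - 1))
    k=0
    while [ "$k" -le "$last" ]; do
        bd=$(( (k + 1) / 2 + 1 ))     # 0->1, 1->2, 2->2, 3->3, ...
        $VPPCTL set interface l2 bridge "VirtualEthernet0/0/$k" "$bd"
        k=$((k + 1))
    done
}

chain_bridges 2
```

With N=2 this reproduces the four vhost-user `set interface l2 bridge` commands shown above. The two physical interfaces still go into the first and last bridges (1 and N+1) separately.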

Launching VMs to use VPP Connections

- Launch first VM in chain:

taskset 3c000 qemu-system-x86_64 \
    -enable-kvm -m 8192 -smp cores=4,threads=0,sockets=1 -cpu host \
    -drive file="ubuntu-16.04-server-cloudimg-amd64-disk1.img",if=virtio,aio=threads \
    -drive file="seed.img",if=virtio,aio=threads \
    -nographic -object memory-backend-file,id=mem,size=8192M,mem-path=/dev/hugepages,share=on \
    -numa node,memdev=mem \
    -mem-prealloc \
    -chardev socket,id=char1,path=/var/run/vpp/sock1.sock \
    -netdev type=vhost-user,id=net1,chardev=char1,vhostforce \
    -device virtio-net-pci,netdev=net1,mac=00:00:00:00:00:01,csum=off,gso=off,guest_tso4=off,guest_tso6=off,guest_ecn=off,mrg_rxbuf=off \
    -chardev socket,id=char2,path=/var/run/vpp/sock2.sock \
    -netdev type=vhost-user,id=net2,chardev=char2,vhostforce \
    -device virtio-net-pci,netdev=net2,mac=00:00:00:00:00:02,csum=off,gso=off,guest_tso4=off,guest_tso6=off,guest_ecn=off,mrg_rxbuf=off

- Launch second VM in chain:

taskset 3c00 qemu-system-x86_64 \
    -enable-kvm -m 8192 -smp cores=4,threads=0,sockets=1 -cpu host \
    -drive file="ubuntu-16.04-server-cloudimg-amd64-disk1.img",if=virtio,aio=threads \
    -drive file="seed.img",if=virtio,aio=threads \
    -nographic -object memory-backend-file,id=mem,size=8192M,mem-path=/dev/hugepages,share=on \
    -numa node,memdev=mem \
    -mem-prealloc \
    -chardev socket,id=char1,path=/var/run/vpp/sock3.sock \
    -netdev type=vhost-user,id=net1,chardev=char1,vhostforce \
    -device virtio-net-pci,netdev=net1,mac=00:00:00:00:00:03,csum=off,gso=off,guest_tso4=off,guest_tso6=off,guest_ecn=off,mrg_rxbuf=off \
    -chardev socket,id=char2,path=/var/run/vpp/sock4.sock \
    -netdev type=vhost-user,id=net2,chardev=char2,vhostforce \
    -device virtio-net-pci,netdev=net2,mac=00:00:00:00:00:04,csum=off,gso=off,guest_tso4=off,guest_tso6=off,guest_ecn=off,mrg_rxbuf=off